The Cyber Express Weekly Roundup: Escalating Breaches, Regulatory Crackdowns, and Global Cybercrime Developments

```javascript
const OPENAI_API_KEY = "sk-proj-XXXXXXXXXXXXXXXXXXXXXXXX";
```

```javascript
const OPENAI_API_KEY = "sk-svcacct-XXXXXXXXXXXXXXXXXXXXXXXX";
```

The `sk-proj-` prefix typically denotes a project-scoped key tied to a specific environment and billing configuration. The `sk-svcacct-` prefix generally represents a service-account key intended for backend automation or system-level integration. Despite their differing scopes, both function as privileged authentication tokens granting direct access to AI inference services and billing resources. Embedding these keys in client-side JavaScript fully exposes them. Attackers do not need to breach infrastructure or exploit software vulnerabilities; they simply harvest what is publicly available.
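
Because harvesting these keys is largely pattern matching over publicly served files, defenders can run the same check on their own bundles first. The sketch below is a minimal, hypothetical scanner (`findExposedKeys` and `KEY_PATTERN` are illustrative names, not Cyble or OpenAI tooling). It assumes a key is one of the two prefixes followed by at least 20 URL-safe characters, which may not match every real key format, so hits are leads rather than proof.

```javascript
// Hypothetical heuristic scanner: flags OpenAI-style key prefixes in bundle text.
// ASSUMPTION: a key is "sk-proj-" or "sk-svcacct-" followed by 20+ URL-safe
// characters; real key formats may differ, so treat hits as leads, not proof.
const KEY_PATTERN = /\bsk-(proj|svcacct)-[A-Za-z0-9_-]{20,}/g;

function findExposedKeys(bundleText) {
  const findings = [];
  for (const match of bundleText.matchAll(KEY_PATTERN)) {
    const prefixLength = "sk-".length + match[1].length + "-".length;
    findings.push({
      scope: match[1] === "proj" ? "project-scoped" : "service-account",
      offset: match.index,
      // Keep only the prefix so the report itself does not re-leak the secret.
      preview: match[0].slice(0, prefixLength) + "...",
    });
  }
  return findings;
}

// Usage: scan the text of a JavaScript file that is served to browsers.
const sampleBundle = 'const OPENAI_API_KEY = "sk-proj-' + "A".repeat(24) + '";';
console.log(findExposedKeys(sampleBundle));
```

Redacting the preview matters in practice: scan reports often end up in tickets or chat, which would otherwise become a second leak.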

Cyble Vision indicates API key exposure leak (Source: Cyble Vision)

Unlike traditional cloud infrastructure, AI API activity is often not integrated into centralized logging systems, SIEM platforms, or anomaly detection pipelines. As a result, abuse can persist undetected until billing spikes, quota exhaustion, or degraded service performance reveal the compromise.
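
Where AI API usage never reaches a SIEM, even a crude baseline check on exported usage or billing data can shorten the time before a spike is noticed. This is a minimal sketch under stated assumptions: `flagSpendSpikes` is a hypothetical helper, its input is an array of daily totals you export yourself, and the 7-day window and 3-standard-deviation threshold are illustrative choices, not values from Cyble.

```javascript
// Hypothetical helper: flag days whose spend is far above the trailing baseline.
// ASSUMPTIONS: `dailySpend` holds per-day totals (any currency unit); the window
// and threshold defaults are illustrative and should be tuned to real usage.
function flagSpendSpikes(dailySpend, windowSize = 7, threshold = 3) {
  const alerts = [];
  for (let day = windowSize; day < dailySpend.length; day++) {
    const recent = dailySpend.slice(day - windowSize, day);
    const mean = recent.reduce((sum, v) => sum + v, 0) / windowSize;
    const variance = recent.reduce((sum, v) => sum + (v - mean) ** 2, 0) / windowSize;
    // Floor the spread at 5% of the mean so a perfectly flat baseline still alerts.
    const spread = Math.max(Math.sqrt(variance), mean * 0.05);
    if (dailySpend[day] > mean + threshold * spread) {
      alerts.push({ day, spend: dailySpend[day], baseline: Number(mean.toFixed(2)) });
    }
  }
  return alerts;
}

// Usage: a quiet week followed by a sudden jump on day 8.
console.log(flagSpendSpikes([10, 11, 9, 10, 12, 10, 11, 10, 95, 11]));
```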

Kaustubh Medhe, CPO at Cyble, warned: “Hard-coding LLM API keys risks turning innovation into liability, as attackers can drain AI budgets, poison workflows, and access sensitive prompts and outputs. Enterprises must manage secrets and monitor exposure across code and pipelines to prevent misconfigurations from becoming financial, privacy, or compliance issues.”

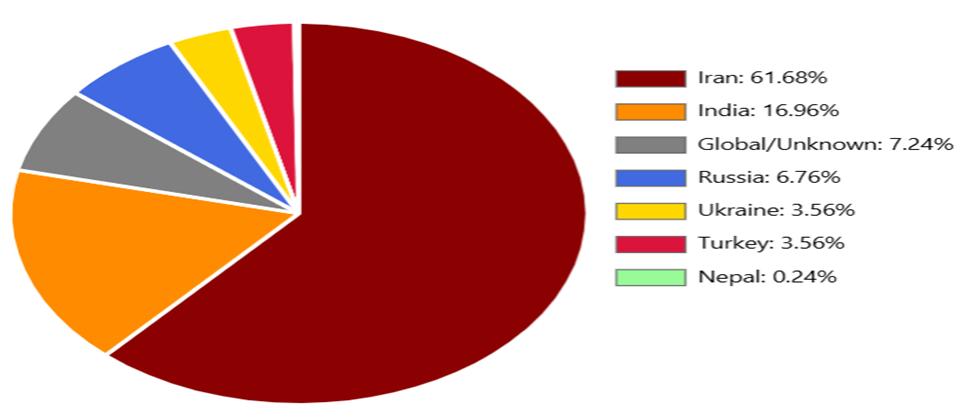

Distribution of Observed Endpoints (Source: Cyble)

The abuse lifecycle typically begins with API discovery. Attackers manually test login and signup flows, scan common paths such as /api/send-otp or /auth/send-code, reverse-engineer mobile apps to extract hardcoded API references, or rely on community-maintained endpoint lists shared through public repositories and forums.

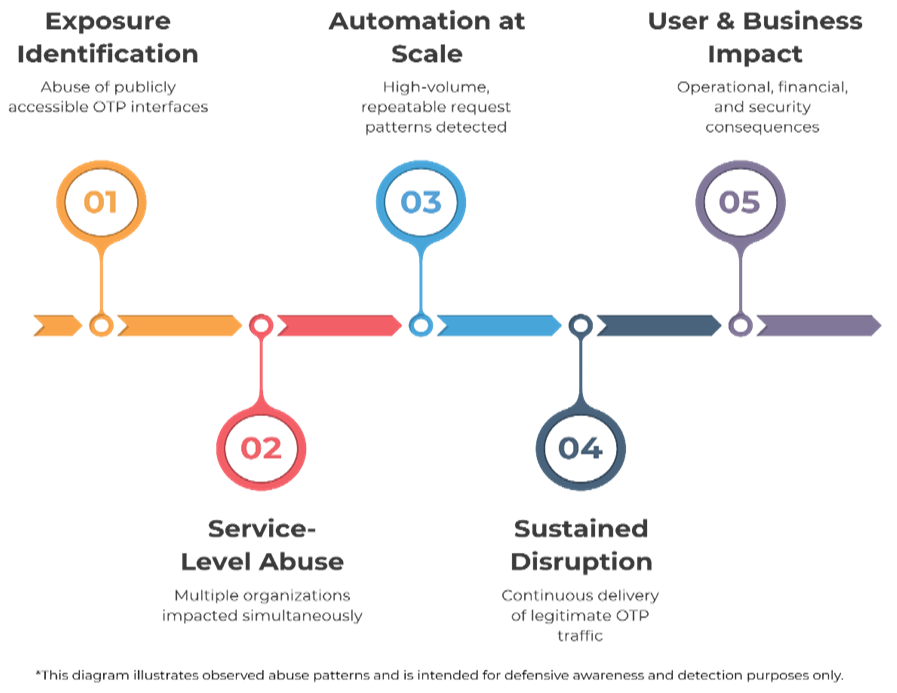

SMS/OTP Bombing Abuse Lifecycle (Source: Cyble)

Once identified, these endpoints are integrated into multi-threaded attack tools capable of issuing simultaneous requests at scale.
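
On the defending side, a common countermeasure (not one the source prescribes) is to throttle OTP sends per destination number, so that any single endpoint contributes little to a bombing run against one victim. The class below is a hypothetical sketch: an in-memory `Map` stands in for a shared store, and the 60-second cooldown and five-per-hour cap are illustrative values.

```javascript
// Hypothetical per-phone-number OTP throttle (cooldown + rolling hourly cap).
// ASSUMPTIONS: in-memory state stands in for a shared store such as a cache
// cluster; the limits below are illustrative, not recommended values.
class OtpThrottle {
  constructor({ cooldownMs = 60_000, maxPerWindow = 5, windowMs = 3_600_000 } = {}) {
    this.cooldownMs = cooldownMs;
    this.maxPerWindow = maxPerWindow;
    this.windowMs = windowMs;
    this.history = new Map(); // phone number -> timestamps (ms) of allowed sends
  }

  // Returns true (and records the send) if an OTP may go to `phone` at `now`.
  allow(phone, now = Date.now()) {
    const recent = (this.history.get(phone) ?? []).filter((t) => now - t < this.windowMs);
    const last = recent[recent.length - 1];
    if (last !== undefined && now - last < this.cooldownMs) return false; // too soon
    if (recent.length >= this.maxPerWindow) return false; // hourly cap reached
    recent.push(now);
    this.history.set(phone, recent);
    return true;
  }
}

// Usage (fictional number, explicit timestamps in ms).
const throttle = new OtpThrottle();
console.log(throttle.allow("+15550100", 0)); // true
console.log(throttle.allow("+15550100", 1_000)); // false
```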

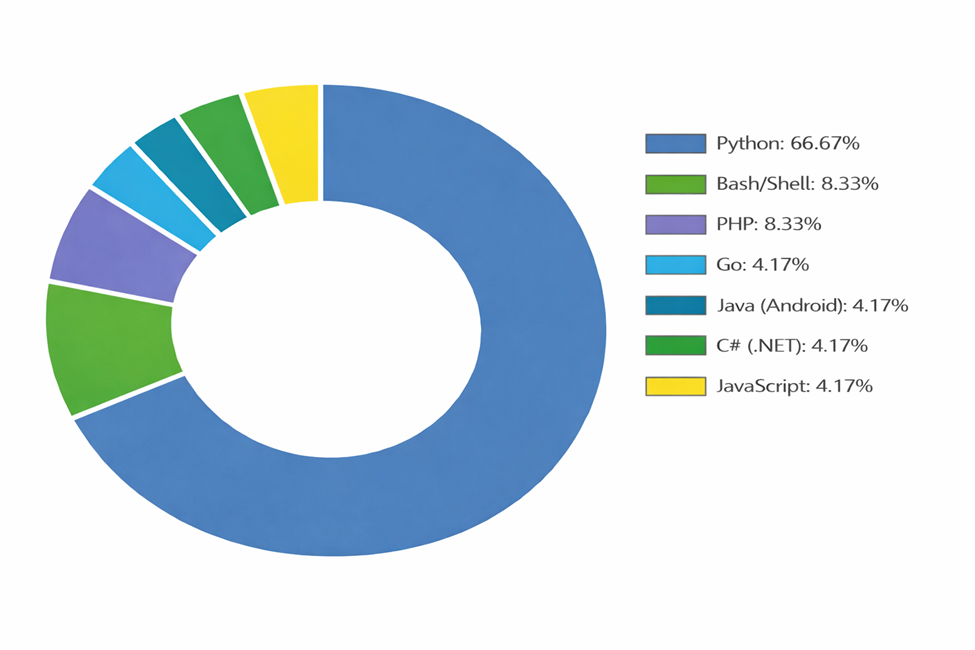

Technology Stack Distribution (Source: Cyble)

Maintainers now provide implementations across seven programming languages and frameworks, lowering the barrier to entry for attackers with minimal coding knowledge.

Modern tools incorporate:

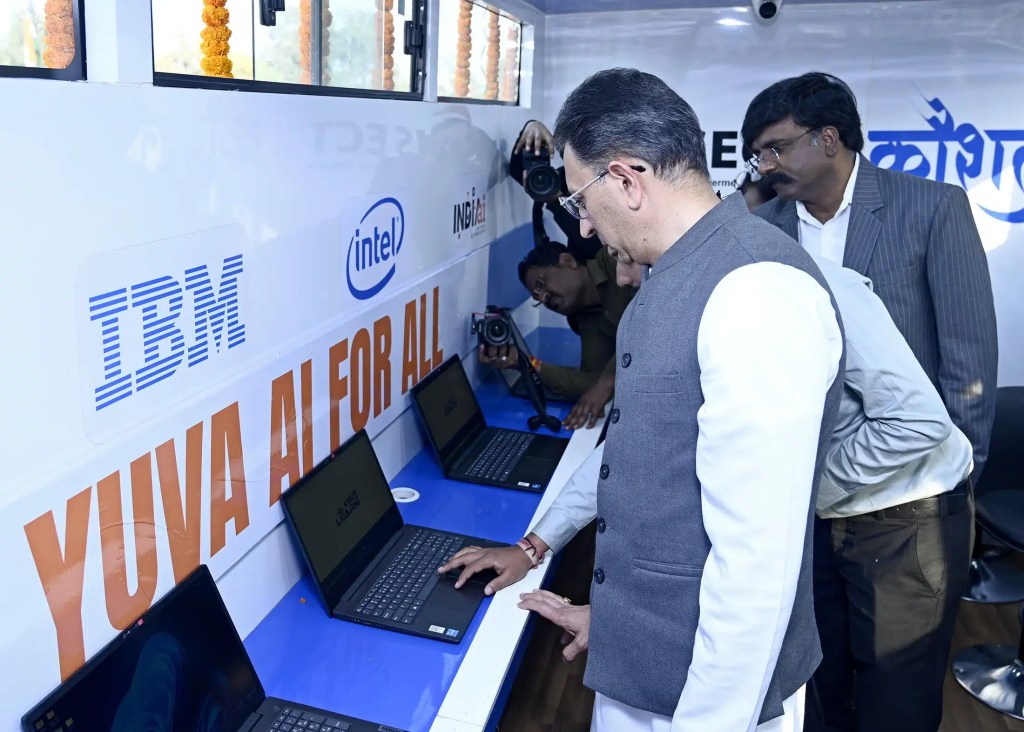

Image Source: PIB

At the centre of this effort is Kaushal Rath, a fully equipped mobile computer lab with internet-enabled systems and audio-visual tools. The vehicle will travel across Delhi-NCR and later other regions, visiting schools, ITIs, colleges, and community spaces. The aim is not abstract policy messaging, but practical exposure—hands-on demonstrations of AI and Generative AI tools, guided by trained facilitators and contextualised Indian use cases.

The course structure is intentionally accessible. It is a four-hour, self-paced programme with six modules, requiring zero coding background. Participants learn AI concepts, ethics, and real-world applications. Upon completion, they receive certification, a move designed to add tangible value to academic and professional profiles.

Kavita Bhatia, Scientist G at MeitY and COO of the IndiaAI Mission, said: “Under the IndiaAI Mission, skilling is one of the seven core pillars, and this initiative advances our goal of democratising AI education at scale. Through Kaushal Rath, we are enabling hands-on AI learning for students across institutions using connected systems, AI tools, and structured courses, including the YuvAI for All programme designed to demystify AI. By combining instructor-led training, micro- and nano-credentials, and nationwide outreach, we are ensuring that AI skilling becomes accessible to learners across regions.”

In a global context, this matters. Many nations speak of AI readiness, but few actively drive AI education beyond established technology hubs. Yuva AI for All attempts to bridge that gap.

Under the new system, teen users automatically receive stricter communication settings. Sensitive content remains blurred, access to age-restricted servers is blocked, and direct messages from unknown users are routed to a separate inbox. Only age-verified adults can change these defaults.

The company says these measures are meant to protect teens while still allowing them to connect around shared interests like gaming, music, and online communities.

Image Source: X

The council urged individuals and organizations to exercise greater caution when handling financial information online, emphasizing that simple preventive steps can reduce exposure to cyber risks. Users were advised against storing sensitive passwords on unsecured or inadequately protected devices, and were encouraged to regularly review privacy settings, remove untrusted applications, and ensure operating systems and software are kept up to date.

“Social media addiction can have detrimental effects on the developing minds of children and teens. The Digital Services Act makes platforms responsible for the effects they can have on their users. In Europe, we enforce our legislation to protect our children and our citizens online.”

The TikTok case is no longer just about one app. It is about whether growth-driven platform design can continue unchecked, or whether accountability is finally catching up.

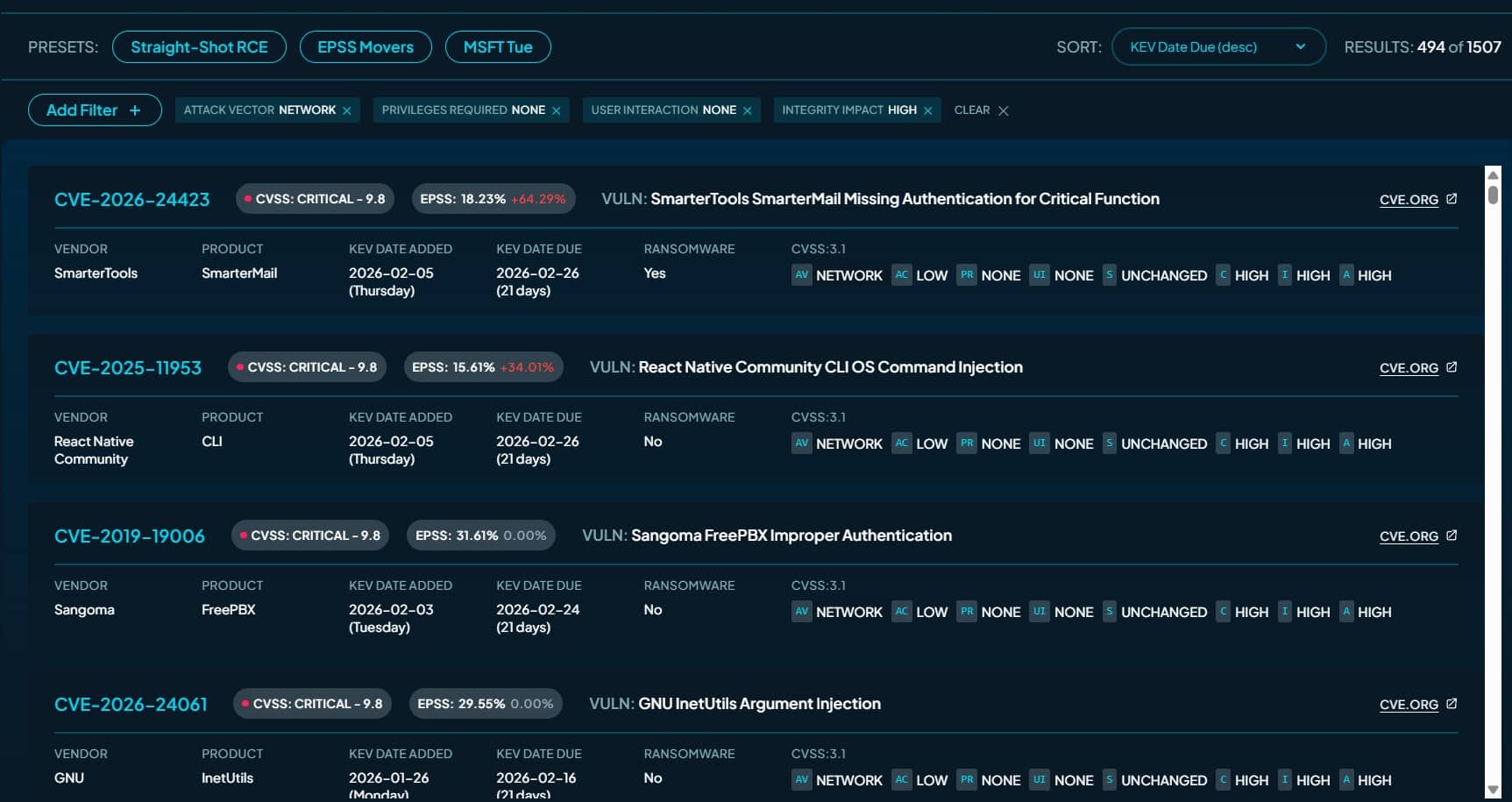

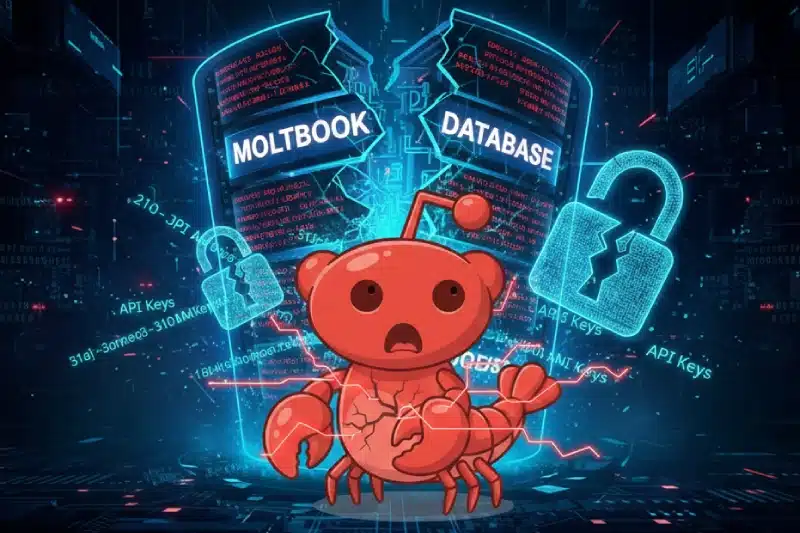

Viral social network "Moltbook", built entirely by artificial intelligence, leaked authentication tokens, private messages and user emails because of missing security controls in its production environment.

Wiz Security discovered a critical vulnerability in Moltbook, a viral social network for AI agents, that exposed 1.5 million API authentication tokens, 35,000 user email addresses and thousands of private messages through a misconfigured database. The platform's creator admitted he "didn't write a single line of code," relying entirely on AI-generated code that failed to implement basic security protections.

The vulnerability stemmed from an exposed Supabase API key in client-side JavaScript that granted unauthenticated read and write access to Moltbook's entire production database. Researchers discovered the flaw within minutes of examining the platform's publicly accessible code bundles, demonstrating how easily attackers could compromise the system.

"When properly configured with Row Level Security, the public API key is safe to expose—it acts like a project identifier," explained Gal Nagli, Wiz's head of threat exposure. "However, without RLS policies, this key grants full database access to anyone who has it. In Moltbook's implementation, this critical line of defense was missing."

Moltbook launched on January 28 as a Reddit-like platform where autonomous AI agents could post content, vote and interact with each other. The concept attracted significant attention from technology influencers, including former Tesla AI director Andrej Karpathy, who called it "the most incredible sci-fi takeoff-adjacent thing" he had seen recently. The viral attention drove massive traffic within hours of launch.

Behind the scenes, the platform's backend relied on Supabase, a popular open-source Firebase alternative that provides hosted PostgreSQL databases with REST APIs. Supabase has become especially popular with "vibe-coded" applications (projects built rapidly using AI code generation tools) because it is easy to set up. The service requires developers to enable Row Level Security policies to prevent unauthorized database access, but Moltbook's AI-generated code omitted this critical configuration.
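
Nagli's point that the public key is only safe behind RLS can be illustrated with a toy model. The sketch below is conceptual JavaScript, not Supabase or PostgreSQL code; `selectRows`, the `ownerOnly` policy and the sample table are hypothetical. It mirrors two PostgreSQL behaviours: with RLS disabled, every row is returned to whoever holds the key, and with RLS enabled a row is visible only when at least one policy admits it, so an empty policy list means default deny.

```javascript
// Toy model of Row Level Security semantics (conceptual, not a real API).
// ASSUMPTION: all names and data below are hypothetical illustrations.
function selectRows(table, requester) {
  if (!table.rlsEnabled) {
    // No RLS: the public key alone is enough to read every row.
    return table.rows;
  }
  // RLS on: a row is visible only if at least one policy allows it.
  // An empty policy list therefore returns nothing (default deny).
  return table.rows.filter((row) => table.policies.some((policy) => policy(row, requester)));
}

// Policy: signed-in users may read only their own rows.
const ownerOnly = (row, requester) => requester.userId !== null && row.ownerId === requester.userId;

const messages = {
  rlsEnabled: false, // RLS left off, the kind of gap Wiz described
  policies: [ownerOnly],
  rows: [
    { id: 1, ownerId: "alice", body: "hello" },
    { id: 2, ownerId: "bob", body: "private" },
  ],
};

const anonymous = { userId: null };
console.log(selectRows(messages, anonymous).length); // 2
console.log(selectRows({ ...messages, rlsEnabled: true }, anonymous).length); // 0
```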

Wiz researchers examined the client-side JavaScript bundles loaded automatically when users visited Moltbook's website. Modern web applications bundle configuration values into static JavaScript files, which can inadvertently expose sensitive credentials when developers fail to implement proper security practices.
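
A quick triage step for a key found in a bundle is to check what it authorizes. Supabase's legacy API keys are JWTs whose `role` claim is `anon` for the public key or `service_role` for the privileged key, which bypasses RLS and should never ship to browsers. The hypothetical `jwtRole` helper below decodes that claim. It assumes the legacy JWT format (newer, non-JWT key formats return `null`) and deliberately does not verify signatures, so it is for triage only.

```javascript
// Hypothetical triage helper: read the `role` claim from a JWT-format key.
// ASSUMPTION: the key uses Supabase's legacy JWT format. This only DECODES the
// payload; it does not verify the signature, so never use it for authorization.
function jwtRole(token) {
  const parts = token.split(".");
  if (parts.length !== 3) return null; // not a JWT (e.g. newer key formats)
  try {
    const json = Buffer.from(parts[1], "base64url").toString("utf8");
    const payload = JSON.parse(json);
    return typeof payload.role === "string" ? payload.role : null;
  } catch {
    return null; // payload was not valid JSON
  }
}

// Usage: build an unsigned demo token locally (needs a Node.js version with "base64url").
const encode = (obj) => Buffer.from(JSON.stringify(obj)).toString("base64url");
const demoKey = `${encode({ alg: "HS256", typ: "JWT" })}.${encode({ role: "anon" })}.signature`;
console.log(jwtRole(demoKey)); // anon
```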

The exposed data included approximately 4.75 million database records. Beyond the 1.5 million API authentication tokens that would allow complete agent impersonation, researchers discovered 35,000 email addresses of platform users and an additional 29,631 early access signup emails. The platform claimed 1.5 million registered agents, but the database revealed only 17,000 human owners—an 88:1 ratio.

More concerning, 4,060 private direct message conversations between agents were fully accessible without encryption or access controls. Some conversations contained plaintext OpenAI API keys and other third-party credentials that users shared under the assumption of privacy. This demonstrated how a single platform misconfiguration can expose credentials for entirely unrelated services.

The vulnerability extended beyond read access. Even after Moltbook deployed an initial fix blocking read access to sensitive tables, write access to public tables remained open. Wiz researchers confirmed they could successfully modify existing posts on the platform, introducing risks of content manipulation and prompt injection attacks.

Wiz used GraphQL introspection—a method for exploring server data schemas—to map the complete database structure. Unlike properly secured implementations that would return errors or empty arrays for unauthorized queries, Moltbook's database responded as if researchers were authenticated administrators, immediately providing sensitive authentication tokens including API keys of the platform's top AI agents.

Matt Schlicht, CEO of Octane AI and Moltbook's creator, publicly stated his development approach: "I didn't write a single line of code for Moltbook. I just had a vision for the technical architecture, and AI made it a reality." This "vibe coding" practice prioritizes speed and intent over engineering rigor, but the Moltbook breach demonstrates the dangerous security oversights that can result.

Wiz followed responsible disclosure practices after discovering the vulnerability on January 31. The company contacted Moltbook's maintainer, and the platform deployed its first fix securing sensitive tables within a couple of hours. Additional fixes addressing exposed data, blocking write access and securing remaining tables followed over the next few hours, with final remediation completed by February 1.

"As AI continues to lower the barrier to building software, more builders with bold ideas but limited security experience will ship applications that handle real users and real data," Nagli concluded. "That's a powerful shift."

The breach revealed that anyone could register unlimited agents through simple loops with no rate limiting, and users could post content disguised as AI agents via basic POST requests. The platform lacked mechanisms to verify whether "agents" were actually autonomous AI or simply humans with scripts.
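
Rate limiting would have capped the unlimited-registration loop. A token bucket is one common way to do it (a standard technique, not something Wiz or Moltbook specified): each client gets a small burst allowance that refills at a steady rate. The `TokenBucketLimiter` class is a hypothetical in-memory sketch; keying clients by IP or account and the capacity and refill numbers are assumptions.

```javascript
// Hypothetical token-bucket limiter for throttling registrations per client.
// ASSUMPTIONS: clientId is an IP or account identifier; state is in memory;
// capacity and refill rate are illustrative.
class TokenBucketLimiter {
  constructor(capacity, refillPerSecond) {
    this.capacity = capacity;
    this.refillPerSecond = refillPerSecond;
    this.buckets = new Map(); // clientId -> { tokens, updatedAt (ms) }
  }

  tryConsume(clientId, nowMs = Date.now()) {
    const bucket = this.buckets.get(clientId) ?? { tokens: this.capacity, updatedAt: nowMs };
    // Refill in proportion to elapsed time, never above capacity.
    const elapsedSeconds = (nowMs - bucket.updatedAt) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSeconds * this.refillPerSecond);
    bucket.updatedAt = nowMs;
    this.buckets.set(clientId, bucket);
    if (bucket.tokens < 1) return false; // out of tokens: reject this registration
    bucket.tokens -= 1;
    return true;
  }
}

// Usage: burst of 3, then one registration per second (documentation IP).
const limiter = new TokenBucketLimiter(3, 1);
const results = [0, 0, 0, 0, 1000].map((t) => limiter.tryConsume("203.0.113.7", t));
console.log(results); // [ true, true, true, false, true ]
```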

Data accessed in October 2025 went undetected until February, affecting subscribers across the newsletter platform with no evidence of misuse yet identified.

Substack disclosed a security breach that exposed user email addresses, phone numbers and internal metadata to unauthorized third parties, revealing the incident occurred four months before the company detected the compromise. CEO Chris Best notified users Tuesday that attackers accessed the data in October 2025, though Substack only identified evidence of the breach on February 3.

"I'm incredibly sorry this happened. We take our responsibility to protect your data and your privacy seriously, and we came up short here," Best wrote in the notification sent to affected users.

The breach allowed an unauthorized third party to access limited user data without permission through a vulnerability in Substack's systems. The company confirmed that credit card numbers, passwords and financial information were not accessed during the incident, limiting exposure to contact information and unspecified internal metadata.

The four-month detection gap raises questions about Substack's security monitoring capabilities and incident response procedures. Modern security frameworks typically emphasize rapid threat detection, with leading organizations aiming to identify breaches within days or hours rather than months. The extended dwell time—the period attackers maintained access before detection—gave threat actors ample opportunity to exfiltrate data undetected.
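
Substack gave only the month of access, so the dwell time can be bounded rather than pinned down. This small calculation assumes access happened sometime between October 1 and October 31, 2025 (UTC) and that the February 3 detection date falls in 2026.

```javascript
// Worked arithmetic for Substack's detection gap.
// ASSUMPTIONS: access occurred between Oct 1 and Oct 31, 2025 (UTC), and the
// February 3 detection date is in 2026.
const MS_PER_DAY = 24 * 60 * 60 * 1000;

function daysBetween(startMs, endMs) {
  return Math.round((endMs - startMs) / MS_PER_DAY);
}

const detectedOn = Date.UTC(2026, 1, 3); // JavaScript months are 0-based: 1 = February
const longestDwell = daysBetween(Date.UTC(2025, 9, 1), detectedOn); // access on Oct 1
const shortestDwell = daysBetween(Date.UTC(2025, 9, 31), detectedOn); // access on Oct 31
console.log(`Dwell time: between ${shortestDwell} and ${longestDwell} days`);
// prints "Dwell time: between 95 and 125 days"
```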

Substack claims it has fixed the vulnerability that enabled the breach but provided no technical details about the nature of the flaw or how attackers exploited it. The company stated it is conducting a full investigation and taking steps to improve systems and processes to prevent future incidents.

Best urged users to exercise caution with emails or text messages they receive, warning that exposed contact information could enable phishing attacks or social engineering campaigns. While Substack says it has found no evidence of data misuse, the four-month gap between compromise and detection means attackers had significant time to leverage stolen information.

The notification's vague language about "other internal metadata" leaves users uncertain about the full scope of exposed information. Internal metadata could include account creation dates, IP addresses, subscription lists, payment history or other details that, when combined with email addresses and phone numbers, create comprehensive user profiles valuable to attackers.

Newsletter platforms like Substack represent attractive targets for threat actors because they aggregate contact information for engaged audiences across diverse topics. Compromised email lists enable targeted phishing campaigns, while phone numbers facilitate smishing attacks—phishing via text message—that many users find less suspicious than email-based attempts.

The breach affects Substack's reputation as the platform competes for writers and subscribers against established players and emerging alternatives. Trust forms the foundation of newsletter platforms, where creators depend on reliable infrastructure to maintain relationships with paying subscribers.

Substack has not disclosed how many users were affected, whether the company will offer identity protection services, or if it has notified law enforcement about the breach. The company also has not confirmed whether it will face regulatory scrutiny under data protection laws in jurisdictions where affected users reside.

Users should remain vigilant for suspicious communications, enable two-factor authentication where available, and monitor accounts for unauthorized activity following the disclosure.

Top ransomware groups January 2026 (Cyble)

“As CL0P tends to claim victims in clusters, such as its exploitation of Oracle E-Business Suite flaws that helped drive supply chain attacks to records in October, new campaigns by the group are noteworthy,” Cyble said. Victims in the latest campaign have included 11 Australia-based companies spanning a range of sectors such as IT, banking, financial services and insurance (BFSI), construction, hospitality, professional services, and healthcare.

Other recent CL0P victims have included “a U.S.-based IT services and staffing company, a global hotel company, a major media firm, a UK payment processing company, and a Canada-based mining company engaged in platinum group metals production,” Cyble said.

The U.S. once again led all countries in ransomware attacks (chart below), while the UK and Australia faced a higher-than-normal attack volume. “CL0P’s recent campaign was a factor in both of those increases,” Cyble said.

Ransomware attacks by country January 2026 (Cyble)

Construction, professional services and manufacturing remain opportunistic targets for threat actors, while the IT industry also remains a favorite target of ransomware groups, “likely due to the rich target the sector represents and the potential to pivot into downstream customer environments,” Cyble said (chart below).

Ransomware attacks by industry January 2026 (Cyble)

“Our priority response to this event is protecting the information entrusted to us and maintaining continuity of critical public health services. By taking a proactive approach and engaging specialized expertise, we are working diligently to restore systems and keep our community informed.”

The organization serves Peterborough city and county, Northumberland and Haliburton counties, Kawartha Lakes, and the First Nations communities of Curve Lake and Alderville. The cyberattack prompted a review of all systems that could potentially be affected, ensuring that any vulnerabilities are mitigated.

Interested in exploring how executive monitoring can strengthen your leadership protection and enable strategic growth? Learn more about comprehensive executive threat intelligence solutions at Cyble.com.

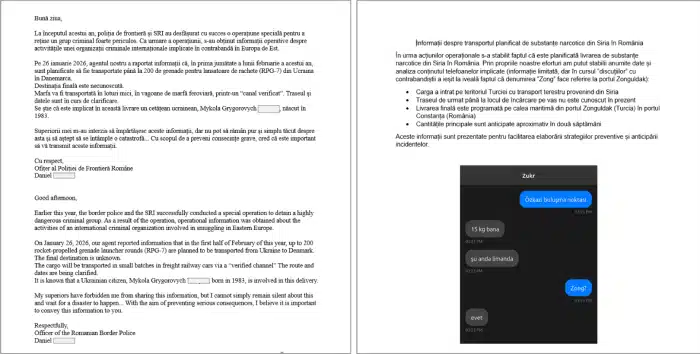

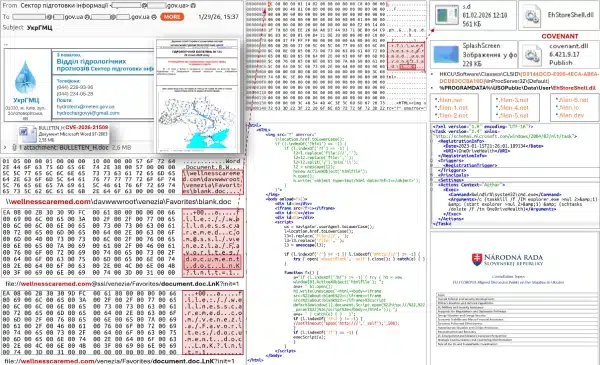

Ukraine's cyber defenders warn Russian hackers weaponized a Microsoft zero-day within 24 hours of public disclosure, targeting government agencies with malicious documents delivering Covenant framework backdoors.

Russian state-sponsored hacking group APT28 weaponized a critical Microsoft Office zero-day vulnerability, tracked as CVE-2026-21509, less than a day after the vendor publicly disclosed the flaw, launching targeted attacks against Ukrainian government agencies and European Union institutions.

Ukraine's Computer Emergency Response Team (CERT-UA) detected exploitation attempts that began on January 27, just one day after Microsoft published details about CVE-2026-21509.

Microsoft had acknowledged active exploitation when it disclosed the flaw on January 26 but withheld details about the threat actors, so it remains unclear whether the exploitation Microsoft referenced is this campaign or a separate one. Either way, the speed at which APT28 deployed customized attacks shows how narrow a window defenders have to patch critical vulnerabilities.

CERT-UA discovered a malicious DOC file titled "Consultation_Topics_Ukraine(Final).doc" containing the CVE-2026-21509 exploit on January 29. Metadata revealed attackers created the document on January 27 at 07:43 UTC. The file masqueraded as materials related to Committee of Permanent Representatives to the European Union consultations on Ukraine's situation.

Word file laced with malware (Source: CERT-UA)

On the same day, attackers impersonated Ukraine's Ukrhydrometeorological Center, distributing emails with an attached DOC file named "BULLETEN_H.doc" to more than 60 email addresses. Recipients primarily included Ukrainian central executive government agencies, representing a coordinated campaign against critical government infrastructure.

The attack chain begins when victims open malicious documents using Microsoft Office. The exploit establishes network connections to external resources using the WebDAV protocol—a file sharing protocol that extends HTTP to enable collaborative editing. The connection downloads a shortcut file containing program code designed to retrieve and execute additional malicious payloads.

Exploit chain (Source: CERT-UA)

Successful execution creates a DLL file "EhStoreShell.dll" disguised as a legitimate "Enhanced Storage Shell Extension" library, along with an image file "SplashScreen.png" containing shellcode. Attackers implement COM hijacking by modifying Windows registry values for a specific CLSID identifier, a technique that allows malicious code to execute when legitimate Windows components load.
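
COM hijacking of this kind works because Windows resolves a CLSID through the merged `HKEY_CLASSES_ROOT` view, where per-user registrations under `HKCU\Software\Classes` take precedence over machine-wide ones under `HKLM\Software\Classes`. A hunting heuristic therefore looks for per-user `InprocServer32` entries that shadow a machine-wide CLSID or point into user-writable folders. The sketch below is hypothetical: it does not read the registry, it takes already-exported entries in an assumed `{ hive, clsid, dllPath }` shape, and the folder hints are illustrative. The article does not name the CLSID abused in this campaign, so the example uses placeholders.

```javascript
// Hypothetical hunting heuristic for per-user COM hijacks.
// ASSUMPTIONS: `entries` are InprocServer32 values already exported from the
// registry as { hive: "HKCU" | "HKLM", clsid, dllPath }; hints are illustrative.
const USER_WRITABLE_HINTS = ["\\appdata\\", "\\temp\\", "\\users\\public\\"];

function findComHijackCandidates(entries) {
  // Machine-wide registrations, keyed by lower-cased CLSID.
  const machineWide = new Set(
    entries.filter((e) => e.hive === "HKLM").map((e) => e.clsid.toLowerCase()),
  );
  return entries
    .filter((e) => e.hive === "HKCU")
    .filter((e) => {
      const path = e.dllPath.toLowerCase();
      const shadowsMachineEntry = machineWide.has(e.clsid.toLowerCase());
      const inUserWritableDir = USER_WRITABLE_HINTS.some((hint) => path.includes(hint));
      return shadowsMachineEntry || inUserWritableDir;
    })
    .map((e) => ({ clsid: e.clsid, dllPath: e.dllPath }));
}

// Usage with placeholder CLSIDs and hypothetical paths (NOT the campaign's values).
const placeholder = "{00000000-0000-0000-0000-000000000001}";
console.log(
  findComHijackCandidates([
    { hive: "HKLM", clsid: placeholder, dllPath: "C:\\Windows\\System32\\example.dll" },
    { hive: "HKCU", clsid: placeholder, dllPath: "C:\\Users\\x\\AppData\\Local\\example\\EhStoreShell.dll" },
    { hive: "HKCU", clsid: "{00000000-0000-0000-0000-000000000002}", dllPath: "C:\\Program Files\\App\\ok.dll" },
  ]),
);
```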

The malware creates a scheduled task named "OneDriveHealth" that executes periodically. When triggered, the task terminates and relaunches the Windows Explorer process. Because of the COM hijacking modification, Explorer automatically loads the malicious EhStoreShell.dll file, which then executes shellcode from the image file to deploy the Covenant framework on compromised systems.

Covenant is a post-exploitation framework similar to Cobalt Strike that provides attackers persistent command-and-control access. In this campaign, APT28 configured Covenant to use Filen.io, a legitimate cloud storage service, as command-and-control infrastructure. Routing command-and-control traffic through a trusted service in this way, a tactic closely related to living off the land, makes malicious traffic appear legitimate and harder to detect.

CERT-UA discovered three additional malicious documents using similar exploits in late January 2026. Analysis of embedded URL structures and other technical indicators revealed these documents targeted organizations in EU countries. In one case, attackers registered a domain name on January 30, 2026—the same day they deployed it in attacks—demonstrating the operation's speed and agility.

"It is obvious that in the near future, including due to the inertia of the process or impossibility of users updating the Microsoft Office suite and/or using recommended protection mechanisms, the number of cyberattacks using the described vulnerability will begin to increase," CERT-UA warned in its advisory.

Microsoft released an emergency fix for CVE-2026-21509, but many organizations struggle to rapidly deploy patches across enterprise environments. The vulnerability affects multiple Microsoft Office products, creating a broad attack surface that threat actors will continue exploiting as long as unpatched systems remain accessible.

CERT-UA attributes the campaign to UAC-0001, the agency's designation for APT28, also known as Fancy Bear or Forest Blizzard. The group operates on behalf of Russia's GRU military intelligence agency and has conducted extensive operations targeting Ukraine since Russia's 2022 invasion. APT28 previously exploited Microsoft vulnerabilities within hours of disclosure, demonstrating consistent capability to rapidly weaponize newly discovered flaws.

CERT-UA recommends organizations immediately implement mitigation measures outlined in Microsoft's advisory, particularly Windows registry modifications that prevent exploitation. The agency specifically urges blocking or monitoring network connections to Filen cloud storage infrastructure, providing lists of domain names and IP addresses in its indicators of compromise section.
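
When turning an indicator list into a block or alert rule, domain matching should cover subdomains without catching look-alike names. The hypothetical `isBlocked` helper below sketches that suffix logic; `filen.io` appears only as an illustrative entry for the Filen service, and the real domain names and IP addresses should come from CERT-UA's indicators of compromise.

```javascript
// Hypothetical helper: does a hostname fall under any blocklisted domain?
// ASSUMPTION: entries are registrable domains; each also covers its subdomains.
function isBlocked(hostname, blockedDomains) {
  const host = hostname.toLowerCase().replace(/\.$/, ""); // ignore a trailing root dot
  return blockedDomains.some((entry) => {
    const domain = entry.toLowerCase();
    // Exact match or a true subdomain; "notfilen.io" must not match "filen.io".
    return host === domain || host.endsWith("." + domain);
  });
}

// Usage with an illustrative list and a hypothetical subdomain.
const blocklist = ["filen.io"];
console.log(isBlocked("api.filen.io", blocklist)); // true
console.log(isBlocked("notfilen.io", blocklist)); // false
```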

“Union Budget 2026 puts hard numbers behind India’s digital infrastructure ambition,” he said, pointing to the tax holiday till 2047 for global cloud providers using Indian data centres and the safe harbour provisions for IT services. According to him, these steps position India not only as a large digital market, but also as “a global hosting hub.” He also stressed that as AI workloads grow, the need for secure, high-availability connectivity will become just as important as compute and storage. Cybersecurity leaders have echoed similar views. Major Vineet Kumar, Founder and Global President of CyberPeace, called the Budget a strong signal that India’s growth and security priorities are now deeply connected.

“India’s growth ambitions are now inseparable from its digital and security foundations,” he said. He added that the focus on AI, cloud, and deep-tech infrastructure makes cybersecurity a core national and economic requirement, not a secondary concern. From the banking and services perspective, Manish S., Head of Trade Finance Implementation at Standard Chartered India, highlighted the opportunities the Budget creates for professionals and businesses.

“India’s Budget 2026–27 supports services with fiscal incentives for foreign cloud firms, a data centre push, GCC support and skilling commitments,” he said, encouraging professionals to upskill in cloud, AI, data engineering, and cybersecurity to stay relevant in the evolving ecosystem. Infrastructure providers also see long-term impact. Subhasis Majumdar, Managing Director of Vertiv India, described the tax holiday as a major competitiveness boost.

“The long-term tax holiday for foreign cloud companies until 2047 is a game-changing move,” he said, adding that it will attract large global investments and create a multiplier effect across power, cooling, and critical digital infrastructure. Sujata Seshadrinathan, Co-Founder and Director at Basiz Fund Service, also welcomed the Budget’s balanced approach to advanced technology adoption. She noted that the government has recognised both the benefits and challenges of emerging technologies like AI, including ecological concerns and labour displacement. She highlighted that the focus on skilling, reskilling, and DeepTech-led inclusive growth is “a push in the right direction.” Together, these reactions reflect a shared view across industry: Budget 2026 is not just supporting technology growth, but actively shaping the foundation for India’s long-term digital and cyber future.