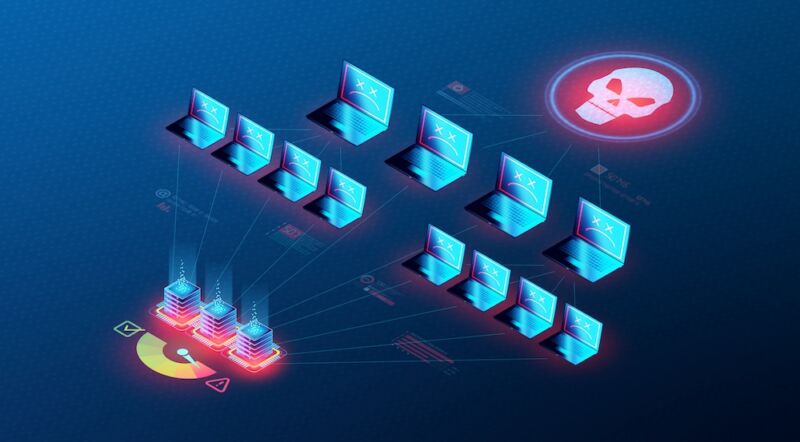

High-severity vulnerabilities affect a wide range of Asus router models

Credit: Getty Images

Hardware manufacturer Asus has released updates patching multiple critical vulnerabilities that allow hackers to remotely take control of a range of router models with no authentication or interaction required of end users.

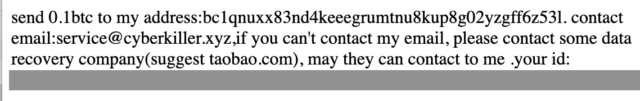

The most critical vulnerability, tracked as CVE-2024-3080, is an authentication bypass flaw that can allow remote attackers to log into a device without authentication. The vulnerability, according to the Taiwan Computer Emergency Response Team / Coordination Center (TWCERT/CC), carries a severity rating of 9.8 out of 10. Asus said the vulnerability affects the following routers:

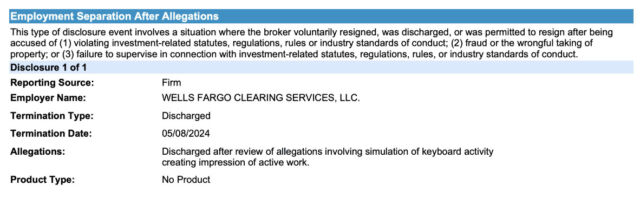

A favorite haven for hackers

A second vulnerability, tracked as CVE-2024-3079, affects the same router models. It stems from a buffer overflow flaw and allows remote hackers who have already obtained administrative access to an affected router to execute commands.