The field of Artificial Intelligence is rapidly evolving, and OpenAI's ChatGPT is a leader in this revolution. This groundbreaking large language model (LLM) redefined the expectations for AI.

Just 18 months after its initial launch, OpenAI has released a major update: GPT-4o. This update widens the gap between OpenAI and its competitors, especially the likes of Google.

OpenAI unveiled GPT-4o, with the "o" signifying "omni," during a live stream earlier this week. This latest iteration boasts significant advancements across various aspects. Here's a breakdown of the key features and capabilities of OpenAI's GPT-4o.

Features of GPT-4o

Enhanced Speed and Multimodality: GPT-4o operates at a faster pace than its predecessors and excels at understanding and processing diverse information formats – written text, audio, and visuals. This versatility allows GPT-4o to engage in more comprehensive and natural interactions.

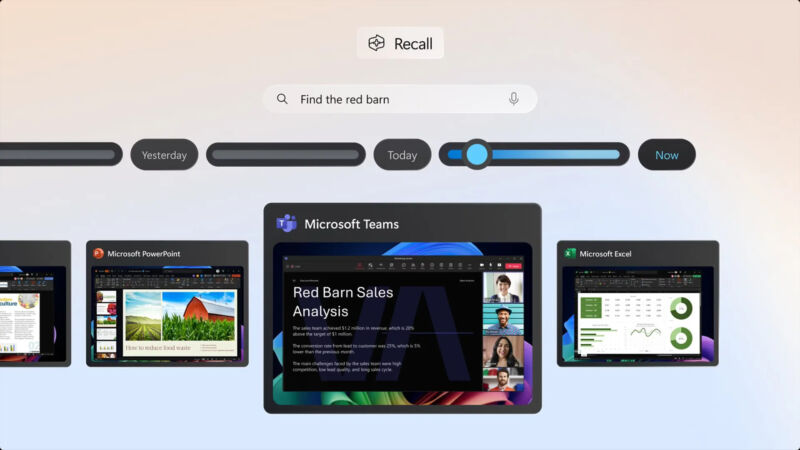

Free Tier Expansion: OpenAI is making AI more accessible by offering some GPT-4o features to free-tier users. This includes the ability to access web-based information during conversations, discuss images, upload files, and even use enterprise-grade data analysis tools (with limitations). Paid users will continue to enjoy a wider range of functionalities.

Improved User Experience: The blog post accompanying the announcement showcases some impressive capabilities. GPT-4o can now generate convincingly realistic laughter, potentially pushing the boundaries of the uncanny valley and increasing user adoption. Additionally, it excels at interpreting visual input, allowing it to recognize sports on television and explain the rules – a valuable feature for many users.

However, despite the new features and capabilities, the potential for misuse of ChatGPT remains a concern. The new version, though deemed safer than previous versions, is still vulnerable to exploitation and could be leveraged by hackers and ransomware groups for nefarious purposes.

Addressing security concerns about the new version, OpenAI shared a detailed post about the safety measures being implemented in GPT-4o.

Security Concerns Surround ChatGPT 4o

The implications of ChatGPT for cybersecurity have been a hot topic of discussion among security leaders and experts, as many worry that the AI software can easily be misused.

Since ChatGPT's launch in November 2022, several organizations, including Amazon, JPMorgan Chase & Co., Bank of America, Citigroup, Deutsche Bank, Goldman Sachs, Wells Fargo and Verizon, have restricted or blocked use of the program, citing security concerns.

In late March 2023, Italy became the first Western country to temporarily ban ChatGPT, after its data protection authority accused OpenAI of unlawfully collecting user data. These concerns are not unfounded.

OpenAI Assures Safety

OpenAI reassured users that GPT-4o has "new safety systems to provide guardrails on voice outputs," along with extensive post-training and filtering of the training data to prevent ChatGPT from saying anything inappropriate or unsafe. GPT-4o was built in accordance with OpenAI's internal Preparedness Framework and voluntary commitments. More than 70 external experts red-teamed GPT-4o before its release.

In an article published on its official website, OpenAI states that GPT-4o does not score above "Medium" risk in its cybersecurity evaluations.

“GPT-4o has safety built-in by design across modalities, through techniques such as filtering training data and refining the model’s behavior through post-training. We have also created new safety systems to provide guardrails on voice outputs. Our evaluations of cybersecurity, CBRN, persuasion, and model autonomy show that GPT-4o does not score above Medium risk in any of these categories,” the post said.

“This assessment involved running a suite of automated and human evaluations throughout the model training process. We tested both pre-safety-mitigation and post-safety-mitigation versions of the model, using custom fine-tuning and prompts, to better elicit model capabilities,” it added.

OpenAI shared that it also worked with more than 70 external experts to identify risks introduced or amplified by the new modalities.

“GPT-4o has also undergone extensive external red teaming with 70+ external experts in domains such as social psychology, bias and fairness, and misinformation to identify risks that are introduced or amplified by the newly added modalities. We used these learnings to build out our safety interventions in order to improve the safety of interacting with GPT-4o. We will continue to mitigate new risks as they’re discovered,” it said.

Media Disclaimer: This report is based on internal and external research obtained through various means. The information provided is for reference purposes only, and users bear full responsibility for their reliance on it. The Cyber Express assumes no liability for the accuracy or consequences of using this information.