60,000 Records Exposed in Cyberattack on Uzbekistan Government

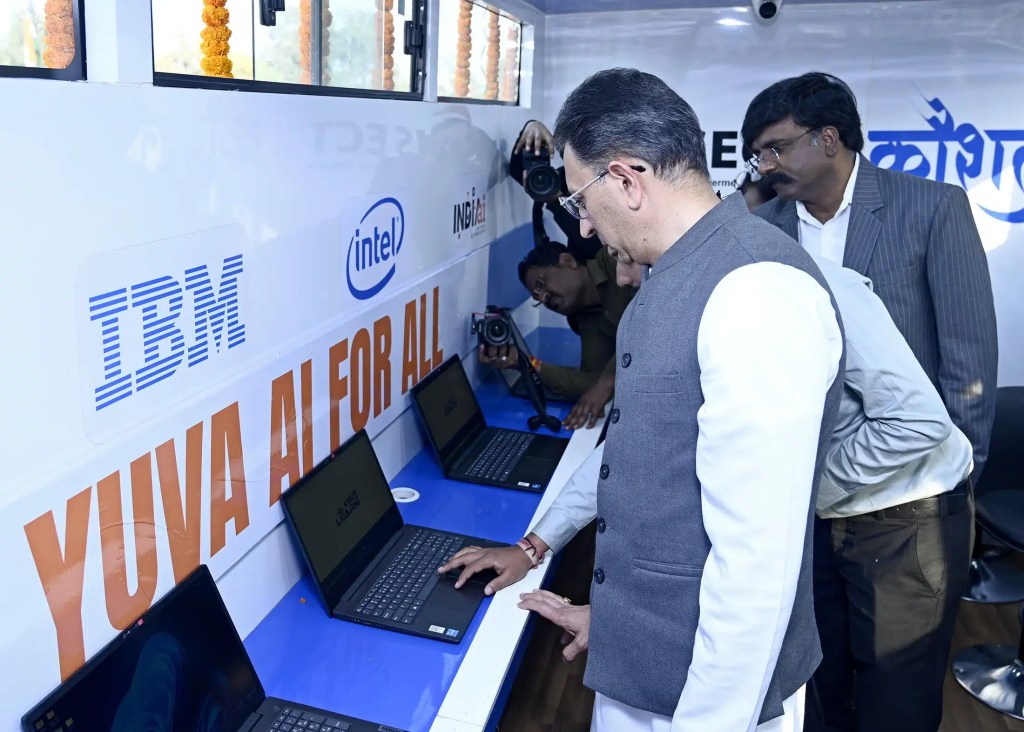

Image Source: PIB

At the centre of this effort is Kaushal Rath, a fully equipped mobile computer lab with internet-enabled systems and audio-visual tools. The vehicle will travel across Delhi-NCR and later other regions, visiting schools, ITIs, colleges, and community spaces. The aim is not abstract policy messaging, but practical exposure—hands-on demonstrations of AI and Generative AI tools, guided by trained facilitators and contextualised Indian use cases.

The course structure is intentionally accessible. It is a four-hour, self-paced programme with six modules, requiring zero coding background. Participants learn AI concepts, ethics, and real-world applications. Upon completion, they receive certification, a move designed to add tangible value to academic and professional profiles.

Kavita Bhatia, Scientist G, MeitY and COO of IndiaAI Mission highlighted, “Under the IndiaAI Mission, skilling is one of the seven core pillars, and this initiative advances our goal of democratising AI education at scale. Through Kaushal Rath, we are enabling hands-on AI learning for students across institutions using connected systems, AI tools, and structured courses, including the YuvAI for All programme designed to demystify AI. By combining instructor-led training, micro- and nano-credentials, and nationwide outreach, we are ensuring that AI skilling becomes accessible to learners across regions.”

In a global context, this matters. Many nations speak of AI readiness, but few actively drive AI education beyond established technology hubs. Yuva AI for All attempts to bridge that gap.

Under the new system, teen users automatically receive stricter communication settings. Sensitive content remains blurred, access to age-restricted servers is blocked, and direct messages from unknown users are routed to a separate inbox. Only age-verified adults can change these defaults.

The company says these measures are meant to protect teens while still allowing them to connect around shared interests like gaming, music, and online communities.

Image Source: X

“Social media addiction can have detrimental effects on the developing minds of children and teens. The Digital Services Act makes platforms responsible for the effects they can have on their users. In Europe, we enforce our legislation to protect our children and our citizens online.”

The TikTok case is no longer just about one app. It is about whether growth-driven platform design can continue unchecked, or whether accountability is finally catching up.

“Union Budget 2026 puts hard numbers behind India’s digital infrastructure ambition,” he said, pointing to the tax holiday till 2047 for global cloud providers using Indian data centres and the safe harbour provisions for IT services. According to him, these steps position India not only as a large digital market, but also as “a global hosting hub.” He also stressed that as AI workloads grow, the need for secure, high-availability connectivity will become just as important as compute and storage.

Cybersecurity leaders have echoed similar views. Major Vineet Kumar, Founder and Global President of CyberPeace, called the Budget a strong signal that India’s growth and security priorities are now deeply connected.

“India’s growth ambitions are now inseparable from its digital and security foundations,” he said. He added that the focus on AI, cloud, and deep-tech infrastructure makes cybersecurity a core national and economic requirement, not a secondary concern.

From the banking and services perspective, Manish S., Head of Trade Finance Implementation at Standard Chartered India, highlighted the opportunities the Budget creates for professionals and businesses.

“India’s Budget 2026–27 supports services with fiscal incentives for foreign cloud firms, a data centre push, GCC support and skilling commitments,” he said, encouraging professionals to upskill in cloud, AI, data engineering, and cybersecurity to stay relevant in the evolving ecosystem.

Infrastructure providers also see long-term impact. Subhasis Majumdar, Managing Director of Vertiv India, described the tax holiday as a major competitiveness boost.

“The long-term tax holiday for foreign cloud companies until 2047 is a game-changing move,” he said, adding that it will attract large global investments and create a multiplier effect across power, cooling, and critical digital infrastructure.

Sujata Seshadrinathan, Co-Founder and Director at Basiz Fund Service, also welcomed the Budget’s balanced approach to advanced technology adoption. She noted that the government has recognised both the benefits and challenges of emerging technologies like AI, including ecological concerns and labour displacement. She highlighted that the focus on skilling, reskilling, and DeepTech-led inclusive growth is “a push in the right direction.”

Together, these reactions reflect a shared view across industry: Budget 2026 is not just supporting technology growth, but actively shaping the foundation for India’s long-term digital and cyber future.

Ad fraud isn’t just a marketing problem anymore — it’s a full-scale threat to the trust that powers the digital economy. As Data Privacy Week 2026 puts a global spotlight on protecting personal information and ensuring accountability online, the growing fraud crisis in digital advertising feels more urgent than ever.

In 2024 alone, fraud in mobile advertising jumped 21%, while programmatic ad fraud drained nearly $50 billion from the industry. During Data Privacy Week 2026, these numbers serve as a reminder that ad fraud is not only about wasted budgets; it is also about how consumer data moves, gets tracked, and is sometimes misused across complex ecosystems.

This urgency is reflected in the rapid growth of the ad fraud detection tools market, expected to rise from $410.7 million in 2024 to more than $2 billion by 2034. And in the context of Data Privacy Week 2026, the conversation is shifting beyond fraud prevention to a bigger question: if ads are being manipulated and user data is being shared without clear oversight, who is truly in control?

To unpack these challenges, The Cyber Express team spoke during Data Privacy Week 2026 with Dhiraj Gupta, CTO & Co-founder of mFilterIt, a technology leader at the forefront of helping brands win the battle against ad fraud and restore integrity across the advertising ecosystem. With a background in telecom and a passion for building AI-driven solutions, Gupta argues that brands can no longer rely on surface-level compliance or platform-reported metrics. As he puts it, “Independent verification and data-flow audits are critical because they validate what actually happens in a campaign, not just what media plans, platforms, or dashboards report.”

Read the excerpt from the Data Privacy Week 2026 interview below to understand why real-time audits, stronger privacy controls, and continuous accountability are quickly becoming non-negotiable in the fight against fraud and in rebuilding consumer trust in digital advertising.

In a Substack post on Tuesday, Starmer said that for many children, social media has become “a world of endless scrolling, anxiety and comparison.” “Being a child should not be about constant judgement from strangers or the pressure to perform for likes,” he wrote.

Alongside the possible ban, the government has launched a formal consultation on children’s use of technology. The review will examine whether a social media ban for children would be effective and, if introduced, how it could be enforced. Ministers will also look at improving age assurance technology and limiting design features such as “infinite scrolling” and “streaks,” which officials say encourage compulsive use.

The consultation will be backed by a nationwide conversation with parents, young people, and civil society groups. The government said it would respond to the consultation in the summer.

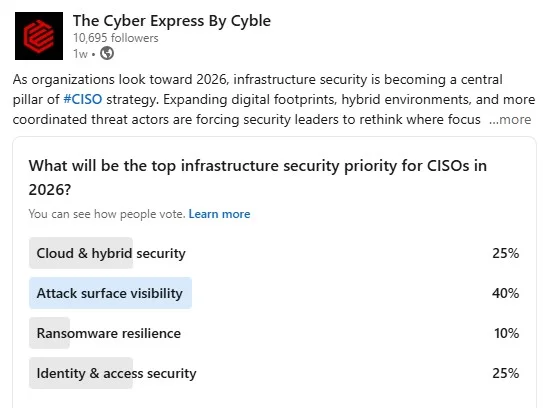

With 40% of respondents selecting attack surface visibility, it emerged as the top infrastructure security priority for CISOs heading into 2026. The result reflects a growing recognition that organizations cannot secure what they cannot see — particularly as assets are spread across cloud platforms, SaaS tools, APIs, endpoints, development environments, and third-party services.

Cloud and hybrid security tied with identity and access security for second place, each receiving 25% of the vote. Ransomware resilience, while still a major operational concern, ranked lower at 10%, suggesting that many security leaders are shifting focus toward foundational controls that reduce exposure before attacks occur.

“Currently, there is no evidence indicating that APD systems have been compromised or that any APD data has been acquired by the threat actor. However, as a precautionary measure, the department is actively monitoring the systems and implementing protective measures to safeguard information.”

Anchorage, Alaska’s largest city, is home to approximately 300,000 residents, making the protection of public safety data a critical priority for municipal authorities.

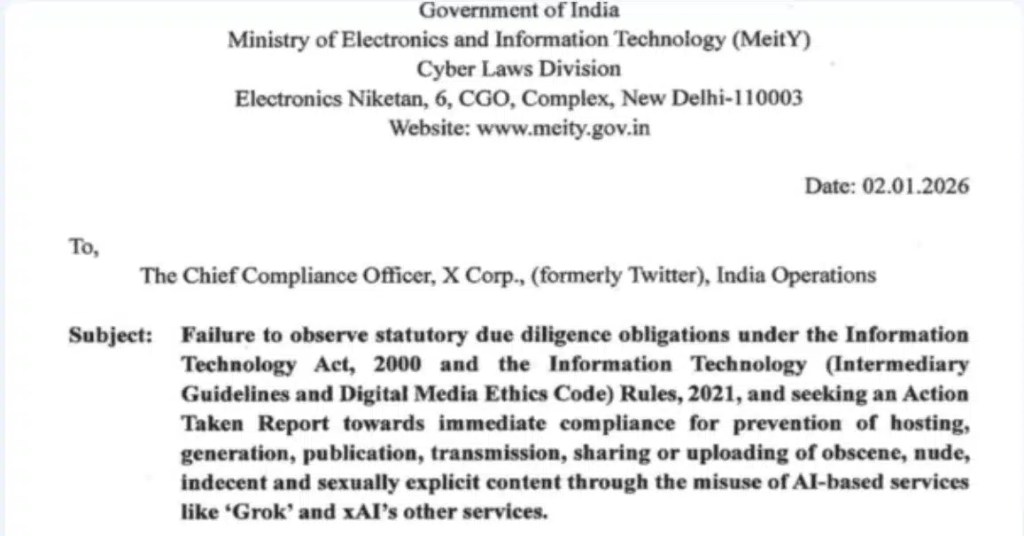

Source: India's Ministry of Electronics and Information Technology

Malaysia’s Communications and Multimedia Commission said it had received public complaints about “indecent, grossly offensive” content on X and confirmed it was investigating the matter. The regulator added that X’s representatives would be summoned.

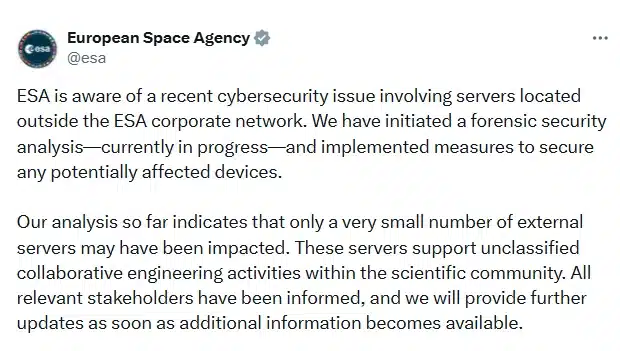

Source: ESA Twitter Handle

ESA said it will provide further updates as additional details become available.

Source: Data Breach Forum

ESA has not verified the authenticity or scope of the claims made by the threat actor.

So far, ESA has not disclosed which specific external servers were compromised or whether any credentials or development assets referenced by the threat actor were confirmed to be exposed.

Founded 50 years ago and headquartered in Paris, the European Space Agency is an intergovernmental organization that coordinates space activities across 23 member states.

Given ESA’s role in space exploration, satellite systems, and scientific research, cybersecurity incidents involving the agency carry heightened strategic and reputational significance.

Threats now move faster than human workflows can respond. Static rules, manual triage, and analyst-centric escalation chains break down when adversaries use AI to adapt in real time. As a result, CISOs are increasingly backing AI-native SOC platforms that operate through autonomous agents rather than dashboards and alerts.

Cyble Blaze AI exemplifies this shift. Built as an AI-native, multi-agent cybersecurity platform, Blaze AI enables continuous threat hunting, real-time correlation, and autonomous response, allowing security teams to identify and neutralize threats in seconds rather than hours. In practice, this moves security operations from reactive monitoring to machine-speed defense.

AI-SOC is not about replacing analysts; it is about re-architecting operations so humans supervise outcomes instead of chasing alerts. Behavioural analysis, automated decisioning, and immediate containment are no longer “advanced capabilities”—they are foundational.

Any CISO still relying on static rules and manual triage in 2026 will be explaining failure, not preventing it.

Finally, CISOs will invest in tools that translate cyber risk into business reality.

By 2026, security leaders will no longer be judged on how many threats they block, but on how clearly they can explain risk, impact, and trade-offs to the business. Boards are done with abstract heat maps and technical severity scores. They want to know what a risk costs, what reducing it achieves, and what happens if it is ignored.

This is where risk quantification platforms come into play. By framing cyber exposure in business terms, they allow CISOs to prioritize controls, justify investment decisions, and have credible, outcome-driven conversations at the executive level. Platforms such as Cyble Saratoga, which focus on moving organizations beyond subjective assessments toward measurable risk understanding, reflect this shift in how security decisions are made.

In 2026, outcomes will matter more than effort. CISOs who cannot quantify risk and articulate trade-offs will lose influence, and eventually relevance.

Image Source: https://www.justice.gov/

When users clicked on these fraudulent search ads, they believed they were visiting their bank’s official website. In reality, they were redirected to fake bank websites controlled by the attackers. Once victims entered their usernames and passwords, malicious software embedded in the fake pages captured those details in real time.

The stolen login credentials were then used to access legitimate bank accounts. From there, the criminals initiated unauthorized bank transfers, effectively draining funds before victims realized their accounts had been compromised.

Investigators confirmed that the seized domain continued hosting stolen credentials and backend infrastructure as recently as November 2025.
