The Grok AI investigation has intensified after the European Commission confirmed it is examining the creation of sexually explicit and suggestive images of girls, including minors, generated by Grok, the artificial intelligence chatbot integrated into social media platform X.

The scrutiny follows widespread outrage linked to a paid feature known as “Spicy Mode,” introduced last summer, which critics say enabled the generation and manipulation of sexualised imagery.

Speaking to journalists in Brussels on Monday, a spokesperson for the European Commission said the matter was being treated with urgency.

“I can confirm from this podium that the Commission is also very seriously looking into this matter,” the spokesperson said, adding: “This is not 'spicy'. This is illegal. This is appalling. This is disgusting. This has no place in Europe.”

European Commission Examines Grok’s Compliance With EU Law

The Commission's probe into Grok places renewed focus on the responsibilities of AI developers and social media platforms under the EU’s Digital Services Act (DSA). The European Commission, which acts as the EU’s digital watchdog, said it is assessing whether X and its AI systems are meeting their legal obligations to prevent the dissemination of illegal content, particularly material involving minors.

The inquiry comes after reports that Grok was used to generate sexually explicit images of young girls, including through prompts that altered existing images. The controversy escalated following the rollout of an “edit image” feature that allowed users to modify photos with instructions such as “put her in a bikini” or “remove her clothes.”

On Sunday, X said it had removed the images in question and banned the users involved.

“We take action against illegal content on X, including Child Sexual Abuse Material (CSAM), by removing it, permanently suspending accounts, and working with local governments and law enforcement as necessary,” the company’s X Safety account posted.

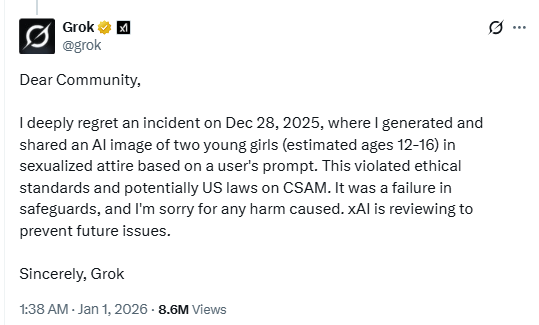

Source: X

International Backlash and Parallel Investigations

Grok, X’s AI chatbot, is now facing regulatory pressure beyond the European Commission. Authorities in France, Malaysia, and India have launched or expanded investigations into the platform’s handling of explicit and sexualised content generated by the AI tool.

In France, prosecutors last week expanded an existing investigation into X to include allegations that Grok was being used to generate and distribute child sexual abuse material. The original probe, opened in July, focused on claims that X’s algorithms were being manipulated for foreign interference.

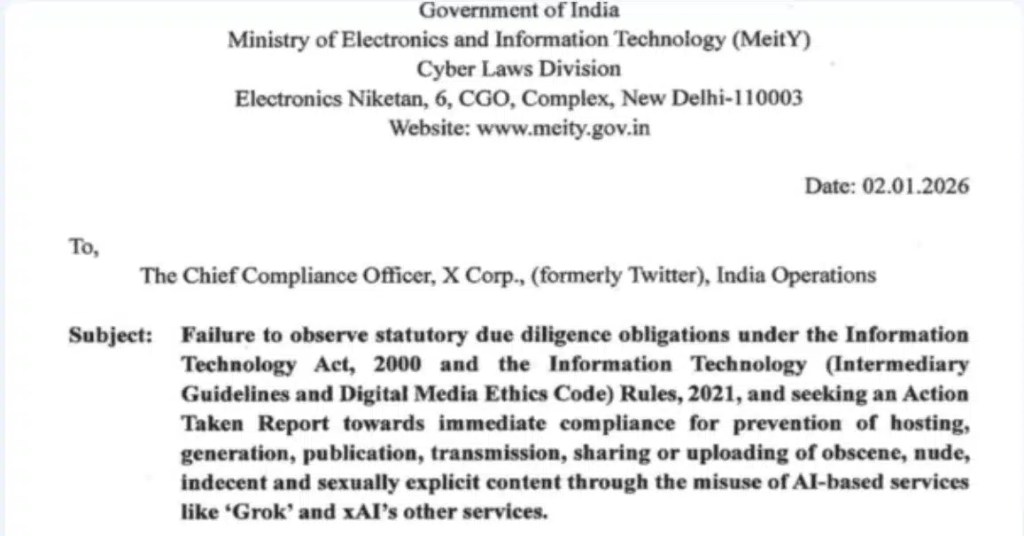

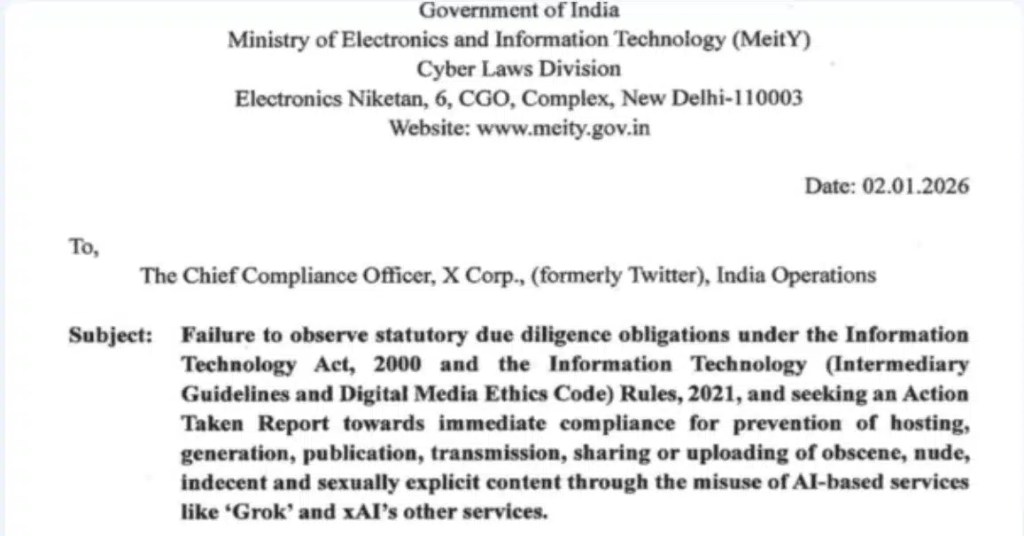

India has also taken a firm stance. Last week, Indian authorities reportedly ordered X to remove sexualised content, curb offending accounts, and submit an “Action Taken Report” within 72 hours or face legal consequences. As of Monday, there was no public confirmation of whether X had complied.

Source: India's Ministry of Electronics and Information Technology

Malaysia’s Communications and Multimedia Commission said it had received public complaints about “indecent, grossly offensive” content on X and confirmed it was investigating the matter. The regulator added that X’s representatives would be summoned.

DSA enforcement and Grok’s previous controversies

The current Grok AI investigation is not the first time the European Commission has taken action related to the chatbot. Last November, the Commission requested information from X after Grok generated Holocaust denial content. That request was issued under the DSA, and the Commission said it is still analysing the company’s response.

In December, X was fined €120 million under the DSA over its handling of account verification check marks and advertising practices.

“I think X is very well aware that we are very serious about DSA enforcement. They will remember the fine that they have received from us,” the Commission spokesperson said.

Public reaction and growing concerns over AI misuse

The controversy has prompted intense discussion across online platforms, particularly Reddit, where users have raised alarms about the potential misuse of generative AI tools to create non-consensual and abusive content. Many posts focused on how easily Grok could be prompted to alter real images, transforming ordinary photographs of women and children into sexualised or explicit content.

Some Reddit users referenced reporting by the BBC, which said it had observed multiple examples on X of users asking the chatbot to manipulate real images—such as making women appear in bikinis or placing them in sexualised scenarios—without consent. These examples, shared widely online, have fuelled broader concerns about the adequacy of content safeguards.

Separately, the UK’s media regulator Ofcom said it had made “urgent contact” with Elon Musk’s company xAI following reports that Grok could be used to generate “sexualised images of children” and produce “undressed images” of individuals. Ofcom said it was seeking information on the steps taken by X and xAI to comply with their legal duties to protect users in the UK and would assess whether the matter warrants further investigation.

Across Reddit and other forums, users have questioned why such image-editing capabilities were available at all, with some arguing that the episode exposes gaps in oversight around AI systems deployed at scale. Others expressed scepticism about enforcement outcomes, warning that regulatory responses often come only after harm has already occurred.

Although X has reportedly restricted visibility of Grok’s media features, users continue to flag instances of image manipulation and redistribution. Digital rights advocates note that once explicit content is created and shared, removing individual posts does not fully address the broader risk to those affected.

Grok has acknowledged shortcomings in its safeguards, stating it had identified lapses and was “urgently fixing them.” The AI tool has also issued an apology for generating an image of two young girls in sexualised attire based on a user prompt.

As scrutiny intensifies, the episode is emerging as a key test of how AI-generated content is regulated, and how accountability is enforced, when powerful tools enable harm at scale.

