On a cozy December morning, as children in Australia set their bags aside for the holiday season and picked up their tablets and phones to take a selfie and announce to the world that they were all set for the fun to begin, something felt amiss. They couldn't access their Snapchat and Instagram accounts. No, it wasn't another outage caused by a cyberattack; they could see their parents lounging on the couch, laughing at dog dance reels. So why were they locked out? The answer: the ban on social media for children under 16 had officially taken effect.

It wasn't just one or 10 or 100 but more than one million young users who woke up locked out of their social media. No TikTok scroll. No Snapchat streak. No YouTube comments. Australia had quietly entered a new era: the world’s first nationwide ban on social media for children under 16, effective December 10.

The move has sparked global debate, parental relief, youth frustration, and a broader question: Is this the start of a global shift, or a risky social experiment?

Prime Minister Anthony Albanese was clear about why his government took this unprecedented step.

“Social media is doing harm to our kids, and I’m calling time on it,” he said during a press conference. “I’ve spoken to thousands of parents… they’re worried sick about the safety of our kids online, and I want Australian families to know that the Government has your back.”

Under the Albanese government's policy, platforms including Instagram, Facebook, X, Snapchat, TikTok, Reddit, Twitch, Kick, Threads and YouTube must block users under 16 or face fines of up to AU$32 million. Parents and children won’t be penalized, but tech companies will.

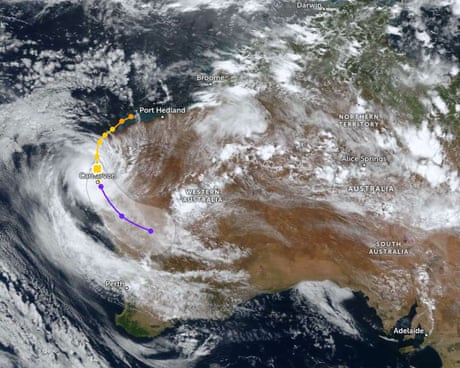

Source: eSafety Commissioner

Australia's Ban on Social Media: A Big Question

Albanese pointed to rising concerns about the effects of social media on children, from body-image distortion to exposure to inappropriate content and addictive algorithms that tug at young attention spans.

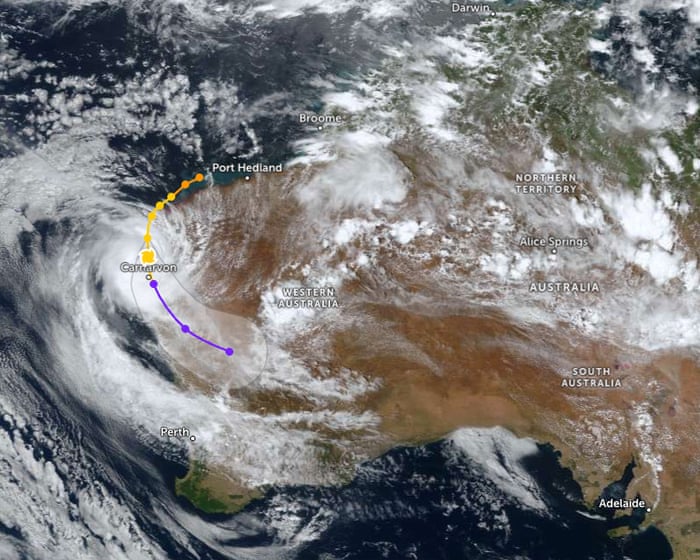

Source: Created using Google Gemini

Research supports these concerns. A Pew Research Center study found:

- 48% of teens say social media has a mostly negative effect on people their age, up sharply from 32% in 2022.

- 45% feel they spend too much time on social media.

- Teen girls experience more negative impacts than boys, including mental health struggles (25% vs 14%) and loss of confidence (20% vs 10%).

- Yet paradoxically, 74% of teens feel more connected to friends because of social media, and 63% use it for creativity.

These contradictions make the issue far from black and white.

Psychologists remind us that adolescence, beginning around age 10 and stretching into the mid-20s, is a time of rapid biological and social change, and that maturity levels vary. This means that a one-size-fits-all ban on social media may overshoot the mark.

Ban on Social Media for Users Under 16: How People Reacted

Australia’s announcement, first revealed in November 2024, has prompted countries from Malaysia to Denmark to consider similar legislation. But not everyone is convinced this is the right way forward.

Supporters Applaud “A Chance at a Real Childhood”

Pediatric occupational therapist Cris Rowan, who has spent 22 years working with children, celebrated the move:

“This may be the first time children have the opportunity to experience a real summer,” she said. “Canada should follow Australia’s bold initiative. Parents and teachers can start their own movement by banning social media from homes and schools.”

Parents’ groups have also welcomed the decision, seeing it as a necessary intervention in a world where screens dominate childhood.

Others Say the Ban Is Imperfect, but Necessary

Australian author Geoff Hutchison puts it bluntly: “We shouldn’t look for absolutes. It will be far from perfect. But we can learn what works… We cannot expect the repugnant tech bros to care.”

His view reflects a broader belief that tech companies have too much power, and too little accountability.

Experts Warn Against False Security

However, some experts caution that Australia's ban on social media may create the illusion of safety while failing to address deeper issues.

Professor Tama Leaver, an Internet Studies expert at Curtin University, told The Cyber Express that while the ban on social media addresses some risks, such as algorithmic amplification of inappropriate content and endless scrolling, many online dangers remain.

“The social media ban only really addresses one set of risks for young people, which is algorithmic amplification of inappropriate content and the doomscrolling or infinite scroll. Many risks remain. The ban does nothing to address cyberbullying since messaging platforms are exempt from the ban, so cyberbullying will simply shift from one platform to another.”

Leaver also noted that restricting access to popular platforms will not drive children offline. Because of the ban, young users will explore whatever digital spaces remain, which could be less regulated and potentially riskier.

“Young people are not leaving the digital world. If we take some apps and platforms away, they will explore and experiment with whatever is left. If those remaining spaces are less known and more risky, then the risks for young people could definitely increase. Ideally the ban will lead to more conversations with parents and others about what young people explore and do online, which could mitigate many of the risks.”

From a broader perspective, Leaver emphasized that the ban on social media will only be fully beneficial if accompanied by significant investment in digital literacy and digital citizenship programs across schools:

“The only way this ban could be fully beneficial is if there is a huge increase in funding and delivery of digital literacy and digital citizenship programs across the whole K-12 educational spectrum. We have to formally teach young people those literacies they might otherwise have learnt socially, otherwise the ban is just a 3 year wait that achieves nothing.”

He added that platforms themselves should take a proactive role in protecting children:

“There is a global appetite for better regulation of platforms, especially regarding children and young people. A digital duty of care which requires platforms to examine and proactively reduce or mitigate risks before they appear on platforms would be ideal, and is something Australia and other countries are exploring. Minimizing risks before they occur would be vastly preferable to the current processes which can only usually address harm once it occurs.”

Looking at the global stage, Leaver sees Australia's ban on social media as a potential learning opportunity for other nations:

“There is clearly global appetite for better and more meaningful regulation of digital platforms. For countries considering their own bans, taking the time to really examine the rollout in Australia, to learn from our mistakes as much as our ambitions, would seem the most sensible path forward.”

Other specialists continue to warn that the ban on social media could isolate vulnerable teenagers or push them toward more dangerous, unregulated corners of the internet.

Legal Voices Raise Serious Constitutional Questions

Senior Supreme Court Advocate Dr. K. P. Kylasanatha Pillay offered a thoughtful reflection:

“Exposure of children to the vagaries of social media is a global concern… But is a total ban feasible? We must ask whether this is a reasonable restriction or if it crosses the limits of state action. Not all social media content is harmful. The best remedy is to teach children awareness.”

His perspective reflects growing debate about rights, safety, and state control.

LinkedIn, Reddit, and the Public Divide

Social media itself has become the battleground for reactions.

On Reddit, youngsters were particularly vocal about the ban on social media. One teen wrote:

“Good intentions, bad execution. This will make our generation clueless about internet safety… Social media is how teenagers express themselves. This ban silences our voices.”

Another pointed out the easy loophole:

“Bypassing this ban is as easy as using a free VPN. Governments don’t care about safety; they want control.”

But one adult user disagreed:

“Everyone against the ban seems to be an actual child. I got my first smartphone at 20. My parents were right — early exposure isn’t always good.”

This generational divide is at the heart of the debate.

Brands, Marketers, and Schools Brace for Impact

Bindu Sharma, Founder of World One Consulting, highlighted the global implications:

“Ten of the biggest platforms were ordered to block children… The world is watching how this plays out.”

If the ban succeeds, brands may rethink how they target younger audiences. If it fails, digital regulation worldwide may need reimagining.

Where Does This Leave the World?

Australia’s decision to ban social media for children under 16 is bold, controversial, and rooted in good intentions. It could reshape how societies view childhood, technology, and digital rights.

But as critics note, bans on social media platforms can also create unintended consequences, from delinquency to digital illiteracy.

What’s clear is this: Australia has started a global conversation that’s no longer avoidable.

As one LinkedIn user concluded:

“Safety of the child today is assurance of the safety of society tomorrow.”
