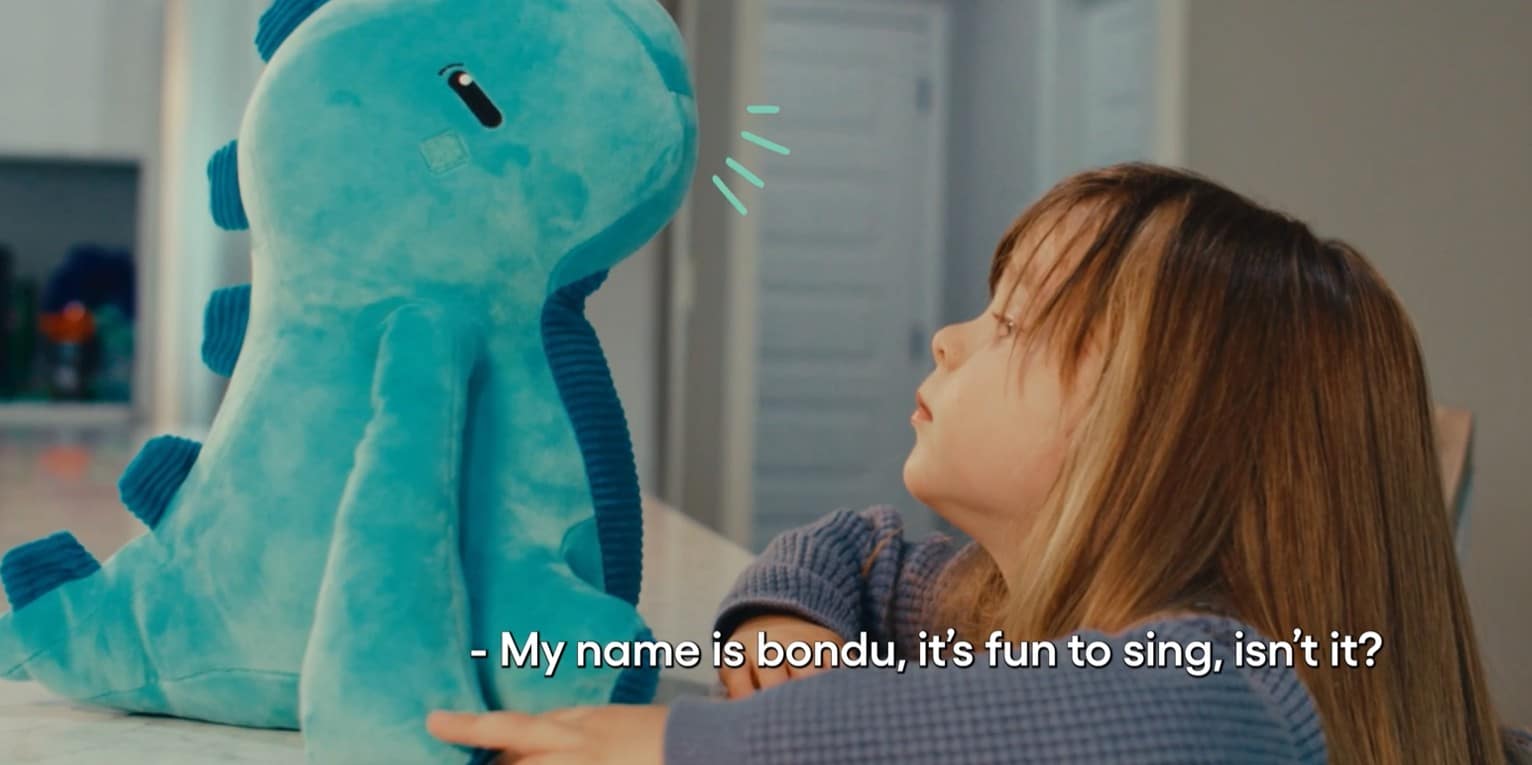

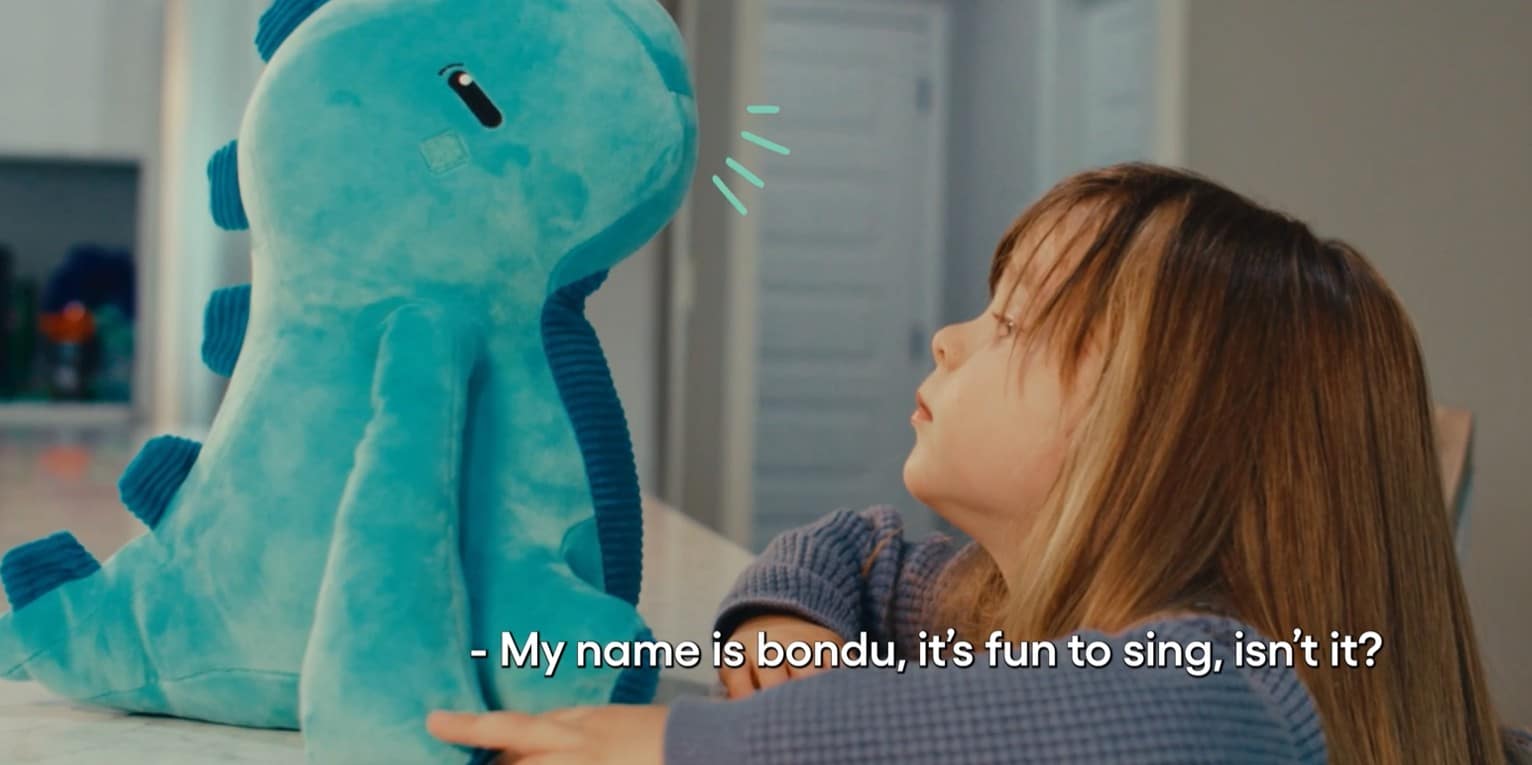

A security researcher investigating an AI toy for a neighbor found an exposed admin panel that could have leaked the personal data and conversations of the children using the toy.

The findings, detailed in a blog post by security researcher Joseph Thacker, outline the work he did with fellow researcher Joel Margolis, who found the exposed admin panel for the Bondu AI toy.

Margolis found an intriguing domain (console.bondu.com) in the mobile app backend’s Content Security Policy headers. There he found a button that simply said: “Login with Google.”
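For readers unfamiliar with the technique, a Content Security Policy header lists the origins an app is allowed to load from or connect to, so it can reveal related hostnames. The following is a minimal sketch of pulling hostnames out of such a header. The function name `hosts_in_csp` and the `example.com` header are hypothetical illustrations, not the researchers' tooling or Bondu's actual header.

```python
from urllib.parse import urlparse


def hosts_in_csp(header_value: str) -> set[str]:
    """Collect host names that appear as sources in a CSP header value."""
    hosts = set()
    for directive in header_value.split(";"):
        tokens = directive.split()
        # tokens[0] is the directive name (e.g. connect-src); the rest are sources.
        for source in tokens[1:]:
            if source.startswith("'") or source == "*":
                continue  # keywords such as 'self' or a bare wildcard carry no host
            if source.endswith(":"):
                continue  # scheme-only sources such as "https:" or "data:"
            # urlparse only recognises a host when a "//" prefix is present.
            candidate = source if "://" in source else "//" + source
            host = urlparse(candidate).hostname
            if host:
                hosts.add(host)
    return hosts


# Hypothetical header value, for illustration only.
example_header = (
    "default-src 'self'; "
    "connect-src https://api.example.com https://console.example.com; "
    "img-src data: *.cdn.example.com"
)
found = hosts_in_csp(example_header)
```

Any hostname that turns up this way is only a lead; whether it is sensitive depends on what is actually served there.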

“By itself, there’s nothing weird about that as it was probably just a parent portal,” Thacker wrote. But instead of a parent portal, it turned out to be the Bondu core admin panel.

“We had just logged into their admin dashboard despite [not] having any special accounts or affiliations with Bondu themselves,” Thacker said.
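The post does not describe Bondu's code, but the behavior it reports matches a common pattern: a sign-in step proves who a user is (authentication) without checking whether that user is allowed in (authorization). The sketch below illustrates the missing second check under that assumption. `Identity`, `ADMIN_EMAILS`, and `can_open_admin_console` are hypothetical names, and the sketch assumes the identity provider's token has already been verified elsewhere.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Identity:
    """Claims taken from an already-verified sign-in token (assumed)."""
    email: str
    email_verified: bool


# Hypothetical allowlist of staff accounts permitted to use the console.
ADMIN_EMAILS = frozenset({"ops@example.com"})


def can_open_admin_console(identity: Optional[Identity]) -> bool:
    """Return True only for signed-in users who are explicitly allowlisted."""
    if identity is None:
        return False  # not signed in at all
    if not identity.email_verified:
        return False  # do not trust an unverified address
    # A successful sign-in alone is not enough; the account must be allowlisted.
    return identity.email.lower() in ADMIN_EMAILS
```

In this sketch, an arbitrary account that signs in successfully is still refused unless it appears on the allowlist.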

AI Toy Admin Panel Exposed Children’s Conversations

After some investigation in the admin panel, the researchers found they had full access to “Every conversation transcript that any child has had with the toy,” which numbered in the “tens of thousands of sessions.”

The panel also exposed personal data about children and their families, along with device controls, including:

- The child’s name and birth date

- Family member names

- The child’s likes and dislikes

- Objectives for the child (defined by the parent)

- The name given to the toy by the child

- Previous conversations between the child and the toy (used to give the LLM context)

- Device information, such as location via IP address, battery level, awake status, and more

- The ability to update device firmware and reboot devices

They noticed the application is based on OpenAI GPT-5 and Google Gemini. “Somehow, someway, the toy gets fed a prompt from the backend that contains the child profile information and previous conversations as context,” Thacker wrote. “As far as we can tell, the data that is being collected is actually disclosed within their privacy policy, but I doubt most people realize this unless they go and read it (which most people don’t do nowadays).”
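Thacker does not say how the prompt is assembled, so the following is only a rough illustration of the general idea: a backend combines stored profile fields and recent conversation turns into the text sent to a language model. The function `build_prompt`, the field names, and the sample data are all hypothetical.

```python
def build_prompt(profile: dict, recent_turns: list[str], max_turns: int = 3) -> str:
    """Combine stored profile fields and the latest turns into one prompt string."""
    lines = [
        f"You are {profile['toy_name']}, a friendly toy talking with {profile['child_name']}.",
        "Likes: " + ", ".join(profile["likes"]),
        "Recent conversation:",
    ]
    # Keep only the most recent turns; guard against max_turns <= 0,
    # because a slice of [-0:] would return the whole list.
    lines.extend(recent_turns[-max_turns:] if max_turns > 0 else [])
    return "\n".join(lines)


# Hypothetical sample data, for illustration only.
sample_profile = {"toy_name": "Dino", "child_name": "Sam", "likes": ["space", "trains"]}
sample_turns = ["turn 1", "turn 2", "turn 3", "turn 4"]
prompt = build_prompt(sample_profile, sample_turns)
```

A design like this explains why the backend would need to store profiles and transcripts, which is also what makes an exposed console so sensitive.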

In addition to the authentication bypass, they also discovered an Insecure Direct Object Reference (IDOR) vulnerability in the product’s API “that allowed us to retrieve any child’s profile data by simply guessing their ID.”
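An IDOR arises when an API returns whatever record matches the requested ID without checking that the record belongs to the caller. The sketch below shows the usual remedy, an ownership check on every lookup. It is an illustration under assumed names (`ChildProfile`, `PROFILES`, `get_profile`, `Forbidden`) and sample data, not Bondu's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChildProfile:
    profile_id: int
    parent_account_id: str
    name: str


class Forbidden(Exception):
    """Raised when the caller may not see the requested profile."""


# Hypothetical in-memory store standing in for a database.
PROFILES = {
    101: ChildProfile(101, "parent-a", "Sam"),
    102: ChildProfile(102, "parent-b", "Alex"),
}


def get_profile(requesting_account_id: str, profile_id: int) -> ChildProfile:
    """Return a profile only if it belongs to the requesting account."""
    profile = PROFILES.get(profile_id)
    # Use the same error for "missing" and "not yours" so that
    # responses do not reveal which IDs exist.
    if profile is None or profile.parent_account_id != requesting_account_id:
        raise Forbidden("profile not available")
    return profile
```

With this check in place, guessing another family's ID yields the same refusal as a nonexistent ID. Hard-to-guess identifiers can help as well, but they do not replace the ownership check.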

“This was all available to anyone with a Google account,” Thacker said. “Naturally we didn’t access nor store any data beyond what was required to validate the vulnerability in order to responsibly disclose it.”

A (Very) Quick Response from Bondu

Margolis reached out to Bondu’s CEO on LinkedIn over the weekend – and the company took down the console “within 10 minutes.”

“Overall we were happy to see how the Bondu team reacted to this report; they took the issue seriously, addressed our findings promptly, and had a good collaborative response with us as security researchers,” Thacker said.

The company took other steps to investigate and look for additional security flaws, and also started a bug bounty program. They examined console access logs and found that there had been no unauthorized access except for the researchers’ activity, so the company was saved from a data breach.

Despite the positive experience working with Bondu, the episode made Thacker reconsider buying AI toys for his own kids.

“To be honest, Bondu was totally something I would have been prone to buy for my kids before this finding,” he wrote. “However this vulnerability shifted my stance on smart toys, and even smart devices in general.”

“AI models are effectively a curated, bottled-up access to all the information on the internet,” he added. “And the internet can be a scary place. I’m not sure handing that type of access to our kids is a good idea.”

Aside from potential security issues, “AI makes this problem even more interesting because the designer (or just the AI model itself) can have actual ‘control’ of something in your house. And I think that is even more terrifying than anything else that has existed yet,” he said.

Bondu's website says the AI toy was built with child safety in mind, noting that its "safety and behavior systems were built over 18 months of beta testing with thousands of families. Thanks to rigorous review processes and continuous monitoring, we did not receive a single report of unsafe or inappropriate behavior from bondu throughout the entire beta period."