Bruce Schneier Reminds LLM Engineers About the Risks of Prompt Injection Vulnerabilities

Read more of this story at Slashdot.

Jan Leike, a key safety researcher at the firm behind ChatGPT, quit days after the launch of its latest AI model, GPT-4o

A former senior employee at OpenAI has said the company behind ChatGPT is prioritising “shiny products” over safety, revealing that he quit after a disagreement over key aims reached “breaking point”.

Jan Leike was a key safety researcher at OpenAI as co-head of its superalignment team, which worked to ensure that powerful artificial intelligence systems adhered to human values and aims. His intervention comes before a global artificial intelligence summit in Seoul next week, where politicians, experts and tech executives will discuss oversight of the technology.

© Photograph: Michael Dwyer/AP

Source: www.cybertalk.org – Author: slandau

EXECUTIVE SUMMARY: Over 90 percent of organizations consider threat hunting a challenge. More specifically, 71 percent say that both prioritizing alerts to investigate and gathering enough data to evaluate a signal's maliciousness can be difficult. Threat hunting is necessary simply because no cyber security protection is 100% effective. […]

The post How AI turbocharges your threat hunting game – Source: www.cybertalk.org appeared first on CISO2CISO.COM & CYBER SECURITY GROUP.

Source: www.cybertalk.org – Author: slandau

EXECUTIVE SUMMARY: Cyber security experts have recently uncovered a sophisticated cyber attack campaign targeting U.S.-based organizations involved in artificial intelligence (AI) projects. Targets have included organizations in academia, private industry and government service. Known as UNK_SweetSpecter, this campaign uses the SugarGh0st remote access trojan (RAT) to infiltrate networks. […]

The post SugarGh0st RAT variant, targeted AI attacks – Source: www.cybertalk.org appeared first on CISO2CISO.COM & CYBER SECURITY GROUP.

Jan Leike, who ran OpenAI's "Super Alignment" team, believes there should be more focus on preparing for the next generation of AI models, including on safety.

The post A Former OpenAI Leader Says Safety Has ‘Taken a Backseat to Shiny Products’ at the AI Company appeared first on SecurityWeek.

Existing Regulations

As part of its guidance to agencies in the AI Risk Management Framework (AI RMF), the National Institute of Standards and Technology (NIST) recommends that an organization maintain an inventory of its AI systems and models. An inventory is necessary for risk identification and assessment, monitoring and auditing, and governance […]
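
To make the inventory recommendation concrete, here is a minimal sketch of what entries might look like if kept as simple Python records. The field names, the three-level risk scale, and the 180-day review window are hypothetical illustrations chosen for this example; the AI RMF asks for an inventory but does not prescribe these particular fields.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class AISystemRecord:
    """One inventory entry. All field names here are hypothetical."""
    name: str
    owner: str          # accountable team or official
    purpose: str        # what the system is used for
    model: str          # underlying model or vendor product
    risk_level: str     # illustrative scale: "low", "medium", "high"
    last_reviewed: date
    tags: list[str] = field(default_factory=list)


def due_for_review(inventory: list[AISystemRecord], today: date,
                   max_age_days: int = 180) -> list[str]:
    """Names of systems not reviewed within max_age_days (monitoring/auditing)."""
    return [r.name for r in inventory
            if (today - r.last_reviewed).days > max_age_days]


def by_risk(inventory: list[AISystemRecord], level: str) -> list[str]:
    """Names of systems at a given risk level (risk identification/assessment)."""
    return [r.name for r in inventory if r.risk_level == level]


# Example inventory with made-up entries.
inventory = [
    AISystemRecord("benefits-chatbot", "Citizen Services", "Answer benefits questions",
                   "hosted LLM", "high", date(2024, 1, 10)),
    AISystemRecord("doc-classifier", "Records Office", "Route incoming forms",
                   "in-house classifier", "medium", date(2024, 5, 1)),
]

print(due_for_review(inventory, today=date(2024, 8, 1)))  # ['benefits-chatbot']
print(by_risk(inventory, "high"))                          # ['benefits-chatbot']
```

Keeping entries as plain records makes the monitoring and audit questions easy to answer with simple queries; a real agency inventory would follow whatever schema its own governance process requires.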

The post An Analysis of AI usage in Federal Agencies appeared first on Security Boulevard.

Stuff posted on Reddit is getting incorporated into ChatGPT, Reddit and OpenAI announced on Thursday. The new partnership grants OpenAI access to Reddit’s Data API, giving the generative AI firm real-time access to Reddit posts.

Reddit content will be incorporated into ChatGPT "and new products," Reddit's blog post said. The social media firm claims the partnership will "enable OpenAI’s AI tools to better understand and showcase Reddit content, especially on recent topics." OpenAI will also start advertising on Reddit.

The deal is similar to one that Reddit struck with Google in February that allows the tech giant to make "new ways to display Reddit content" and provide "more efficient ways to train models," Reddit said at the time. Neither Reddit nor OpenAI disclosed the financial terms of their partnership, but Reddit's partnership with Google was reportedly worth $60 million.

(credit: Tim Robberts | DigitalVision)

After launching Slack AI in February, Slack appears to be digging its heels in, defending its vague policy that by default sucks up customers' data—including messages, content, and files—to train Slack's global AI models.

According to Slack engineer Aaron Maurer, Slack has explained in a blog post that the Salesforce-owned chat service does not train its large language models (LLMs) on customer data. But Slack's policy may need updating "to explain more carefully how these privacy principles play with Slack AI," Maurer wrote on Threads, partly because the policy "was originally written about the search/recommendation work we've been doing for years prior to Slack AI."

Maurer was responding to a Threads post from engineer and writer Gergely Orosz, who called for companies to opt out of data sharing until the policy is clarified, not in a blog post but in the actual policy language.

Slack reveals it has been training AI/ML models on customer data, including messages, files and usage information. Customers are opted in by default.

The post User Outcry as Slack Scrapes Customer Data for AI Model Training appeared first on SecurityWeek.

A critical vulnerability tracked as CVE-2024-34359 and dubbed Llama Drama can allow hackers to target AI product developers.

The post Critical Flaw in AI Python Package Can Lead to System and Data Compromise appeared first on SecurityWeek.

The Sony Music letter expressly prohibits artificial intelligence developers from using its music, which includes artists such as Beyoncé. (credit: Kevin Mazur/WireImage for Parkwood via Getty Images)

Sony Music is sending warning letters to more than 700 artificial intelligence developers and music streaming services globally in the latest salvo in the music industry’s battle against tech groups ripping off artists.

The Sony Music letter, which has been seen by the Financial Times, expressly prohibits AI developers from using its music—which includes artists such as Harry Styles, Adele and Beyoncé—and opts out of any text and data mining of any of its content for any purposes such as training, developing or commercializing any AI system.

Sony Music is sending the letter to companies developing AI systems including OpenAI, Microsoft, Google, Suno, and Udio, according to those close to the group.

This is essentially the kind of water heater the author has hooked up, minus the Wi-Fi module that led him down a rabbit hole. Also, not 140 degrees F. Yikes. (credit: Getty Images)

The hot water took too long to come out of the tap. That is what I was trying to solve. I did not intend to discover that, for a while there, water heaters like mine may have been open to anybody. That, with some API tinkering and an email address, a bad actor could possibly set its temperature or make it run constantly. That’s just how it happened.

Let's take a step back. My wife and I moved into a new home last year. It had a Rinnai tankless water heater tucked into a utility closet in the garage. The builder and home inspector didn't say much about it, other than to run a yearly cleaning cycle on it.

Because they don't keep a big tank of water heated and ready to be delivered to any tap in the house, tankless water heaters save energy: up to 34 percent, according to the Department of Energy. But they're also, by default, slower. Opening a tap triggers the heat exchanger, which heats the water (with natural gas, in my case), and the device then has to push it through the line to where it's needed.

© Photo Illustration by The New York Times

Screenshot from the documentary Who Is Bobby Kennedy? (credit: whoisbobbykennedy.com)

In a lawsuit that seems determined to ignore that Section 230 exists, Robert F. Kennedy Jr. has sued Meta for allegedly shadowbanning his million-dollar documentary, Who Is Bobby Kennedy?, and preventing his supporters from advocating for his presidential campaign.

According to Kennedy, Meta is colluding with the Biden administration to sway the 2024 presidential election by suppressing Kennedy's documentary and making it harder to support Kennedy's candidacy. This allegedly has caused "substantial donation losses," while also violating the free speech rights of Kennedy, his supporters, and his film's production company, AV24.

Meta had initially restricted the documentary on Facebook and Instagram but later fixed the issue after discovering that the film was mistakenly flagged by the platforms' automated spam filters.

One in three office workers who use GenAI admit to sharing customer info, employee details and financial data with the platforms. Are you worried yet?

The post Risks of GenAI Rising as Employees Remain Divided About its Use in the Workplace appeared first on Security Boulevard.

At I/O 2024, Google announced a great new AI feature for Google Photos, simply called Ask Photos. With Ask Photos, you can treat the app like a chatbot such as Gemini or ChatGPT: You can request a specific photo in your library, or ask the app a general question about your photos, and the AI will sift through your entire library to find both the photos and the answers to your queries.

When you ask Ask Photos a question, the bot will make a detailed search of your library on your behalf: It first identifies relevant keywords in your query, such as locations, people, and dates, as well as longer phrases, such as "summer hike in Maine."

After that, Ask Photos will study the search results and decide which ones are most relevant to your original query. Gemini's multimodal abilities allow it to process the elements of each photo, including text, subjects, and actions, which helps it decide whether that image is pertinent to the search. Once Ask Photos picks the relevant photos and videos for your query, it combines them into a helpful response.
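
Google hasn't published how Ask Photos is implemented. As a rough illustration of the two-step flow described above (pull search terms out of the query, then rank candidate photos by relevance), here is a toy Python sketch. It assumes every photo already carries text tags; the tag data, the stop-word list, and the overlap scoring are all hypothetical stand-ins for what Gemini does with the actual image contents.

```python
import re
from dataclasses import dataclass

# Hypothetical filler words to ignore; a real system understands the query far better.
STOP_WORDS = {"show", "me", "my", "the", "from", "of", "a", "in", "to",
              "last", "year", "best", "photos", "what", "is"}


@dataclass
class Photo:
    photo_id: str
    tags: set[str]  # assumed precomputed labels: places, people, objects, text


def extract_keywords(query: str) -> set[str]:
    """Step 1: lowercase the query, split it into words, drop filler words."""
    words = re.findall(r"[a-z0-9]+", query.lower())
    return {w for w in words if w not in STOP_WORDS}


def rank_photos(query: str, library: list[Photo], top_k: int = 3) -> list[str]:
    """Step 2: score photos by keyword overlap and return the best matches."""
    keywords = extract_keywords(query)
    scored = [(len(keywords & p.tags), p.photo_id) for p in library]
    scored.sort(key=lambda s: (-s[0], s[1]))  # highest score first, then by id
    return [pid for score, pid in scored[:top_k] if score > 0]


library = [
    Photo("IMG_001", {"spain", "beach", "sunset"}),
    Photo("IMG_002", {"maine", "hike", "summer", "forest"}),
    Photo("IMG_003", {"spain", "madrid", "museum"}),
    Photo("IMG_004", {"car", "license", "plate"}),
]

print(rank_photos("Show me photos from my summer hike in Maine", library))
# ['IMG_002']
print(rank_photos("Show me the best photos from my trip to Spain last year", library))
# ['IMG_001', 'IMG_003']
```

Simple keyword overlap can't tell a "best" photo from an ordinary one, which is exactly the gap a multimodal model is meant to close; the sketch only shows the shape of the pipeline.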

Google says your personal data in Google Photos is never used for ads, and human reviewers won't see the conversations and personal data in Ask Photos except "in rare cases to address abuse or harm." The company also said it doesn't use this Google Photos data to train its other AI products, including other Gemini models and services.

Of course, Ask Photos is an ideal way to quickly find specific photos you're looking for. You could ask, "Show me the best photos from my trip to Spain last year," and Google Photos will pull up all your photos from that vacation, along with a text summary of its results. You can use the feature to arrange these photos in a new album, or generate captions for a social media post.

However, the more interesting use here is for finding answers to questions contained in your photos without having to scroll through those photos yourself. Google shared a great example during its presentation: If you ask the app, "What is my license plate number?" it will identify your car out of all the photos of cars in your library. It will not only return a picture of your car with your license plate, but will answer the original question itself. If you're offering advice to a friend about the best restaurants to try in a city you've been to, you could ask, "What restaurants did we go to in New York last year?" and Ask Photos will return both the images of the restaurants in your library, as well as a list you can share.

Google says the experimental feature will roll out in the coming months but gave no specific timeframe.

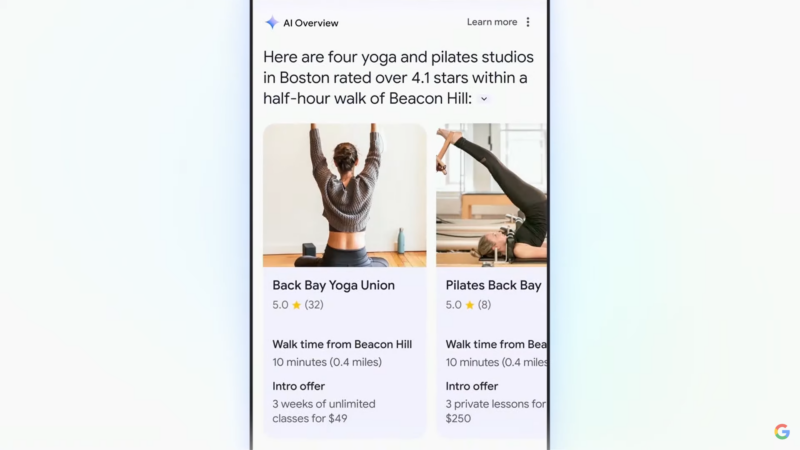

AI has been the dominating force in this year's Google I/O—and one of the biggest announcements that Google made was a new Gemini model customized for Google Search. Over the next few weeks, Google will be rolling out a few AI features in Search, including AI Overviews, AI-organized search results, and search with video.

When you're searching for something on Google and want a quick answer, AI Overviews come into play. The feature gives you an AI-generated overview of the topic you're searching for and cites its sources with links you can click through for further reading. Google had been testing AI Overviews in Search Labs and has been rolling the feature out to everyone in the U.S. this week.

At a later date, you'll be able to adjust your AI Overview with options to simplify some of the terminology used or break down results in more detail. Ideally, this could turn a complex search into something accessible to anyone. Google is also pushing a feature that lets you stack multiple queries into one search: The company used the example of "find the best yoga or pilates studios in Boston and show me details on their intro offers, and walking time from Beacon Hill," and AI Overviews returned a complete result.

As with other generative AI tools, you can also use this feature to put together plans of action, such as meal plans or trip preparations.

In addition to AI Overviews, Google Search will soon be using generative AI to create an "AI-organized results page." The idea is that the AI will sort your most relevant options for you, so you won't have to do as much digging around the web. So when you're searching for something like, say, restaurants for a birthday dinner, Google's AI will suggest the best options it can find, organized beneath AI-generated headlines. AI-organized results will be available for English searches in the U.S.

Google previously rolled out Circle to Search, which lets you circle elements of your screen to start a Google search for that particular subject. But soon, you'll also be able to start a Google search with video. The company gave an example of a customer who bought a used record player whose needle wasn't working properly. The customer took a video of the issue, describing it out loud, and sent it along as a Google search. Google analyzed the issue and returned a relevant result, as if the user had simply typed out the problem in detail.

Search with video will soon be available for Search Labs users in English in the U.S. Google will expand the feature to more users in the coming months.

OpenAI’s updated chatbot GPT-4o is weirdly flirtatious, coquettish and sounds like Scarlett Johansson in Her. Why?

“Any sufficiently advanced technology is indistinguishable from magic,” Arthur C Clarke famously said. And this could certainly be said of the impressive OpenAI update to ChatGPT, called GPT-4o, which was released on Monday. With the slight caveat that it felt a lot like the magician was a horny 12-year-old boy who had just watched the Spike Jonze movie Her.

If you aren’t up to speed on GPT-4o (the o stands for “omni”) it’s basically an all-singing, all-dancing, all-seeing version of the original chatbot. You can now interact with it the same way you’d interact with a human, rather than via text-based questions. It can give you advice, it can rate your jokes, it can describe your surroundings, it can banter with you. It sounds human. “It feels like AI from the movies,” OpenAI CEO Sam Altman said in a blog post on Monday. “Getting to human-level response times and expressiveness turns out to be a big change.”

© Photograph: Warner Bros./Sportsphoto/Allstar

AI chatbots are more popular than ever, and there are plenty of solid options to choose from beyond OpenAI's ChatGPT. One particularly strong competitor is Google's Gemini AI, which used to be called Google Bard. This AI chatbot pulls information from the internet and runs on the latest Gemini language model created by Google.

Bard, or Gemini as the company now calls it, is Google's answer to ChatGPT. It's an AI chatbot designed to respond to various queries and tasks, all while being plugged into Google's search engine and receiving frequent updates. Like most other chatbots, including ChatGPT, Gemini can answer math problems and help with writing articles and documents, as well as with most other tasks you would expect a generative AI bot to do.

So what happened to Bard? Nothing, really: Google just changed the name. Bard is now Gemini, and Gemini is Google's home for all things AI. The company says it wanted to bring everything into one easy-to-follow ecosystem, which is why it felt the name change was important. You can still go to the old bard.google.com address, but it now redirects you to gemini.google.com.

Much like ChatGPT, Gemini is powered by a large language model (LLM) and is designed to respond with reasonable, human-like answers to your queries and requests. Bard originally ran on Google's PaLM 2 language model, but Google has since released an update that adds the Gemini 1.5 Flash and Gemini 1.5 Pro models, the search giant's most complex and capable language models yet. Running Gemini with multiple language models has allowed Google to see the bot in action in several different ways. Gemini can be accessed on any device by visiting the chatbot's website, just like ChatGPT, and is also available on Android through the Gemini app and on iPhone through the Google app.

Gemini is currently available to the general public. Google is still working on the AI chatbot and hopes to keep improving it. To that end, any responses, queries, or tasks submitted to Gemini can be reviewed by Google engineers to help the AI learn from the questions you're asking.

To start using Gemini, simply head over to gemini.google.com and sign in. Users who subscribe to Gemini Advanced can utilize the newest and most powerful versions of the AI language model. (More on that later.)

Gemini 1.0 Pro currently supports over 40 languages. Google hasn't said yet if it plans to add more language support to the chatbot, but a Google support doc notes that it currently supports: Arabic, Bengali, Bulgarian, Chinese (Simplified / Traditional), Croatian, Czech, Danish, Dutch, English, Estonian, Farsi, Finnish, French, German, Greek, Gujarati, Hebrew, Hindi, Hungarian, Indonesian, Italian, Japanese, Kannada, Korean, Latvian, Lithuanian, Malayalam, Marathi, Norwegian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swahili, Swedish, Tamil, Telugu, Thai, Turkish, Ukrainian, Urdu, and Vietnamese.

Gemini 1.5 Pro supports more than 35 languages and is available in over 150 countries and territories. The supported languages include Arabic, Bulgarian, Chinese (Simplified / Traditional), Croatian, Czech, Danish, Dutch, English, Estonian, Farsi, Finnish, French, German, Greek, Hebrew, Hungarian, Indonesian, Italian, Japanese, Korean, Latvian, Lithuanian, Norwegian, Polish, Portuguese, Romanian, Russian, Serbian, Slovak, Slovenian, Spanish, Swahili, Swedish, Thai, Turkish, Ukrainian, and Vietnamese.

(Note: At the time of this article's writing, Google Gemini Advanced is only optimized for English. However, Google says it should still work with any languages Gemini supports.)

Like ChatGPT, Gemini can answer basic questions, help with coding, and solve complex mathematical equations. Additionally, Google added support for multimodal search in July, allowing users to input pictures as well as text into conversations. This, along with the chatbot's other capabilities, lets it perform reverse image searches. Gemini can also include images in its answers, pulled from the search giant's online results.

Google also previously added the ability to generate images in Gemini using its Imagen model. You can take advantage of this new feature by telling the bot to "create an image." This makes the chatbot more competitive with OpenAI, which also offers image generation through DALL-E.

During Google I/O 2024, Google also showed off plans to expand that multimodal support for Gemini to include video and voice, allowing you to chat with the AI chatbot in real time, similar to what we're already seeing with ChatGPT's new GPT-4o model.

Google Gemini is connected to the internet and can draw on the latest information found online. This is a clear advantage over ChatGPT, which only added full internet access back in September, and only for paid users who subscribe to its GPT-4 model.

Now that the chatbot is using Gemini 1.0 Pro and Gemini 1.5 Pro, it's expected to be one of the most accurate chatbots available on the web right now. However, past experience with Gemini has shown that the bot is prone to hallucinate or take credit for information it found via Google searches. This is a problem Google has been working to fix, and the company has managed to improve the results and how they are handled.

However, like any chatbot, Gemini is still capable of creating information that is untrue or plagiarized. As such, it is always recommended you double-check any information that chatbots like Gemini provide, to ensure it is original and accurate.

Gemini is currently free to use, but Google also offers a subscription plan that gives you access to its best AI yet, Gemini Advanced. The service is available as part of Google's new Google One AI Premium Plan, which currently costs $19.99 a month, putting it on par with ChatGPT Plus. The advantage here, of course, is that you also get 2TB of storage in Google Drive, as well as access to Gemini in Gmail, Docs, Slides, Sheets, and more. This feature was previously known as Duet AI, but it has since been brought under the Gemini umbrella.

Google also launched a dedicated Gemini mobile app for Android. iPhone users can access Gemini through the Google app on iOS. Currently, the Gemini mobile app is only available on select devices and only supports English in the U.S. However, Google plans to expand the countries and languages the Gemini app supports in the future. Additionally, the mobile app supports many of the same functions as Google Assistant, and Google is positioning Gemini to replace Assistant in the near future.

Gemini is a solid competitor for ChatGPT, especially now that Gemini should return results more akin to those in GPT-4. The interface is very similar, and the functionality offered by both chatbots should handle most of the queries and tasks that you throw at either of them.

Even though Google also sells a paid plan, Gemini is the more accessible option, since its free model is closer to GPT-4 than ChatGPT's free option is. That said, OpenAI is starting to roll out a version of GPT-4o to all users, including free ones, but it has usage limits and isn't widely available yet.

For now, Gemini presents the fewest barriers to internet access, and it uses Google as its search engine. When ChatGPT does connect to the internet, it uses Bing instead.

Google did share some information about how Gemini compares to GPT-4V, one of the latest versions of GPT-4, and said Gemini achieves more accurate results in several fields. But since no trustworthy independent tests comparing Gemini 1.5 Pro with GPT-4o are available yet, it's unclear exactly how the two newest models from Google and OpenAI compare head to head. Gemini 1.5 Pro does offer a context window of up to one million tokens, so it can handle much longer documents than ChatGPT can right now. And Google isn't stopping there: it plans to offer a Gemini version that supports two million context tokens, which it is already testing with developers.

Ultimately, it's hard to say exactly which one is better, as they both have their strengths. I'd recommend trying to complete whatever task you want to accomplish in both, and then seeing which one works best for your needs. Also, keep in mind that some of the most impressive features that Gemini and ChatGPT offer are not fully available yet. For its part, Google is working on other AI-driven systems, which it could possibly include in Gemini down the line. These include MusicLM, which uses AI to generate music, something the tech giant showed off during Google I/O 2024.

At I/O 2024, Google made lots of exciting AI announcements, but the one that has everyone talking is Project Astra. Essentially, Project Astra is what Google calls an "advanced seeing and talking responsive agent." In other words, a future Google AI will be able to take in context from your surroundings, so you can ask it a question and get a response in real time. It's almost like an amped-up version of Google Lens.

Project Astra is being developed by Google's DeepMind team, whose mission is to build AI that responsibly benefits humanity; this project is one of the ways it is pursuing that goal. Google says Project Astra is built on Gemini 1.5 Pro, which has improved in areas such as translation, coding, and reasoning. As part of this project, Google says it has developed prototype AI agents that process information faster by continuously encoding video frames and combining video and speech input into a timeline of events. The company is also using its speech models to give its AI agents a wider range of intonations.
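
Google hasn't detailed Astra's internals. As a hedged illustration of the "timeline of events" idea only, the Python sketch below assumes video frames and speech segments arrive as two separately time-stamped streams and interleaves them by timestamp with the standard library's `heapq.merge`. The `Event` type, the lookback window, and the string payloads (standing in for real frame encodings and audio) are hypothetical.

```python
import heapq
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    t: float       # seconds since the session started
    source: str    # "video" or "speech"
    payload: str   # stand-in for a frame encoding or a transcribed phrase


def build_timeline(frames: list[Event], speech: list[Event]) -> list[Event]:
    """Interleave two already time-sorted streams into one ordered timeline."""
    return list(heapq.merge(frames, speech, key=lambda e: e.t))


def recent_context(timeline: list[Event], now: float, window: float) -> list[Event]:
    """Events from the last `window` seconds, e.g. to answer a question about them."""
    return [e for e in timeline if now - window <= e.t <= now]


frames = [Event(0.0, "video", "desk"), Event(1.0, "video", "speaker"),
          Event(2.0, "video", "window")]
speech = [Event(0.5, "speech", "tell me when you see"),
          Event(1.5, "speech", "something that makes sound")]

timeline = build_timeline(frames, speech)
print([(e.t, e.source) for e in timeline])
print([e.payload for e in recent_context(timeline, now=1.5, window=1.0)])
```

Because each stream is already in time order, the merge never re-sorts the whole history, which is one plausible reason a continuously running agent would organize input this way.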

Google released a two-part demo video to show off how Project Astra works. The first half of the video shows Project Astra running on a Google Pixel phone; the latter half shows the new AI running on a prototype glasses device.

In the demo video, the user moves their Pixel phone around the room with the camera viewfinder open and asks the next-generation Gemini AI assistant, "Tell me when you see something that makes sound." The AI responds by pointing out the speaker on the desk. Other examples in the video include asking what part of the code on a computer screen does, asking which city neighborhood the user is in, and coming up with a band name for a dog and its toy tiger.

While it will be a long time before we see this next-generation AI from Project Astra coming to our daily lives, it's still quite cool to see what the future holds.

As part of Google I/O, the company made several AI announcements, including a new experimental tool called VideoFX. With VideoFX, users can have a high-quality video generated just by typing in a prompt—and it's powered by a new AI model that Google calls Veo.

To power VideoFX, Google's DeepMind team developed a new AI model called Veo. The model was created specifically with video generation in mind and has a deep understanding of natural language and visual semantics, meaning you can give it prompts such as "Drone shot along the Hawaii jungle coastline, sunny day" or "Alpacas wearing knit wool sweaters, graffiti background, sunglasses."

The Veo model is clearly Google's answer to OpenAI's Sora AI video generator. Both can create realistic videos using AI, but Sora's videos are limited to 60 seconds. Google, meanwhile, says Veo can generate 1080p videos longer than a minute, although it doesn't specify how much longer.

Essentially, the new VideoFX tool takes the power of the new Veo AI model and puts it into an easy-to-use video editing tool. You'll be able to write up your prompts and they'll be turned into a video clip. The tool will also feature a Storyboard mode that you can use to create different shots, add music, and export your final video.

Google's VideoFX tool is currently being tested in a private preview in the U.S. The company hasn't said when the VideoFX tool will be publicly available beyond this preview.

For those interested in trying the new Veo-powered tools in VideoFX, you can join the waitlist: Go to http://labs.google/trustedtester and submit your information. Google will be reviewing all submissions on a rolling basis.

Still images taken from videos generated by Google Veo. (credit: Google / Benj Edwards)

On Tuesday at Google I/O 2024, Google announced Veo, a new AI video-synthesis model that can create HD videos from text, image, or video prompts, similar to OpenAI's Sora. It can generate 1080p videos lasting over a minute and edit videos from written instructions, but it has not yet been released for broad use.

Veo reportedly includes the ability to edit existing videos using text commands, maintain visual consistency across frames, and generate video sequences lasting up to and beyond 60 seconds from a single prompt or a series of prompts that form a narrative. The company says it can generate detailed scenes and apply cinematic effects such as time-lapses, aerial shots, and various visual styles.

Since the launch of DALL-E 2 in April 2022, we've seen a parade of new image synthesis and video synthesis models that aim to allow anyone who can type a written description to create a detailed image or video. While neither technology has been fully refined, both AI image and video generators have been steadily growing more capable.

Google's AI-heavy I/O keynote has ended, but Gemini has a long way to go before it can turn Google's AI dreams into reality. While many of Google's AI features are months away, the AI Overviews feature is already live for all US users.

Google is only going to be adding more AI features to the Search page going forward. This includes the ability to ask longer, more complex questions, or even to organize the entire Search page in different sections using AI. If that sounds like too much for you, there's something you can do about it.

Alongside all its new AI features, Google has also introduced something that will help you go back, way back. There's now a new, easy-to-miss button at the top of the search results page simply called "Web." If you switch to it, Google will show you only text links from websites, just like the good old days (although these can include sponsored ads).

The irony of needing to press a button called Web to get results for a web search is not lost on me. Nevertheless, it will be a useful feature for anyone who prefers the old-school approach to Google Search, the one that only showed you the top results from the web, made up of trusted sites.

The Web filter is rolling out on desktop and mobile search globally starting today and tomorrow, and you should see it in your searches soon. If you don't find it in the toolbar, click the More menu, and it should be there.

When you switch to the Web filter, your search results will also get rid of any kind of media or pull-out boxes. You won't see sections for images, videos, or Google News stories. Instead, you'll just see links (which themselves can point to YouTube videos, or news stories), according to Google Search Liaison's post on X.

Google has also confirmed to The Verge that the Web filter will stay like this, even as Google continues to add more AI features to the main page of Google Search.

While the Web filter is a nice touch, it's not the default option, and you'll need to switch to it manually every time (as you do when you switch to the Images or Maps filter). This step has also made something else clear: Google is not offering a way to turn off AI search features on the default Search page. Perhaps we will eventually see Chrome extensions that can alter the Google Search page, but for now, the only escape from Google Search's AI is to switch to the Web filter.
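
If switching manually every time gets old, users have widely reported that the Web filter corresponds to the `udm=14` parameter in the search URL, so a URL carrying it can be bookmarked. Google didn't mention this in its announcement, so treat it as an assumption that could change. A minimal Python sketch that builds such a URL (the function name is made up for this example):

```python
from urllib.parse import urlencode


def web_filter_search_url(query: str) -> str:
    """Build a Google Search URL that opens the Web filter directly.

    Assumption: the Web filter corresponds to the udm=14 URL parameter, as
    users have reported. Google hasn't documented this, so it may change.
    """
    params = {"q": query, "udm": "14"}
    return "https://www.google.com/search?" + urlencode(params)


print(web_filter_search_url("tankless water heater maintenance"))
# https://www.google.com/search?q=tankless+water+heater+maintenance&udm=14
```

In a browser, the same pattern with `%s` in place of the query (`https://www.google.com/search?q=%s&udm=14`) can be added as a custom search engine, under the same assumption.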

Source: www.cybertalk.org – Author: slandau

EXECUTIVE SUMMARY: Last week, over 40,000 business and cyber security leaders converged at the Moscone Center in San Francisco to attend the RSA Conference, one of the leading annual cyber security conferences and expositions worldwide, now in its 33rd year. Across four days, presenters, exhibitors and attendees discussed a wide […]

The post 5 key takeaways for CISOs, RSA Conference 2024 – Source: www.cybertalk.org appeared first on CISO2CISO.COM & CYBER SECURITY GROUP.

A few weeks ago, Best Buy revealed its plans to deploy generative AI to transform its customer service function. It’s betting on the technology to create “new and more convenient ways for customers to get the solutions they need” and to help its customer service reps develop more personalized connections with its consumers. By the […]

The post Navigating the New Frontier of AI-Driven Cybersecurity Threats appeared first on Security Boulevard.

As long as you've signed up for the ChatGPT Plus package ($20 a month), you can make use of custom GPTs—Generative Pre-trained Transformers. These are chatbots with a specific purpose in mind, whether it's vacation planning or scientific research, and there are a huge number of them to choose from.

They're not all high-quality, but several are genuinely useful; I've picked out our favorites below. To find them, just click Explore GPTs in the left-hand navigation bar in ChatGPT on the web. Once you've installed a particular GPT, you'll be able to get at it from the same navigation bar.

These GPTs have replaced plugins on the platform, and offer more focused experiences and features than the general ChatGPT bot. A lot of them also come with extra knowledge that ChatGPT doesn't have, and you can even have a go at creating your own: From the GPTs directory, click + Create up in the top right corner.

Travel portal Kayak has its very own GPT, ready to answer any questions you might have regarding a particular destination or how to get there. Ask about the best time to visit a city, or about the cost of a flight somewhere, or some places you can get to on a particular budget. It's great for getting inspiration about a trip or working out the finer details, and when I tested it on my home city of Manchester, UK, the answers that it came up with were reassuringly accurate—it even correctly told me the price you'll currently pay for a beer.

The Dall-E image generator (another OpenAI property) is built right into ChatGPT, so you get image creation and manipulation tools out of the box, but Hot Mods is a bit more specific: It takes pictures you've already got and turns them into something else. Add new elements, change the lighting or the vibe, swap out the background, turn photos into paintings and vice versa, and so on. The image transformations aren't always spot on—such is the nature of AI art—but you can have a lot of fun playing around with this.

Want to know the perfect wine for a particular dish, event, or time of year? Of course you do, which is why Wine Sommelier can be so helpful. Put it to the test and it'll tell you which wines go well with chicken salad, which wines suit a vegan diet, and which wines will be well-received at a wedding. You can also quiz the GPT on related topics, like wine production processes or vineyards of note. Whether you're a wine expert or you want some tips on how to become one, the Wine Sommelier bot is handy to have around.

You might already be familiar with Canva's straightforward and intuitive graphic design tools on the web and mobile, and its official GPT enables you to get creative inside the ChatGPT interface as well. Everything from posters to flyers to social media posts is covered, and you can be as deliberate or as vague as you like when it comes to the designs Canva comes up with. The GPT is able to produce text for your projects as well as images, and will ask you questions if it needs more guidance about the graphics you're asking for.

SciSpace is an example of a GPT that brings a wealth of extra knowledge with it, above and beyond ChatGPT's own training data—it can dig into more than 278 million research papers, in fact, so you're able to ask it any question to get the latest academic thinking on a particular topic. Whether you want to know about potential links between exercise and heart health, or whale migration patterns, or anything else, SciSpace has you covered. You can also upload research papers and ask the bot to analyze and summarize them for you.

This is a great idea: Get AI to produce custom coloring books for you, based around any idea or subject you like. Obviously you're going to need a printer as well (unless you're doing the coloring digitally), but there's a lot of fun to be had in playing around with designs and topics, and you can even upload your own images and have them converted to coloring book format. Some of the usual AI weirdness does occasionally creep into the pictures, but they're mostly spot on in terms of bold, black lines and big white spaces.

Wolfram Alpha is one of the best resources on the web, an outstanding collection of algorithms and knowledge, and the Wolfram GPT brings a lot of this usefulness into ChatGPT as well. This bot is perfect for complex math calculations and graphs, for conversions between different units, and for asking questions about any of the knowledge gathered by humanity so far. The breadth of the capabilities here is seriously impressive, and when needed the GPT is able to pull data and images from Wolfram Alpha seamlessly.

The group recommends that Congress draft emergency spending legislation to boost U.S. investments in artificial intelligence, including new R&D and testing standards to understand the technology's potential harms.

The post Senators Urge $32 Billion in Emergency Spending on AI After Finishing Yearlong Review appeared first on SecurityWeek.

The 2024 RSA Conference can be summed up in two letters: AI. AI was everywhere. It was the main topic of more than 130 sessions. Almost every company with a booth in the Expo Hall advertised AI as a component in their solution. Even casual conversations with colleagues over lunch turned to AI. In 2023, …

The post The Rise of AI and Blended Attacks: Key Takeaways from RSAC 2024 appeared first on DTEX Systems Inc.

The post The Rise of AI and Blended Attacks: Key Takeaways from RSAC 2024 appeared first on Security Boulevard.

An image Ilya Sutskever tweeted with his OpenAI resignation announcement. From left to right: New OpenAI Chief Scientist Jakub Pachocki, President Greg Brockman, Sutskever, CEO Sam Altman, and CTO Mira Murati. (credit: Ilya Sutskever / X)

On Tuesday evening, OpenAI Chief Scientist Ilya Sutskever announced that he is leaving the company he co-founded, six months after he participated in the coup that temporarily ousted OpenAI CEO Sam Altman. Jan Leike, a fellow member of Sutskever's Superalignment team, is reportedly resigning with him.

"After almost a decade, I have made the decision to leave OpenAI," Sutskever tweeted. "The company’s trajectory has been nothing short of miraculous, and I’m confident that OpenAI will build AGI that is both safe and beneficial under the leadership of @sama, @gdb, @miramurati and now, under the excellent research leadership of @merettm. It was an honor and a privilege to have worked together, and I will miss everyone dearly."

Sutskever has been with the company since its founding in 2015 and is widely seen as one of the key engineers behind some of OpenAI's biggest technical breakthroughs. As a former OpenAI board member, he played a key role in the removal of Sam Altman as CEO in the shocking firing last November. While it later emerged that Altman's firing primarily stemmed from a power struggle with former board member Helen Toner, Sutskever sided with Toner and personally delivered the news to Altman that he was being fired on behalf of the board.

AI is a fascinating field, one that has seen a ton of advancements in recent years. In fact, OpenAI's ChatGPT has singlehandedly increased the hype around generative AI to new levels. But the days of ChatGPT being the only viable AI chatbot option are long gone. Now, others are available, including Anthropic's Claude AI, which has some key differences from the AI chatbot most people are familiar with. The question is this: Can Anthropic's version of ChatGPT stand up to the original?

Anthropic is an AI startup co-founded by ex-OpenAI members. It's especially notable because the company has a much stricter set of ethics surrounding its AI than OpenAI currently does. Its co-founders include the Amodei siblings, Daniela and Dario, who were instrumental in creating GPT-3.

The Amodei siblings, as well as others, left OpenAI and founded Anthropic to create an alternative to ChatGPT that addressed their AI safety concerns better. One way that Anthropic has differentiated itself from OpenAI is by training its AI to align with a "document of constitutional AI principles," like opposition to inhumane treatment, as well as support of freedom and privacy.

Claude AI, whose latest model is Claude 3, is Anthropic's version of ChatGPT. Like ChatGPT, Claude 3 is an AI chatbot with its own large language model (LLM) running behind it. However, it is designed by a different company, and thus differs in some ways from OpenAI's current GPT model. It's probably the strongest competitor among the various ChatGPT alternatives that have popped up, and Anthropic continues to update it with a ton of new features and limitations.

Anthropic technically offers four versions of Claude, including Claude 1, Claude 2, Claude-Instant, and the latest update, Claude 3. While each is similar in nature, the language models all offer some subtle differences in capability.

If you have any experience using ChatGPT, you're already well on your way to using Claude, too. The system uses a simple chat box, in which you can post queries to get responses from the system. It's as simple as it gets, and you can even copy the responses Claude offers, retry your question, or ask it to provide additional feedback. It's very similar to ChatGPT.

While Claude can do a lot of the same things that ChatGPT can, there are some limitations. Where ChatGPT now has internet access, Claude is only trained on the information that the developers at Anthropic have provided it with, which is limited to August 2023, according to the latest notes from Anthropic. As such, it cannot look beyond that scope.

Claude also cannot create images, something that you can now do in ChatGPT thanks to the introduction of DALL-E 3. The company does offer options similar to ChatGPT's, including a cheaper and faster model, Claude-Instant, that is less capable than Claude 3. The previous update, Claude 2, is considered on par with ChatGPT's GPT-4 model. Claude 3, on the other hand, has actually outperformed GPT-4 in a number of areas.

Of course, all of that pales in comparison to what OpenAI has made possible with the newly released GPT-4o. Although not all of its groundbreaking new features have been released yet, OpenAI has really upped the ante, bringing full multimodal support to the AI chatbot. Now, ChatGPT will be able to respond directly to questions, you'll be able to interrupt its answers when using voice mode, and you can even capture both live video and your device's display and share them directly with the chatbot to get real-time responses.

Claude AI is actually free to try, though that freedom comes with some limitations, like how many questions you can ask and how much data the chatbot can process. There is a premium subscription, called Claude Pro, which will grant you additional data for just $20 a month.

Unlike ChatGPT's premium subscription, using the free version of Claude actually gives you access to Claude's latest model, though you miss out on the added data tokens and higher priority that a subscription offers.

Like ChatGPT, Claude offers a free version. Both are solid options to try out the AI chatbots, but if you plan to use them extensively, it's definitely worth looking at the more premium subscription plans that they offer.

While Claude gives you access to its more advanced Claude 3 in the free version, it does come with severe limits. You can't process PDFs larger than 10 megabytes, for instance, and its usage limits can vary depending on the current load. Anthropic hasn't shared an exact limit or even a range that you can expect, but CNBC estimates it's about five summaries every four hours. At the end of the day, it depends on how many people are using the system when you are. The nice thing about Claude 3 is that it brings in a ton of new features you can try out in Claude's free version, including multilingual capabilities, vision and image processing, and easier-to-steer prompting.

ChatGPT used to limit free users to GPT-3.5, locking them to the older and thus less reliable model. That, however, has changed with the release of GPT-4o, which introduces limited usage rates for free ChatGPT accounts. OpenAI hasn't shared specifics on how limited GPT-4o is with the free version, but it does give you access to all the improvements the system offers, until you eventually run out of usage and get bumped back down to GPT-3.5.

Still, that does mean you can technically use GPT-4o without paying a single cent. However, there are some limitations in place if the service is extremely busy, and you may see your requests taking much longer or even returned if usage is high. It's also possible that your free ChatGPT account may not even be available during certain times of high activity, as OpenAI sometimes limits access to free accounts to help mitigate high server usage.

It's also important to note that GPT-3.5 is more likely to hallucinate than GPT-4 and the newer GPT-4o, so it's important to double-check all the information that it provides. (That said, you should always double-check important information generated by AI.) The free version of ChatGPT also now has access to the GPT Store: Here, you can make use of various GPTs, which personalize the chatbot to respond to your questions and queries in different ways. Claude doesn't currently offer any kind of system like this, so you'll have to word your prompts carefully to get the most out of it.

If you're planning to use Claude or ChatGPT extensively, it might be worth upgrading to one of the currently available monthly plans. Both Anthropic and OpenAI offer subscription plans, so how do you decide which one to purchase? Here's how they stack up against each other.

Claude Pro costs $20 a month. Unlike ChatGPT Plus (which gives you access to OpenAI's GPT-4 and GPT-4 Turbo models), Claude already offers its latest and greatest model in the free and limited plan. As such, subscribing for $20 a month will simply get you at least five times the usage of the free service. That makes it easier to send longer messages and have longer conversations before the AI's context tokens run out (context tokens determine how much information the AI can take into account when it responds), and it also increases the length of files that you can attach. Claude Pro will also get you faster response times and higher availability and priority when demand is high.

On the other hand, ChatGPT Plus seems to offer a bit more for that $20 subscription, as it nets you GPT-4 and GPT-4 Turbo, OpenAI's most complex and successful language models. These models are capable of far more than the free systems available in ChatGPT without a subscription. Subscribing to ChatGPT Plus will also get you faster response times, priority access when demand for the chatbot is high, and access to the newest features, such as DALL-E 3's image creation option.

Accuracy is an area that AI language models, such as those that run Claude and ChatGPT, still struggle with. While these models can be accurate and are trained on terabytes of data, they have been known to "hallucinate" and create their own facts and data.

My own experience has shown that Claude tends to be more factually accurate when summarizing things than ChatGPT, but that's based on a very small subset of data. And Claude's data is extremely outdated if you're looking to discuss recent happenings. It also doesn't have open access to the internet, so you're more limited in the possible ways that it can hallucinate or pull from bad sources, which is a blessing and a curse, as it locks you out of the good sources, too.

No matter which service you go with, they're both going to have problems, and you'll want to double-check any information that ChatGPT or Claude provides you with to ensure it isn't plagiarized from something else—or just entirely made up.

There are some areas where Claude is better than ChatGPT, and according to Anthropic's own data, Claude 3 outperforms ChatGPT's latest models. The biggest difference, for starters, is that Claude takes a much safer approach to AI, with more restrictions placed on its language models that ChatGPT just doesn't offer. This includes more restrictive ethics, though ChatGPT has continued to evolve how it approaches the ethics of AI as a whole.

Claude also offers longer context token limits than ChatGPT currently does. Tokens are broken-down pieces of text the AI can understand. (OpenAI says one token is roughly four characters of text.) Claude 3 offers 200,000 tokens, while GPT-4 tops out at 32,000 in some plans. The larger limit may be useful for those who want to have longer conversations before they have to worry about the AI model losing track of what they are talking about. It also means that Claude is much better at analyzing large files, which is something to keep in mind if you plan to use it for that sort of thing.
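To make those context-window figures concrete, here is a rough back-of-the-envelope calculation using OpenAI's "about four characters per token" rule of thumb. It is only an estimate: real tokenizers vary by model and language, and the helper names (`estimate_tokens`, `approx_char_capacity`, `fits`) and the 300,000-character example document are hypothetical, chosen for illustration.

```python
# Rough context-window arithmetic based on OpenAI's "one token is roughly
# four characters" rule of thumb. Real tokenizers vary, so these are estimates.

CHARS_PER_TOKEN = 4  # heuristic, not an exact figure

# Context-window sizes (in tokens) as quoted in the article.
CONTEXT_WINDOWS = {
    "Claude 3": 200_000,
    "GPT-4 (some plans)": 32_000,
}


def estimate_tokens(text_length_chars: int) -> int:
    """Estimate a token count from a character count, rounding up."""
    return -(-text_length_chars // CHARS_PER_TOKEN)  # ceiling division


def approx_char_capacity(window_tokens: int) -> int:
    """Approximate how many characters of text fit in a context window."""
    return window_tokens * CHARS_PER_TOKEN


def fits(text_length_chars: int, window_tokens: int) -> bool:
    """True if the estimated token count fits inside the window."""
    return estimate_tokens(text_length_chars) <= window_tokens


if __name__ == "__main__":
    doc_chars = 300_000  # hypothetical long document (about 75,000 tokens)
    for model, window in CONTEXT_WINDOWS.items():
        print(f"{model}: ~{approx_char_capacity(window):,} chars; "
              f"300k-char doc fits: {fits(doc_chars, window)}")
    # Claude 3: ~800,000 chars; 300k-char doc fits: True
    # GPT-4 (some plans): ~128,000 chars; 300k-char doc fits: False
```

By this estimate, a 200,000-token window holds roughly 800,000 characters of text, versus roughly 128,000 characters for a 32,000-token window.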

However, there are also several areas where ChatGPT comes out ahead. Access to the internet is a big one: Having open access to the internet means ChatGPT can stay up to date on the latest information on the web. It also means the bot is susceptible to more false information, though, so there's definitely a trade-off. With the introduction of GPT-4o's upcoming features like voice mode, ChatGPT will be able to respond to your queries in real time: If Claude has plans for a similar feature set, it hasn't discussed them publicly just yet.

OpenAI has also made it easy to create your own custom GPTs using its API and language models, something that, as I noted above, Claude doesn't support just yet. In addition, ChatGPT gives you in-chat image creation thanks to DALL-E 3, which is actually impressive for AI image generation.

Ultimately, Claude and ChatGPT are both great AI chatbots that offer a ton of usability for those looking to dip their toes in the AI game. If you want the latest cutting-edge features, though, the trophy currently goes to ChatGPT, as the things you're able to do with GPT-4o open entirely new doors that Claude isn't trying to open just yet.

After backlash over Google's search engine becoming the primary traffic source for deepfake porn websites, Google has started burying these links in search results, Bloomberg reported.

Over the past year, Google has been driving millions of users to controversial sites distributing AI-generated pornography depicting real people in fake sex videos that were created without their consent, Similarweb found. While anyone can be targeted (police are already bogged down dealing with a flood of fake AI child sex images), female celebrities are the most common victims. And their fake non-consensual intimate imagery is easily discoverable on Google by searching just about any famous name with the keyword "deepfake," Bloomberg noted.

Google refers to this content as "involuntary fake" or "synthetic pornography." The search engine provides a path for victims to report that content whenever it appears in search results. And when processing these requests, Google also removes duplicates of any flagged deepfakes.

During the kickoff keynote for Google I/O 2024, the general tone seemed to be, “Can we have an extension?” Google’s promised AI improvements are definitely taking center stage here, but with a few exceptions, most are still in the oven.

That’s not too surprising—this is a developer conference, after all. But it seems like consumers will have to wait a while longer for their promised "Her" moment. Here’s what you can expect once Google’s new features start to arrive.

Maybe the most impactful addition for most people will be expanded Gemini integration in Google Search. While Google already had a “generative search” feature in Search Labs that could jot out a quick paragraph or two, everyone will soon get the expanded version, “AI Overviews.”

In some searches, AI Overviews can generate multiple paragraphs of information in response to queries, complete with subheadings. They will also provide more context than their predecessor and can handle more detailed prompts.

For instance, if you live in a sunny area with good weather and ask for “restaurants near you,” Overviews might give you a few basic suggestions, but also a separate subheading with restaurants that have good patio seating.

In the more traditional search results page, you’ll instead be able to use “AI organized search results,” which eschew traditional SEO to intelligently recommend web pages to you based on highly specific prompts.

For instance, you can ask Google to “create a gluten free three-day meal plan with lots of veggies and at least two desserts,” and the search page will create several subheadings with links to appropriate recipes under each.

Google is also bringing AI to how you search, with an emphasis on multimodality—meaning you can use it with more than text. Specifically, an “Ask with Video” feature is in the works that will allow you to simply point your phone camera at an object, ask for identification or repair help, and get answers via generative search.

Google didn't directly address how it's handling criticism that AI search results essentially steal content from sources around the web without users needing to click through to the original source. That said, demonstrators highlighted multiple times that these features bring you to useful links you can check out yourself, perhaps covering their bases in the face of these critiques.

AI Overviews are already rolling out to Google users in the US, with AI Organized Search Results and Ask with Video set for “the coming weeks.”

Another of the more concrete features in the works is “Ask Photos,” which plays with multimodality to help you sort through the hundreds of gigabytes of images on your phone.

Say your daughter took swimming lessons last year and you’ve lost track of your first photos of her in the water. Ask Photos will let you simply ask, “When did my daughter learn to swim?” Your phone will automatically know who you mean by “your daughter,” and surface images from her first swimming lesson.

That’s similar to searching your photo library for pictures of your cat by just typing “cat,” sure, but the idea is that the multimodal AI can support more detailed questions and understand what you’re asking with greater context, powered by Gemini and the data already stored on your phone.

Other details are light, with Ask Photos set to debut “in the coming months.”

Here’s where we get into more pie-in-the-sky stuff. Project Astra is the most C-3PO we’ve seen AI get yet. The idea is you’ll be able to load up the Gemini app on your phone, open your camera, point it around, and ask questions and get help based on what your phone sees.

For instance, point at a speaker, and Astra will be able to tell you what parts are in the hardware and how they’re used. Point at a drawing of a cat with dubious vitality, and Astra will answer your riddle with “Schrödinger’s Cat.” Ask it where your glasses are, and if Astra was looking at them earlier in your shot, it will be able to tell you.

This is maybe the classical dream when it comes to AI, and quite similar to OpenAI's recently announced GPT-4o, so it makes sense that it’s not ready yet. Astra is set to come “later this year,” but curiously, it’s also supposed to work on AR glasses as well as phones. Perhaps we’ll be learning of a new Google wearable soon.

It’s unclear when this feature will be ready, since it seems to be more of an example for Google’s improved AI models than a headliner, but one of the more impressive (and possibly unsettling) demos Google showed off during I/O involved creating a custom podcast hosted by AI voices.

Say your son is studying physics in school, but is more of an audio learner than a text-oriented one. Supposedly, Gemini will soon let you dump written PDFs into Google’s NotebookLM app and ask Gemini to make an audio program discussing them. The app will generate what feels like a podcast, hosted by AI voices talking naturally about the topics from the PDFs.

Your son will then be able to interrupt the hosts at any time to ask for clarification.

Hallucination is obviously a major concern here, and the naturalistic language might be a little “cringe,” for lack of a better word. But there’s no doubt it’s an impressive showcase…if only we knew when we’ll be able to recreate it.

There are a few other tools in the works that seem purpose-built for your typical consumer, but for now, they’re going to be limited to Google’s paid Workspace (and in some cases Google One AI Premium) plans.

The most promising of these is Gmail integration, which takes a three-pronged approach. The first is summaries, which can read through a Gmail thread and break down key points for you. That’s not too novel, nor is the second prong, which allows AI to suggest contextual replies for you based on information in your other emails.

But Gemini Q&A seems genuinely transformative. Imagine you’re looking to get some roofing work done and you’ve already emailed three different construction firms for quotes. Now, you want to make a spreadsheet of each firm, their quoted price, and their availability. Instead of having to sift through each of your emails with them, you can instead ask a Gemini box at the bottom of Gmail to make that spreadsheet for you. It will search your Gmail inbox and generate a spreadsheet within minutes, saving you time and perhaps helping you find missed emails.

This sort of contextual spreadsheet building will also be coming to apps outside of Gmail, but Google was also proud to show off its new “Virtual Gemini Powered Teammate.” Still in the early stages, this upcoming Workspace feature is kind of like a mix between a typical Gemini chat box and Astra. The idea is that organizations will be able to add AI agents to their Slack equivalents that will be on call to answer questions and create documents on a 24/7 basis.

Gmail’s Gemini-powered summarization features will be rolling out this month to Workspace Labs users, with its other Gmail features coming to Labs in July.

Earlier this year, OpenAI replaced ChatGPT plugins with “GPTs,” allowing users to create custom versions of its ChatGPT chatbots built to handle specific questions. Gems are Google’s answer to this, and work relatively similarly. You’ll be able to create a number of Gems that each have their own page within your Gemini interface, and each answer to a specific set of instructions. In Google’s demo, suggested Gems included examples like “Yoga Bestie,” which offers exercise advice.

Gems are another feature that won’t see the light of day until a few months from now, so for now, you'll have to stick with GPTs.

Fresh off the muted reception to the Humane AI Pin and Rabbit R1, AI aficionados were hoping that Google I/O would show Gemini’s answer to the promises behind these devices, i.e. the ability to go beyond simply collating information and actually interact with websites for you. What we got was a light tease with no set release date.

In a pitch from Google CEO Sundar Pichai, we saw the company’s intention to make AI Agents that can “think multiple steps ahead.” For example, Pichai talked about the possibility for a future Google AI Agent to help you return shoes. It could go from “searching your inbox for the receipt,” all the way to “filling out a return form,” and “scheduling a pickup,” all under your supervision.

All of this had a huge caveat in that it wasn’t a demo, just an example of something Google wants to work on. “Imagine if Gemini could” did a lot of heavy lifting during this part of the event.

In addition to highlighting specific features, Google also touted the release of new AI models and updates to its existing AI models. From generative models like Imagen 3 to larger and more contextually intelligent builds of Gemini, these aspects of the presentation were intended more for developers than end users, but there are still a few interesting points to pull out.

The key standouts are the introduction of Veo and Music AI Sandbox, which generate AI video and sound, respectively. There aren’t many details on how they work yet, but Google brought out big stars like Donald Glover and Wyclef Jean for promising quotes like, “Everybody’s gonna become a director” and, “We digging through the infinite crates.”

For now, the best demos we have for these generative models are in examples posted to celebrity YouTube channels.

Google also wouldn’t stop talking about Gemini 1.5 Pro and 1.5 Flash, new versions of its LLM primarily meant for developers, which support larger token counts and therefore more context. These probably won’t matter much to you, but pay attention to Gemini Advanced.

Gemini Advanced is already on the market as Google’s paid Gemini plan, and allows a larger number of questions, some non-developer interaction with Gemini 1.5 Pro, integration with various apps such as Docs (including some but not all of today's announced Workspace features), and uploads of files like PDFs.

Some of Google’s promised features sound like they’ll need you to have a Gemini Advanced subscription, specifically those that want you to upload documents so the chatbot can answer questions related to them or riff off them with its own content. We don’t know for sure yet what will be free and what won’t, but it’s yet another caveat to keep in mind for Google’s “keep your eye on us” promises this I/O.

That's a wrap on Google's general announcements for Gemini. That said, they also made announcements for new AI features in Android, including a new Circle to Search ability and using Gemini for scam detection. (Not Android 15 news, however: That comes tomorrow.)

A video still of Project Astra demo at the Google I/O conference keynote in Mountain View on May 14, 2024. (credit: Google)

Just one day after OpenAI revealed GPT-4o, which it bills as being able to understand what's taking place in a video feed and converse about it, Google announced Project Astra, a research prototype that features similar video comprehension capabilities. It was announced by Google DeepMind CEO Demis Hassabis on Tuesday at the Google I/O conference keynote in Mountain View, California.

Hassabis called Astra "a universal agent helpful in everyday life." During a demonstration, the research model showcased its capabilities by identifying sound-producing objects, providing creative alliterations, explaining code on a monitor, and locating misplaced items. The AI assistant also exhibited its potential in wearable devices, such as smart glasses, where it could analyze diagrams, suggest improvements, and generate witty responses to visual prompts.

Google says that Astra uses the camera and microphone on a user's device to provide assistance in everyday life. By continuously processing and encoding video frames and speech input, Astra creates a timeline of events and caches the information for quick recall. The company says that this enables the AI to identify objects, answer questions, and remember things it has seen that are no longer in the camera's frame.
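Google hasn't published how Astra's timeline works internally. As a purely hypothetical illustration of the general idea of caching observations for later recall, this sketch keeps a bounded, time-ordered list of per-frame observations and answers "where did I last see X?" by scanning from newest to oldest. The `FrameEvent` and `TimelineCache` names, the text labels (assumed to come from some object detector), and the location strings are all assumptions; a real system would more likely store learned encodings than plain labels.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, FrozenSet, Optional


@dataclass(frozen=True)
class FrameEvent:
    """One hypothetical observation extracted from a video frame."""
    timestamp: float          # seconds since the session started
    objects: FrozenSet[str]   # labels from an assumed object detector
    location: str             # coarse description of where the camera was pointing


class TimelineCache:
    """Bounded, time-ordered cache of observations for quick recall."""

    def __init__(self, max_events: int = 1000) -> None:
        # deque(maxlen=...) silently drops the oldest entry once full.
        self._events: Deque[FrameEvent] = deque(maxlen=max_events)

    def add(self, event: FrameEvent) -> None:
        """Append a new observation (assumed to arrive in timestamp order)."""
        self._events.append(event)

    def last_seen(self, label: str) -> Optional[FrameEvent]:
        """Return the most recent observation containing `label`, if any."""
        for event in reversed(self._events):  # newest first
            if label in event.objects:
                return event
        return None


if __name__ == "__main__":
    cache = TimelineCache()
    cache.add(FrameEvent(1.0, frozenset({"glasses", "apple"}), "desk by the window"))
    cache.add(FrameEvent(5.0, frozenset({"speaker"}), "bookshelf"))
    # The glasses are no longer in frame, but the cache still remembers them.
    hit = cache.last_seen("glasses")
    print(hit.location if hit else "not seen")  # prints "desk by the window"
```

The fixed-size deque is one simple way to bound memory: old observations fall off automatically, so anything seen long enough ago is eventually forgotten.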

"Google will do the Googling for you," says firm's search chief. (credit: Google)

Search is still important to Google, but it will soon change. At its all-in-on-AI Google I/O event Tuesday, the company introduced a host of AI-enabled features coming to Google Search at various points in the near future, which will "do more for you than you ever imagined."

"Google will do the Googling for you," said Liz Reid, Google's head of Search.

It's not AI in every search, but it will seemingly be hard to avoid a lot of offers to help you find, plan, and brainstorm things. "AI Overviews," the successor to the Search Generative Experience, will provide summary answers to questions, along with links to sources. You can also soon submit a video as a search query, perhaps to identify objects or provide your own prompts by voice.

Source: www.cybertalk.org – Author: slandau By Ana Paula Assis, Chairman, Europe, Middle East and Africa, IBM. EXECUTIVE SUMMARY: From the shop floor to the boardroom, artificial intelligence (AI) has emerged as a transformative force in the business landscape, granting organizations the power to revolutionize processes and ramp up productivity. The scale and scope of this […]

The post AI is changing the shape of leadership – how can business leaders prepare? – Source: www.cybertalk.org first appeared on CISO2CISO.COM & CYBER SECURITY GROUP.

Highlights from the 2023 World CyberCon in Mumbai.

A Comprehensive Platform for Learning & Innovation

The World CyberCon META Edition 2024 promises a rich agenda with topics ranging from the nuances of national cybersecurity strategies to the latest in threat intelligence and protection against advanced threats. Discussions will span a variety of crucial subjects including:
